All software and hardware used or referenced in this guide belong to their respective vendors. We developed this guide on our own development infrastructure, and it may or may not work on other systems and technical infrastructure. We are not liable for any direct or indirect problems caused to users of this guide.

The purpose of this document is to give users adequate information to implement an advanced reinforcement learning model. To achieve this, we use the self-driving car problem, which may become a reality for the automobile industry. The problem is to be solved using Decision Trees, Random Forests (a supervised machine learning model), Convolutional Neural Networks & Deep Q Networks.

Human drivers identify lanes on the road to keep their vehicles within lane constraints, which keeps traffic smooth and minimizes the chance of collisions with other cars due to lane misalignment. It is also a critical task for an autonomous vehicle to perform. It turns out that lane markings on roads can be recognized using well-known computer vision techniques. Lane changing is another important aspect of self-driving cars, which must incorporate a lane changing system for smooth & efficient driving. When a self-driving car changes lanes, the most important thing is to wait until there is a clear gap in the traffic, then move safely and smoothly into the center of the desired lane, while maintaining space in the flow of traffic so that no other vehicle is forced to slow down, speed up, or change lanes to avoid a collision.

Human-driven cars are controlled by a person sitting behind the steering wheel. The driver needs to be wary of a variety of things: he or she must keep the vehicle within the permissible speed, follow lane discipline, and so on. All of this changes with the rapid development of complex technologies that are slowly emerging in different cars and are going to take over functions normally performed by the driver. This development is leading us toward self-driving and driverless cars.

Step 1 : Defining a Clear Problem Statement

Step 2 : Identification of the Input Data (Camera Images, Sensor Images)

Step 3 : Application of Computer Vision Techniques on the Input Road Image for Lane Detection or Identification

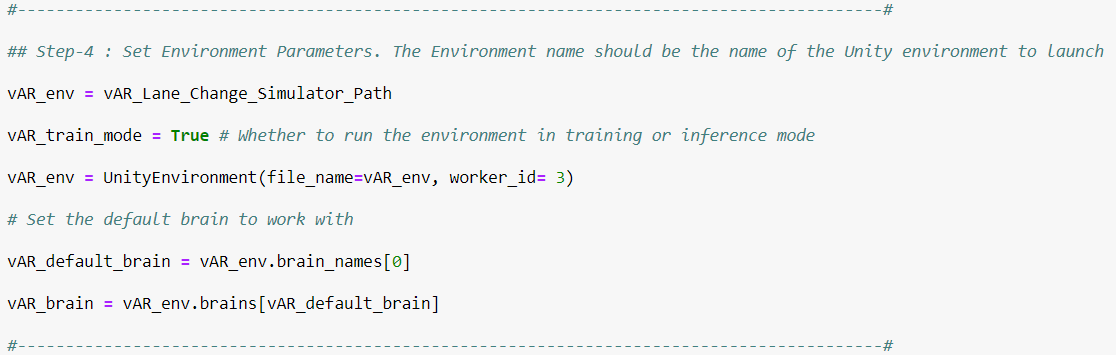

Step 4 : Once the Lane is Detected, the next step is to incorporate a lane changing system. First, the environment is downloaded & started.

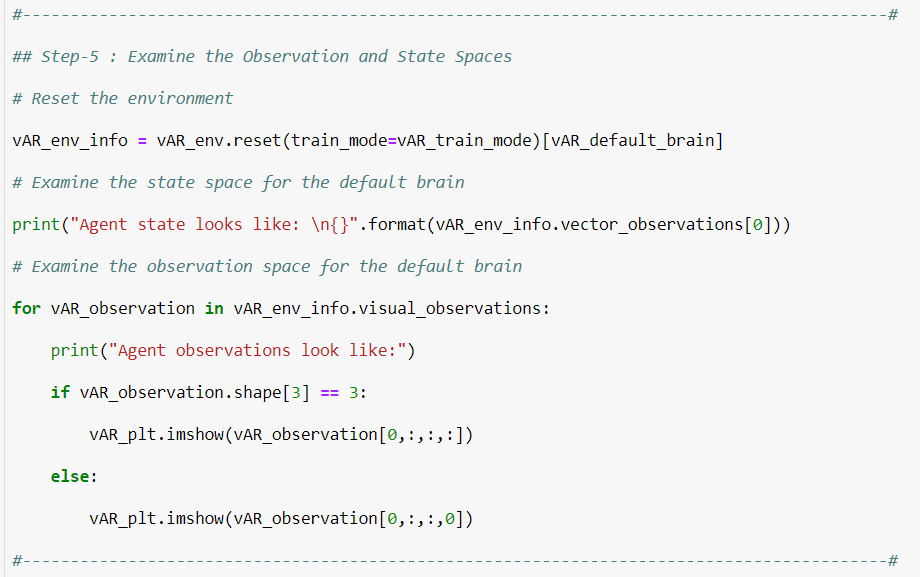

Step 5 : Once the Environment is initialized we need to examine the Observation & State Spaces

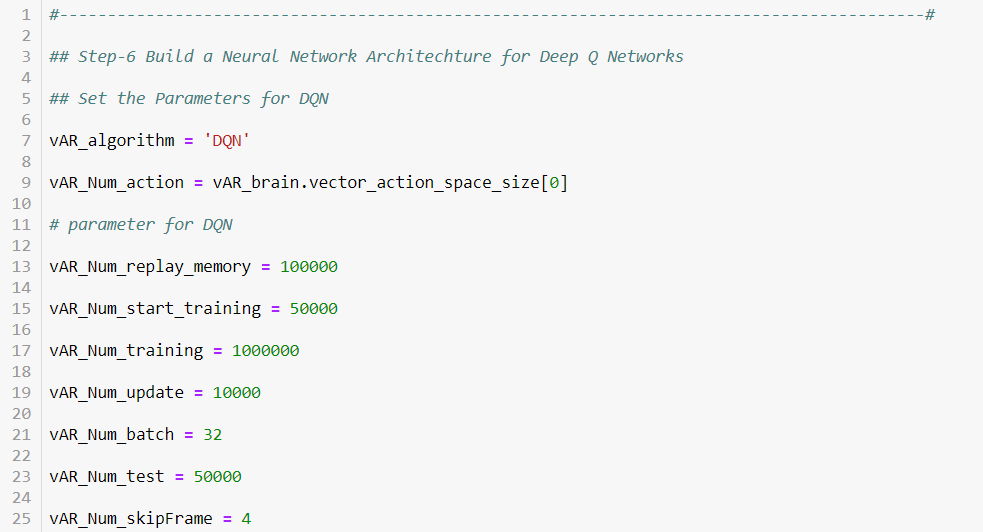

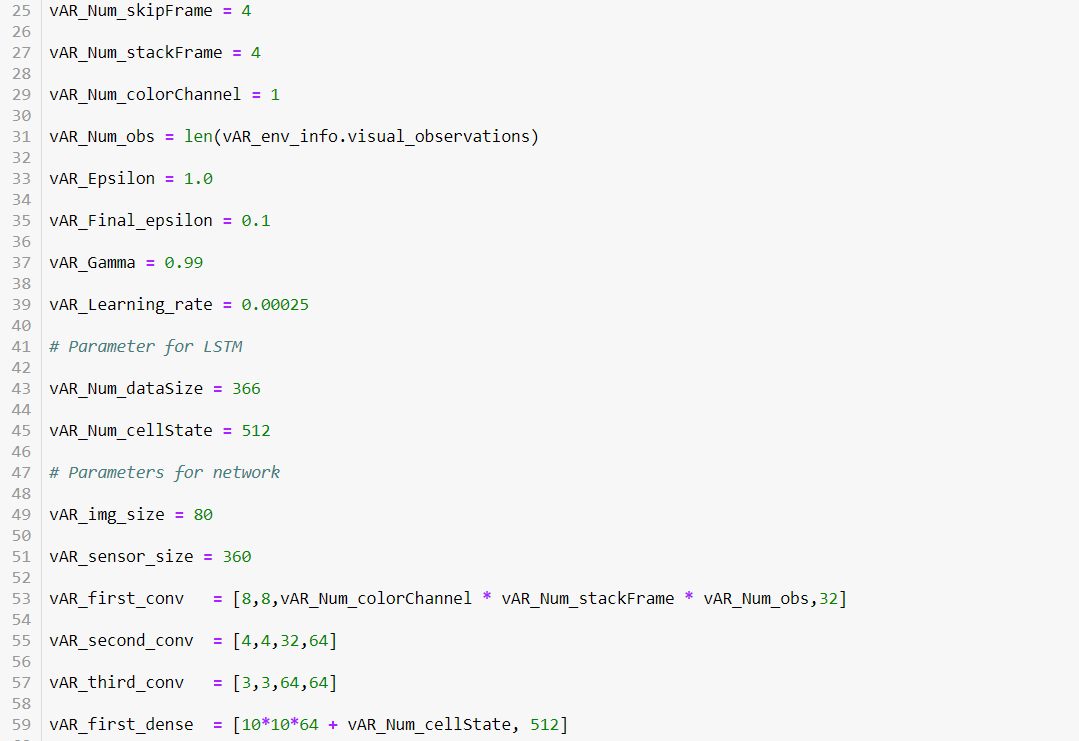

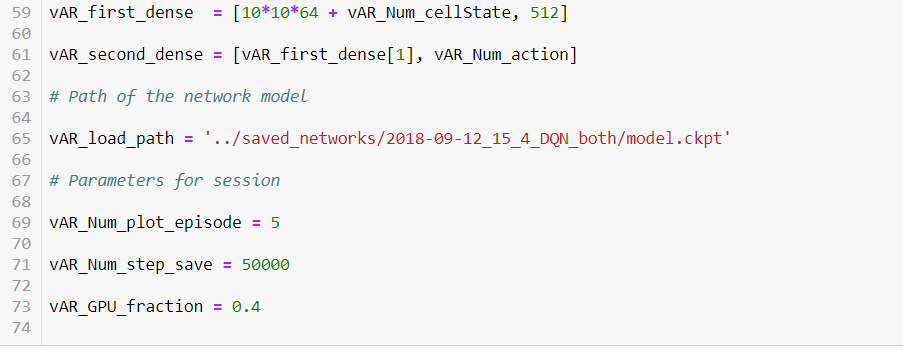

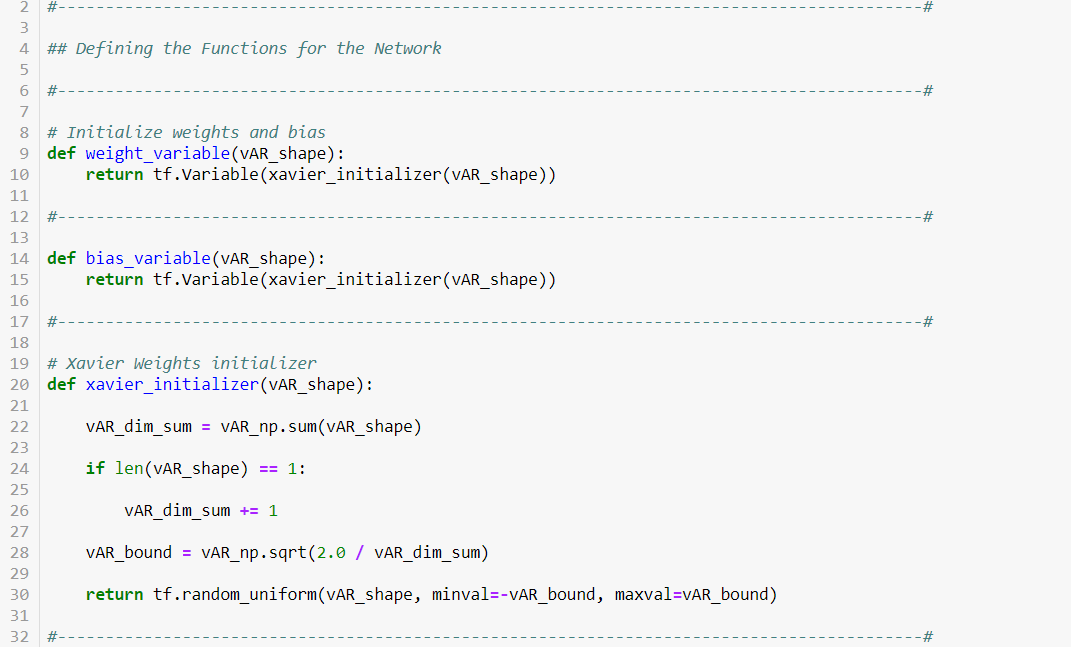

Step 6 : Train & test the agent on Actions & States for maximum reward using a deep reinforcement learning algorithm called Deep Q Networks.

Model selection is the process of choosing between different machine learning, deep learning or reinforcement learning approaches - e.g. SVM, CNN, Deep Q Learning etc. or choosing between different hyperparameters or sets of features for the same machine/deep/reinforcement learning approach - e.g. deciding between the polynomial degrees/complexities for linear regression.
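The polynomial-degree case mentioned above can be sketched as follows. This is a minimal illustration, assuming a held-out validation split and mean squared error as the selection criterion. The function name `select_polynomial_degree` and the synthetic data are hypothetical and not part of the original lab.

```python
import numpy as np

def select_polynomial_degree(x_train, y_train, x_val, y_val, degrees):
    """Return (best_degree, errors), where errors maps degree -> validation MSE."""
    errors = {}
    for degree in degrees:
        # Fit on the training split only.
        coefficients = np.polyfit(x_train, y_train, degree)
        predictions = np.polyval(coefficients, x_val)
        errors[degree] = float(np.mean((predictions - y_val) ** 2))
    # The candidate with the lowest validation error is selected.
    best_degree = min(errors, key=errors.get)
    return best_degree, errors

# Synthetic quadratic data with a little noise (illustrative only).
rng = np.random.default_rng(0)
x = np.linspace(-3.0, 3.0, 60)
y = 0.5 * x**2 - x + 2.0 + rng.normal(scale=0.1, size=x.shape)

# Even-indexed samples are used for training, odd-indexed for validation.
x_train, y_train = x[::2], y[::2]
x_val, y_val = x[1::2], y[1::2]

best_degree, errors = select_polynomial_degree(
    x_train, y_train, x_val, y_val, degrees=[1, 2, 3, 4]
)
print(best_degree, errors)
```

On this data, a straight line (degree 1) has a much larger validation error than the higher degrees, while degrees 2 to 4 perform almost identically, which is in line with the point that several well-performing choices are often close to each other.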

The choice of the actual learning algorithm is less important than you'd think - there may be a "best" algorithm for a particular problem, but often its performance is not much better than other well-performing approaches for that problem.

There may be certain qualities you look for in a model:

Our problem here is a reinforcement learning problem: identify lanes & drive the car along the identified lane with good steering control. Various algorithms could be used, for example Q-Learning, State-Action-Reward-State-Action (SARSA) and Deep Deterministic Policy Gradient (DDPG). These could be alternatives to DQNs. Below are the points that make Deep Q Networks an ideal choice.

Feature engineering is a crucial step in the process of predictive modeling. It involves transforming a given feature space, typically using mathematical functions, with the objective of reducing the modeling error for a given target. However, there is no well-defined basis for performing effective feature engineering. It involves domain knowledge, intuition, and most of all, a lengthy process of trial and error. The human attention involved in overseeing this process significantly influences the cost of model generation. In this implementation we present a new framework to automate feature engineering. The reinforcement learning model, Deep Q-learning, uses a neural network architecture.

Feature engineering is the most important art in machine learning; it makes a huge difference between a good model and a bad model.

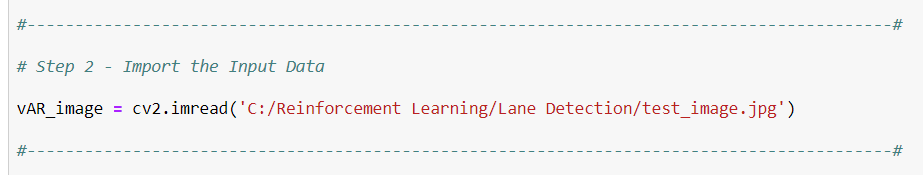

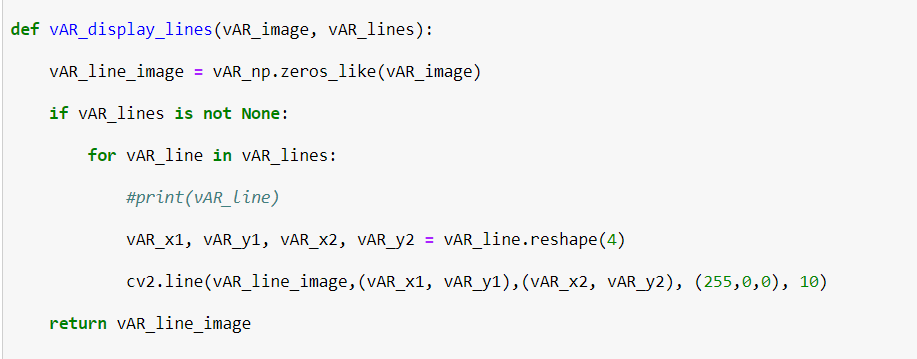

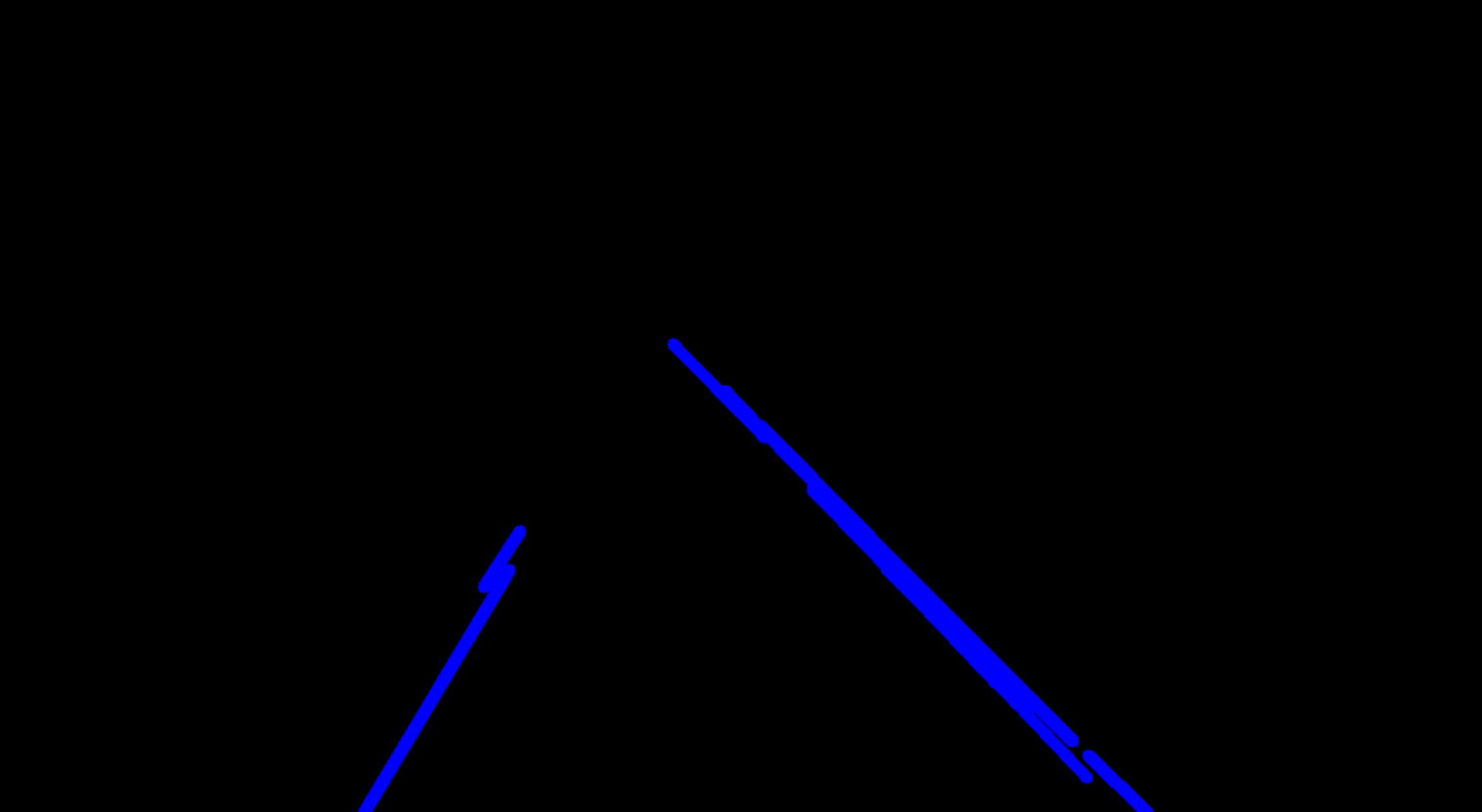

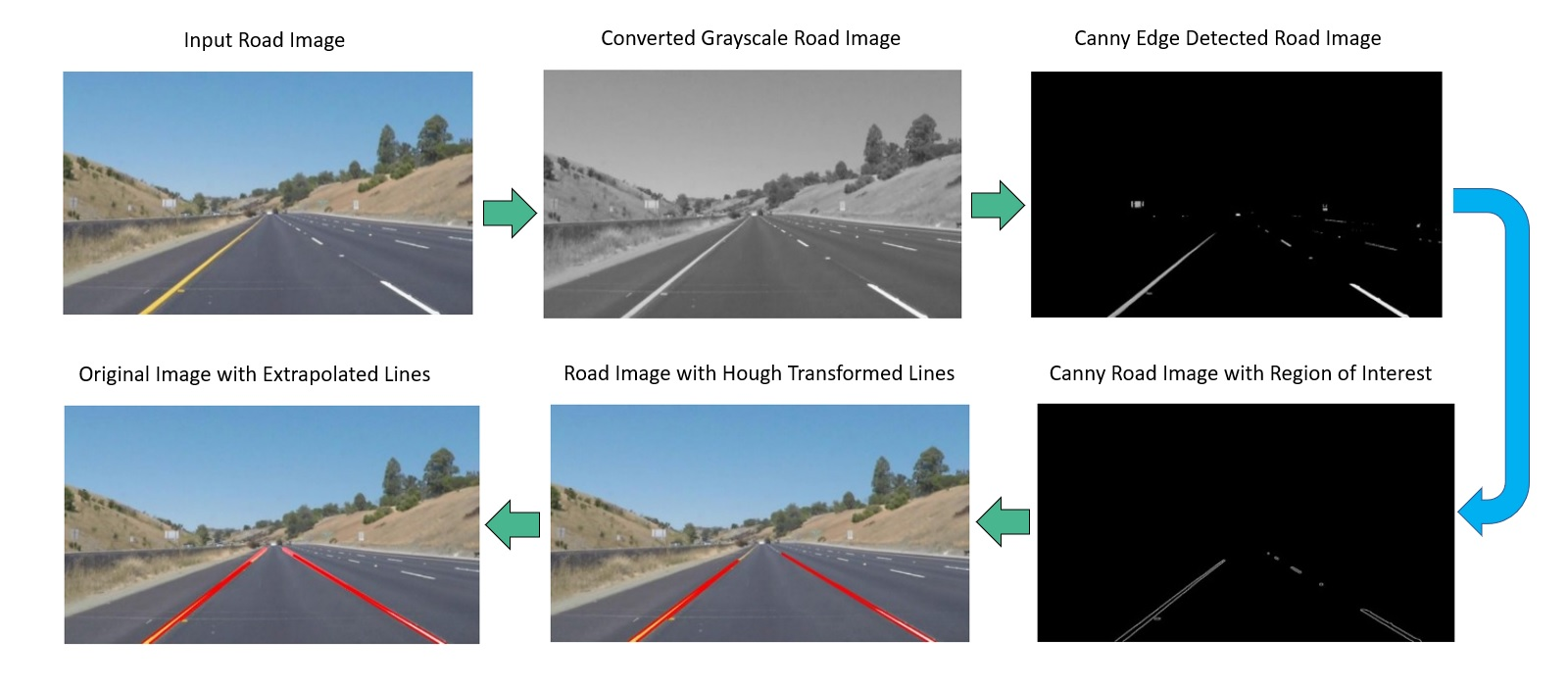

The input dataset is a set of images that undergo various computer vision techniques: color selection, region of interest selection, grayscaling, Gaussian smoothing, Canny Edge Detection and Hough Transform line detection. A pipeline detects the line segments in the image, then averages and extrapolates them and draws them onto the image for display.

This is the actual data that goes through these computer vision techniques to finally detect the lane lines.
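The averaging and extrapolation step of such a pipeline could be sketched as below. This is an assumption-laden illustration, not the lab's actual code: the function name `average_lane_lines`, the slope threshold of 0.3 and the `top_fraction` parameter are hypothetical. Segments are assumed to be `(x1, y1, x2, y2)` pixel tuples, as a Hough line detector would typically return, with `y` growing downward.

```python
import numpy as np

def average_lane_lines(segments, image_height, top_fraction=0.6):
    """Average line segments into one left and one right lane line.

    Returns a dict with keys 'left' and 'right'; each value is
    (x_bottom, y_bottom, x_top, y_top) or None if no segment matched.
    """
    fits = {"left": [], "right": []}
    for x1, y1, x2, y2 in segments:
        if x1 == x2:
            continue  # vertical segment: slope is undefined
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        if abs(slope) < 0.3:  # hypothetical threshold: drop near-horizontal noise
            continue
        # With y growing downward, the left lane line has a negative slope.
        side = "left" if slope < 0 else "right"
        fits[side].append((slope, intercept))

    y_bottom = image_height - 1
    y_top = int(round(image_height * top_fraction))
    lanes = {}
    for side, parameters in fits.items():
        if not parameters:
            lanes[side] = None
            continue
        slope, intercept = np.mean(parameters, axis=0)
        # Extrapolate the averaged line from the image bottom up to y_top.
        x_bottom = int(round((y_bottom - intercept) / slope))
        x_top = int(round((y_top - intercept) / slope))
        lanes[side] = (x_bottom, y_bottom, x_top, y_top)
    return lanes

# Illustrative segments for a 540-pixel-high image.
segments = [
    (100, 500, 200, 400),  # left lane fragment
    (120, 480, 180, 420),  # left lane fragment
    (800, 500, 700, 400),  # right lane fragment
]
lanes = average_lane_lines(segments, image_height=540)
print(lanes)
```

Averaging slope and intercept (rather than endpoints) gives one stable line per side even when the detector returns many short, broken segments for dashed markings.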

There are several reinforcement learning and data engineering libraries available. We are using the following libraries; they and their associated functions are readily available in Python for developing business applications.

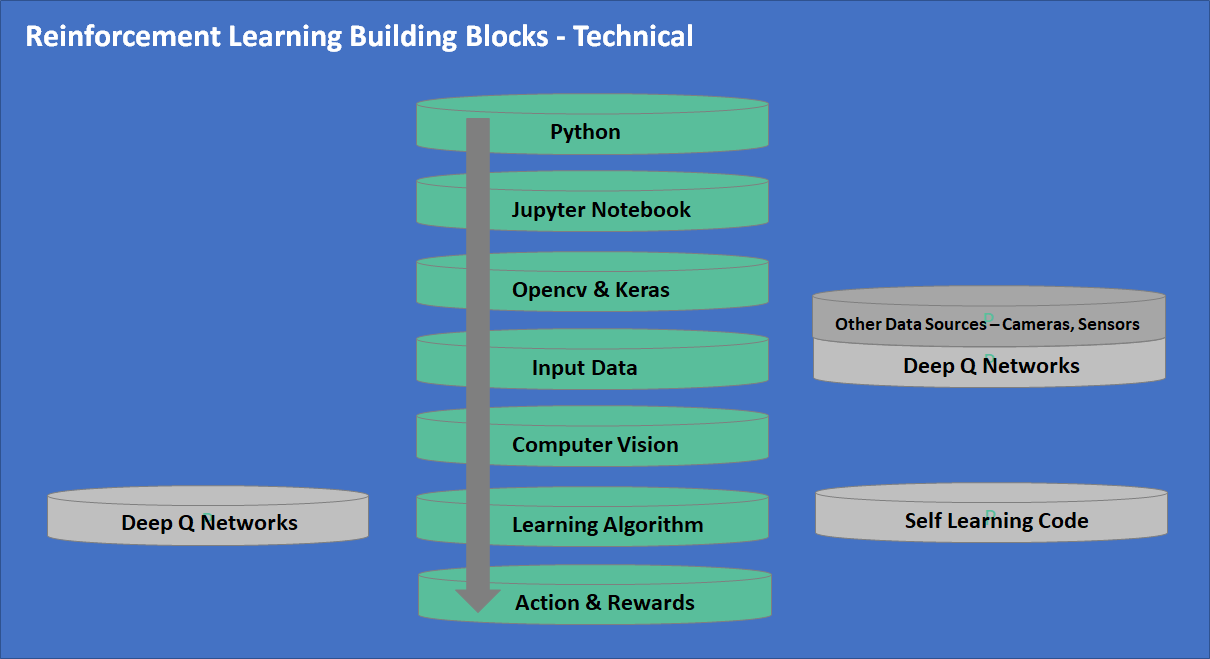

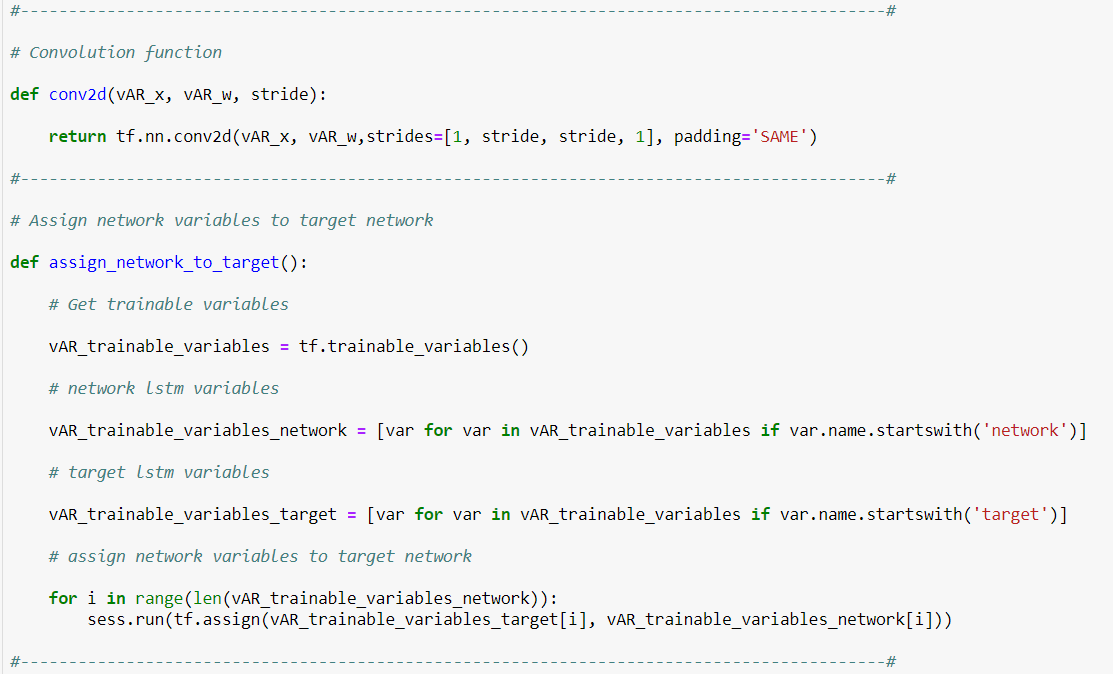

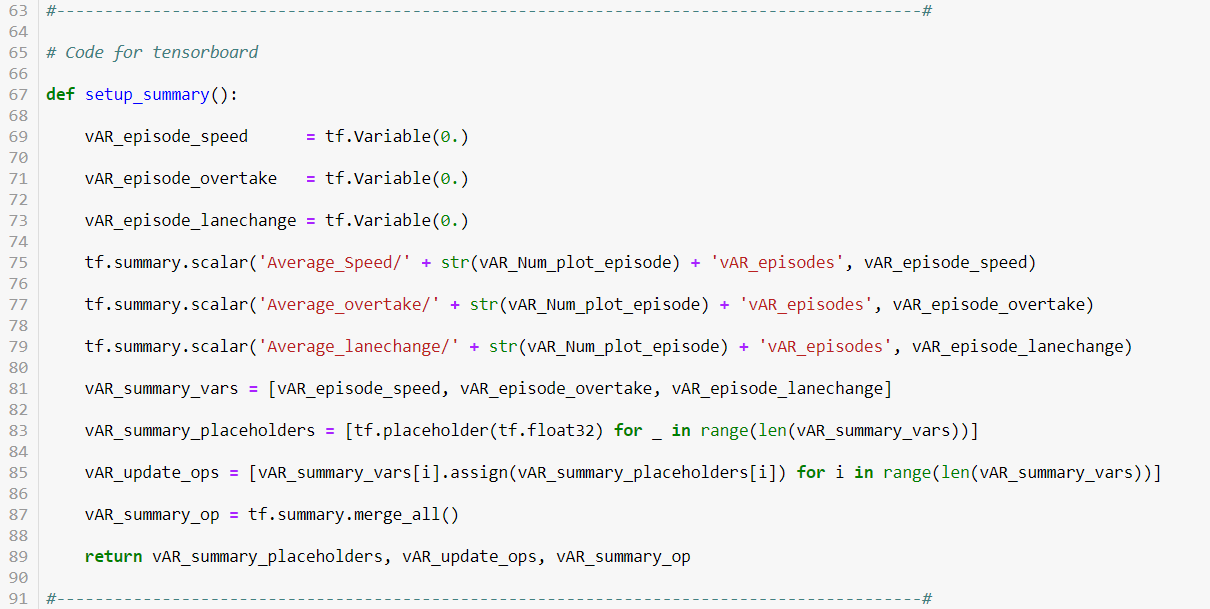

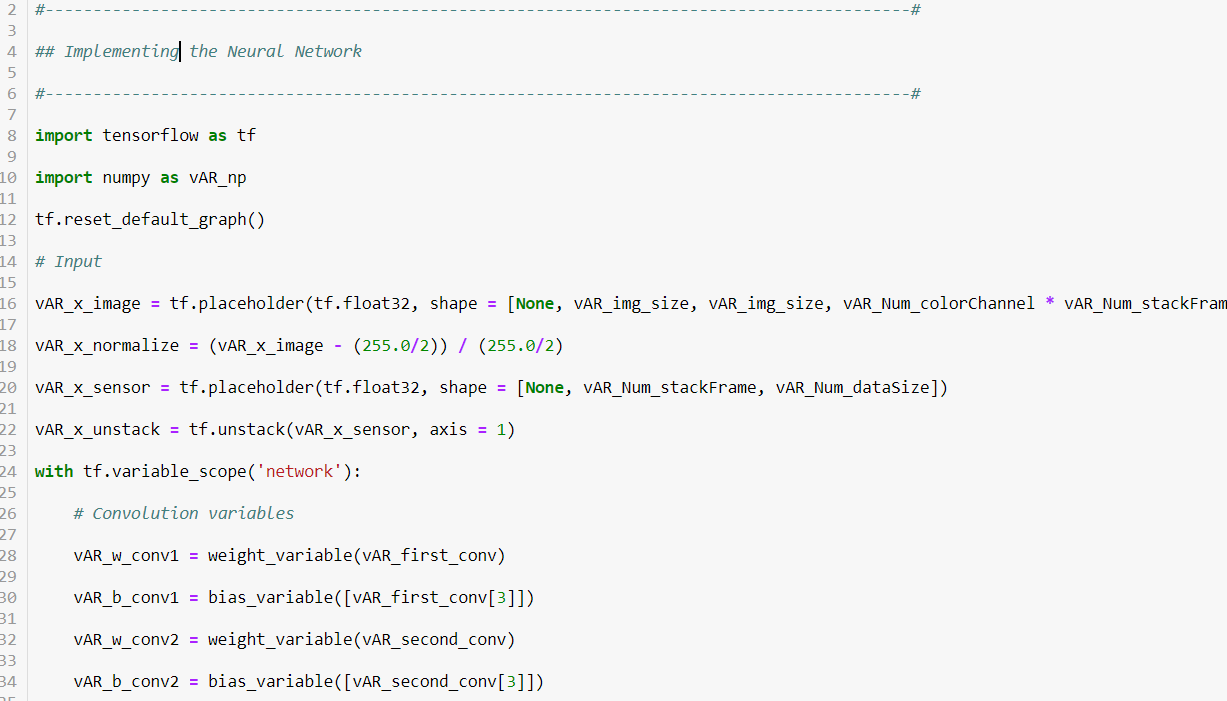

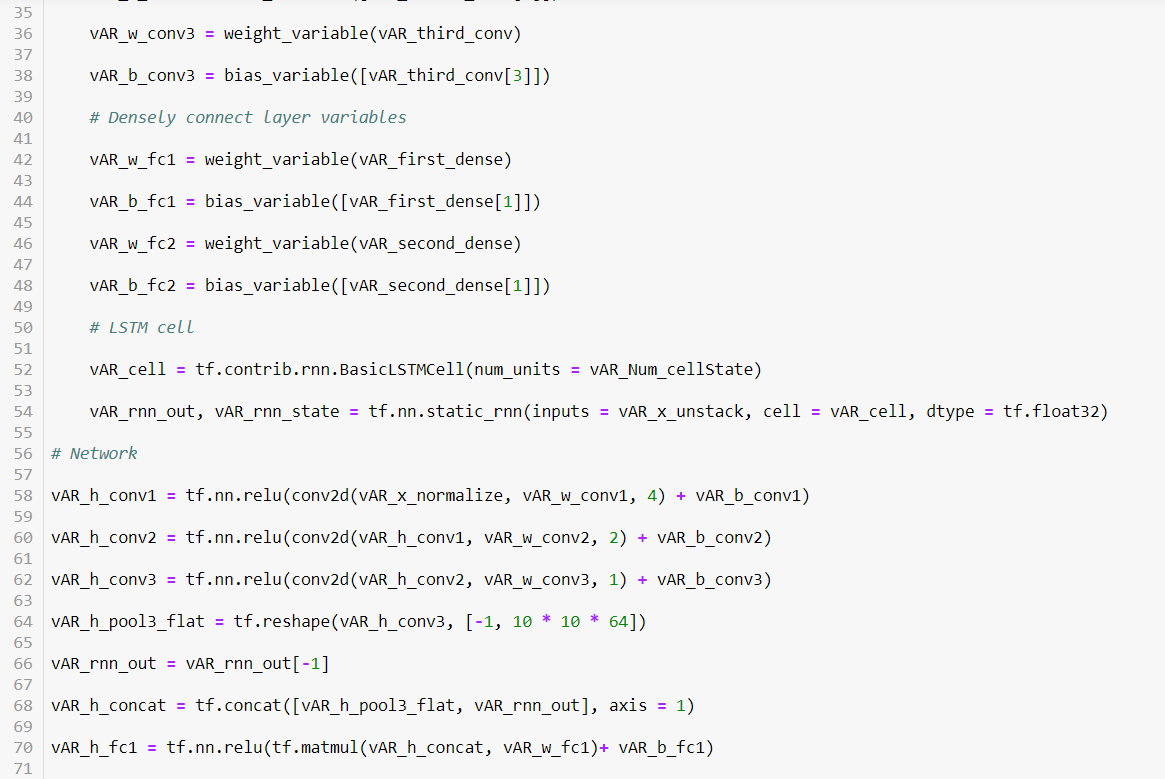

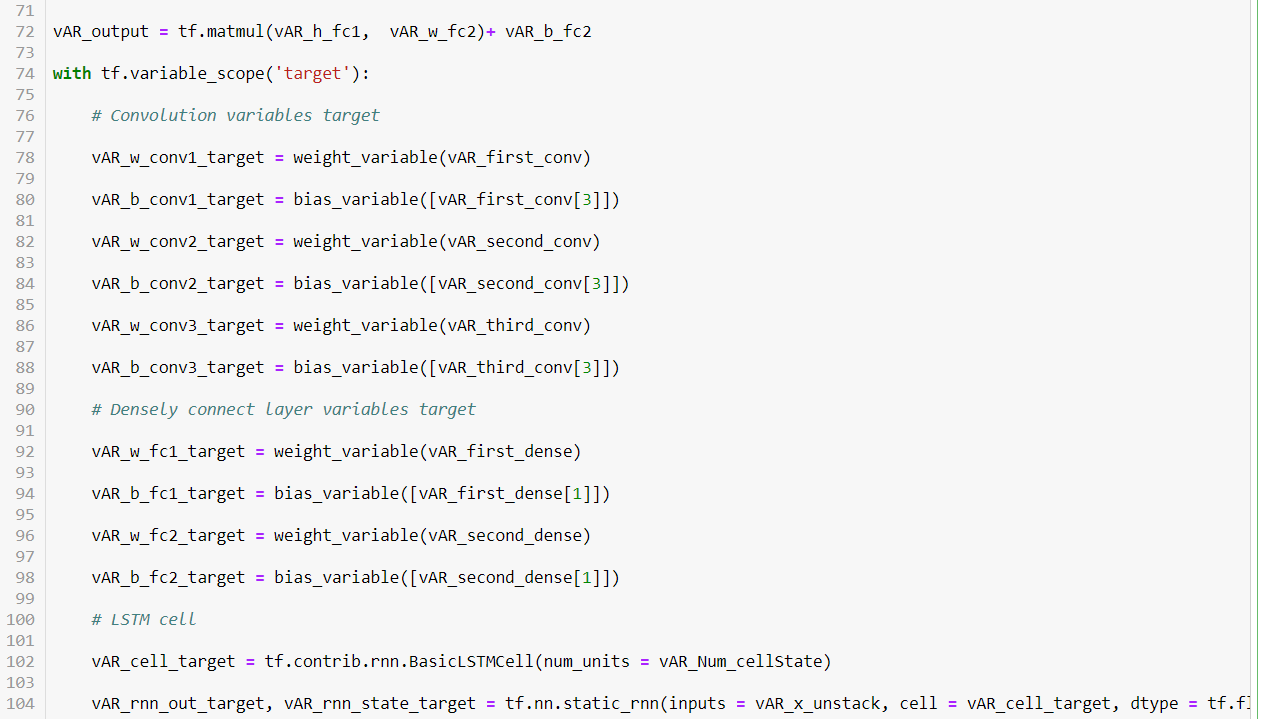

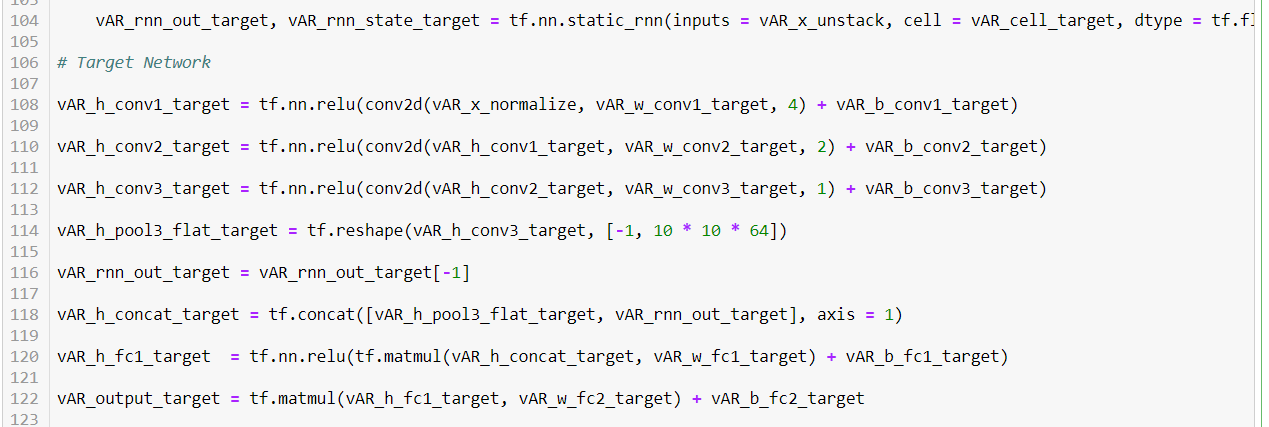

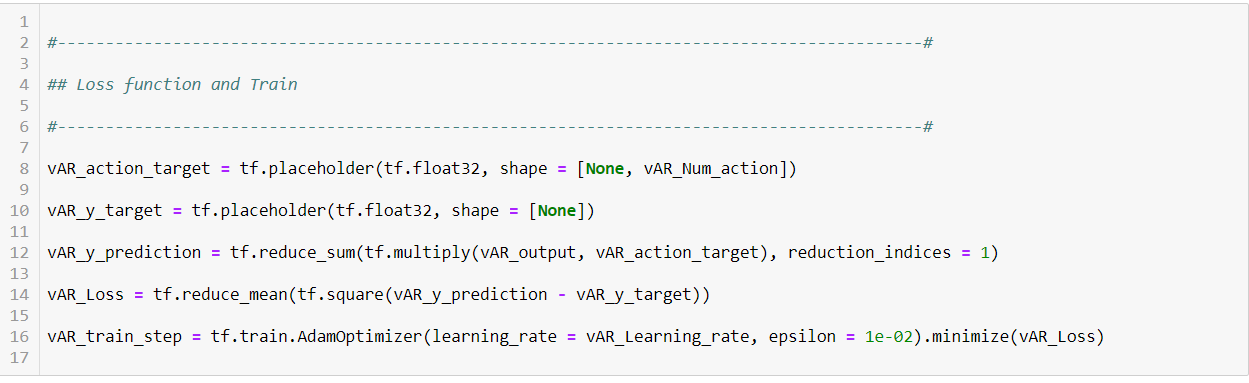

As explained, we are using the reinforcement learning model Deep Q-Networks (DQN).

There are several technical and functional components involved in implementing this model. Here are the key building blocks to implement the model.

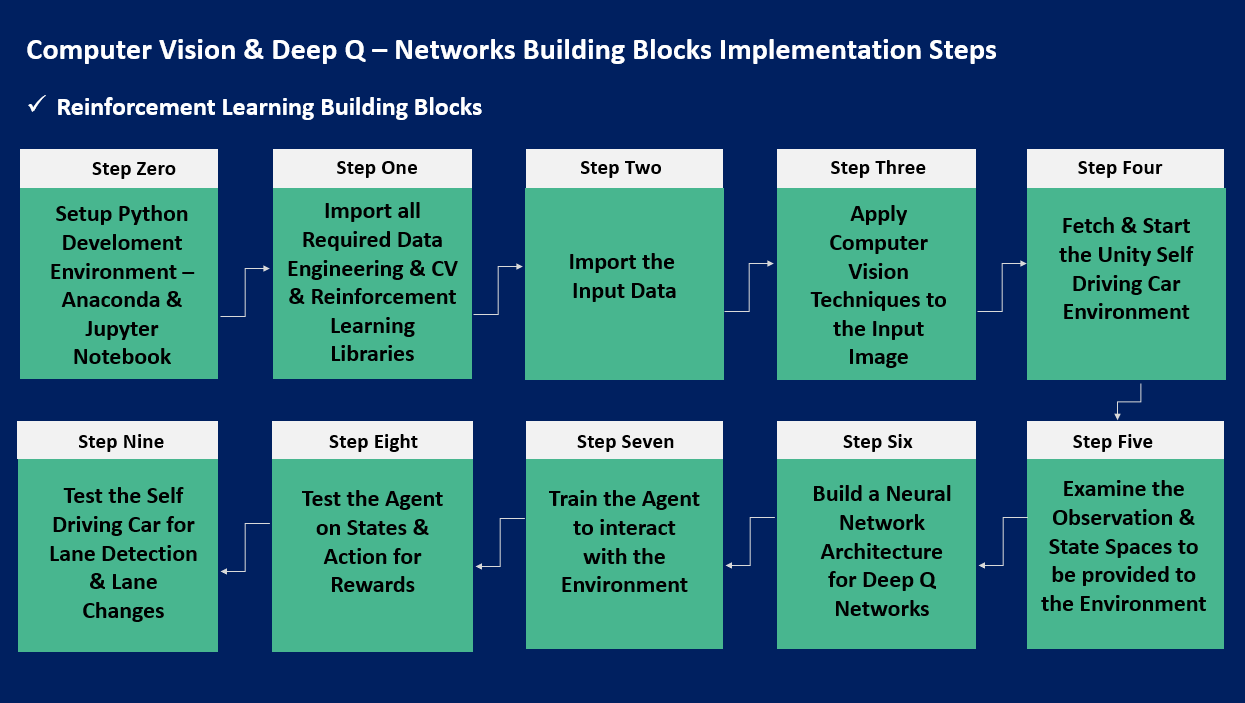

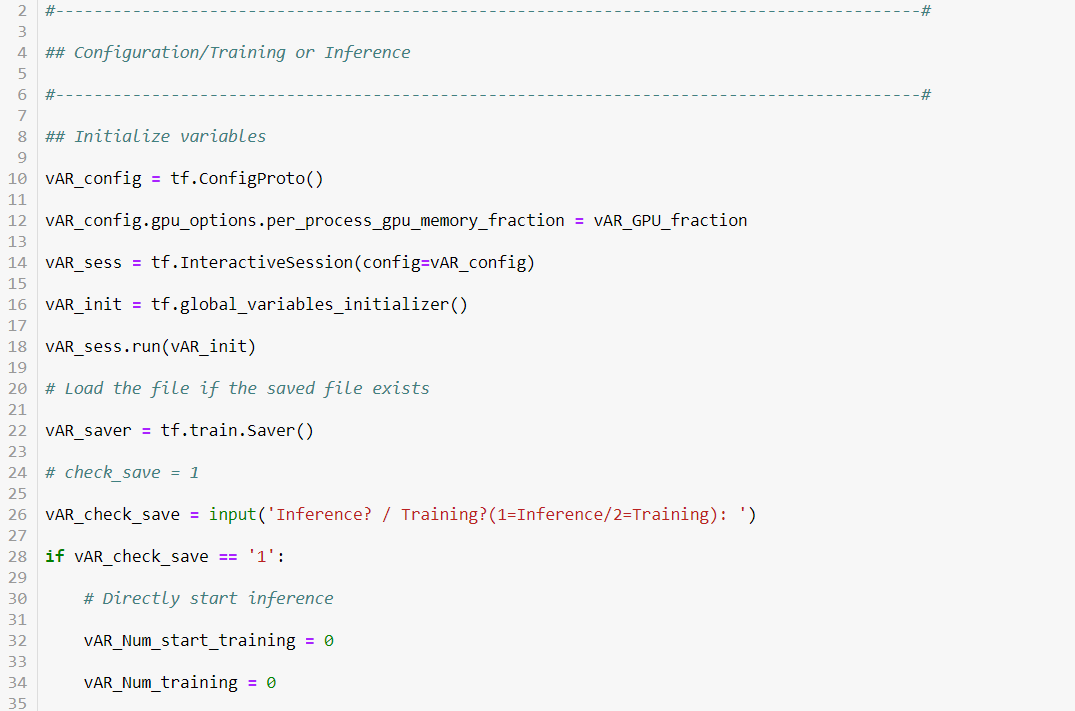

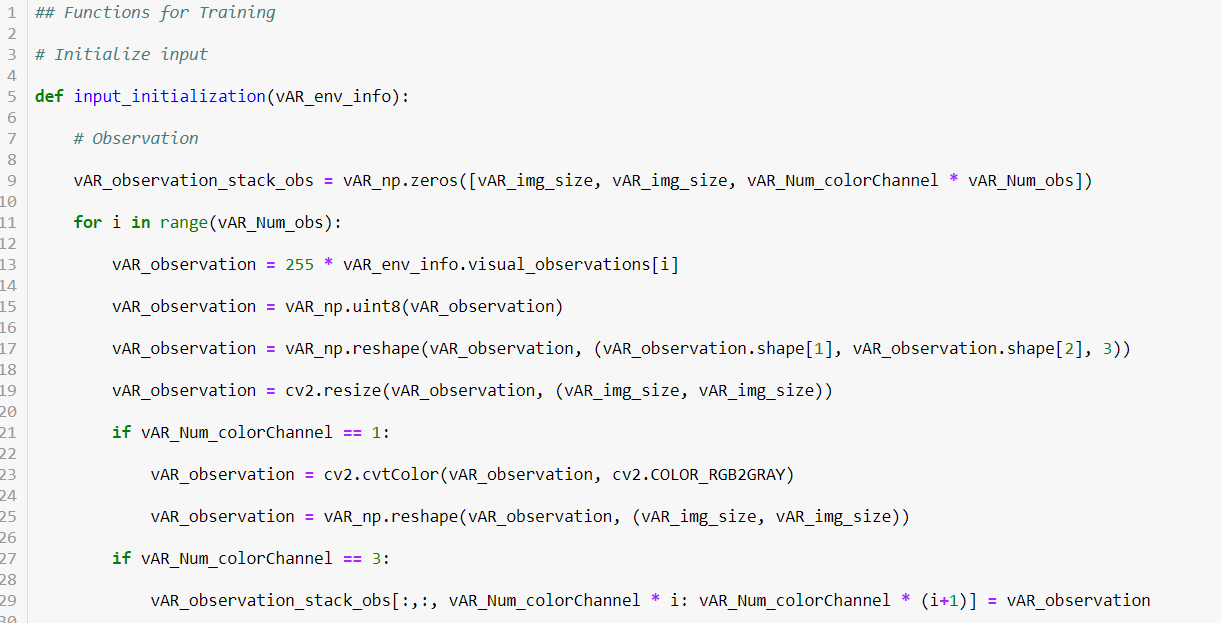

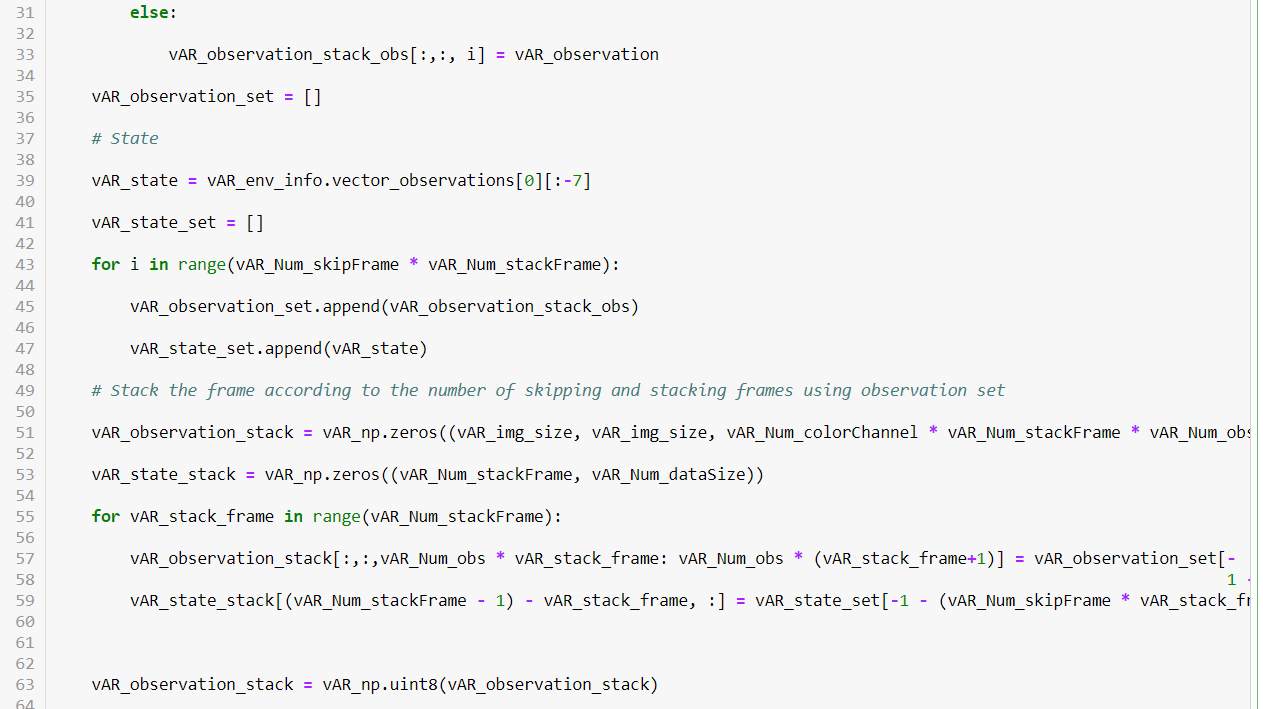

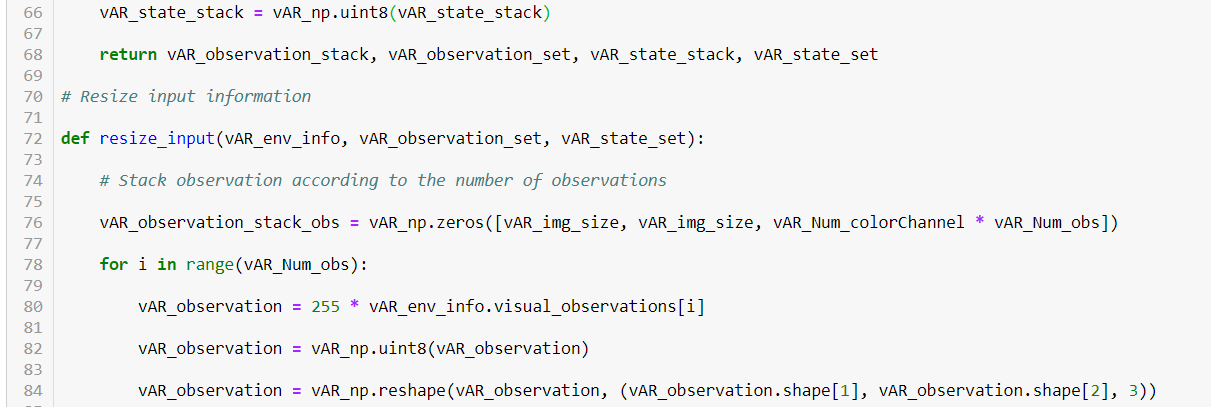

Implementing a model to address a given problem involves several steps. Here are the key steps involved. You can customize these steps as needed; we developed them for learning purposes only.

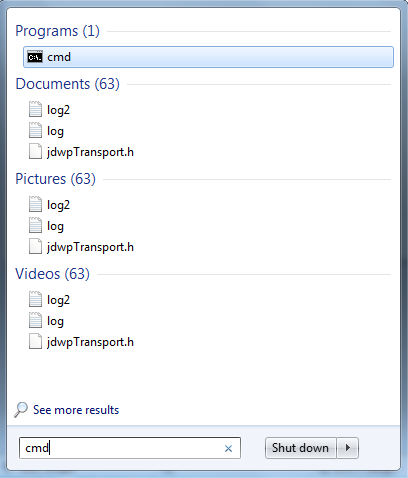

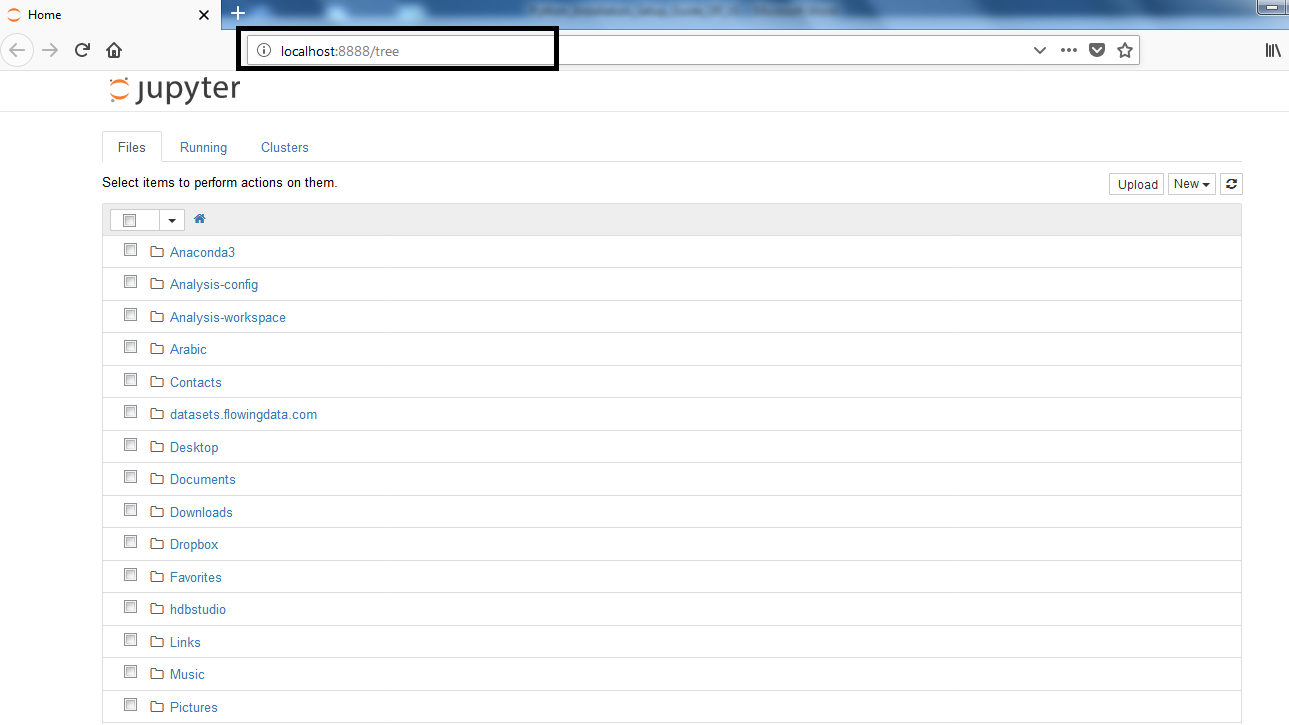

Jupyter Notebook is launched through the command prompt. Type cmd in the search box to open the Command Prompt terminal.

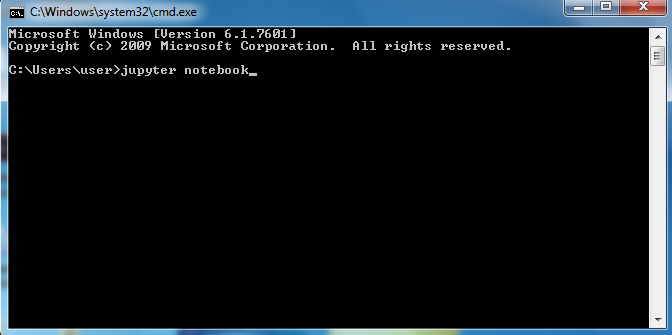

Now, type jupyter notebook & press Enter as shown

After typing, the page below opens

Open a New File or New Program in Jupyter Notebook

To Open a New File, follow the Below Instructions

Go to New >>> Python [conda root]

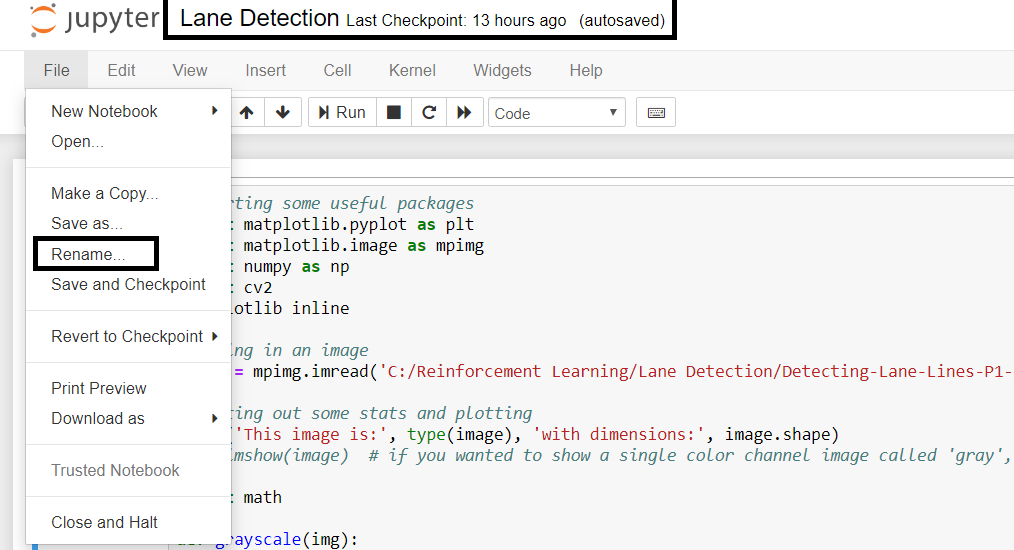

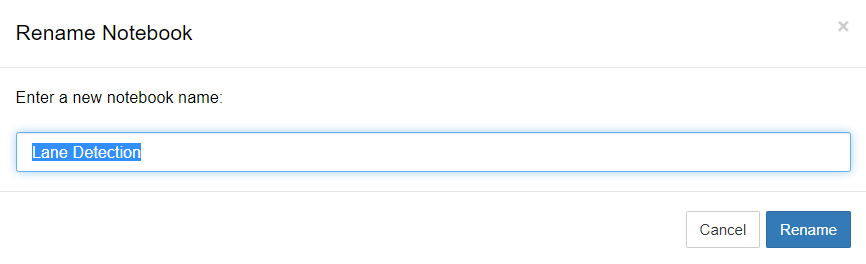

Give a meaningful name to the File as shown below.

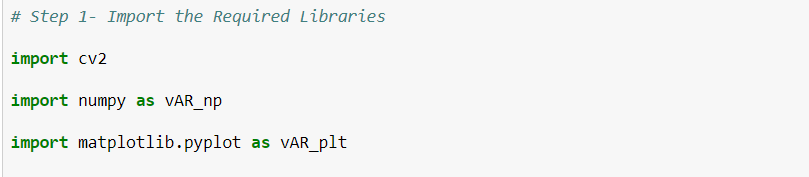

For our Model Implementation we need the Following Libraries:

OpenCV: OpenCV is the leading open source library for computer vision, image processing and machine learning, and features GPU acceleration for real-time operation. Written in optimized C/C++, the library can take advantage of multi-core processing.

Numpy : NumPy is a general-purpose array-processing package. It provides a high-performance multidimensional array object, and tools for working with these arrays. It is the fundamental package for scientific computing with Python. It contains various features including these important ones:

Matplotlib : Matplotlib is a visualization library in Python for 2D plots of arrays. It is a multi-platform data visualization library built on NumPy arrays and designed to work with the broader SciPy stack. One of the greatest benefits of visualization is that it gives us visual access to huge amounts of data in easily digestible visuals.

Deep Neural Networks : A deep neural network is a neural network with a certain level of complexity, a neural network with more than two layers. Deep neural networks use sophisticated mathematical modeling to process data in complex ways. A neural network, in general, is a technology built to simulate the activity of the human brain, specifically pattern recognition and the passage of input through various layers of simulated neural connections.

Deep neural networks are networks that have an input layer, an output layer and at least one hidden layer in between. Each layer performs specific types of sorting and ordering in a process that some refer to as “feature hierarchy.” One of the key uses of these sophisticated neural networks is dealing with unlabelled or unstructured data. The phrase “deep learning” is also used to describe these deep neural networks, as deep learning represents a specific form of machine learning where technologies using aspects of artificial intelligence seek to classify and order information in ways that go beyond simple input/output protocols.

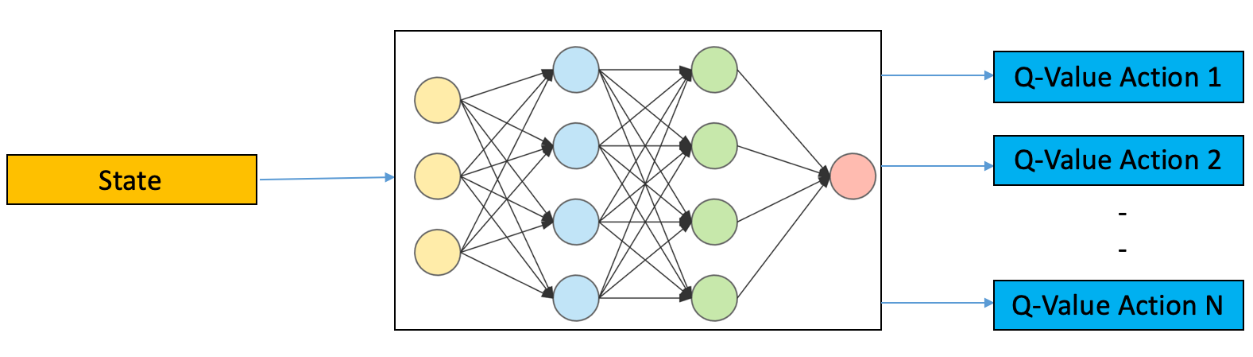

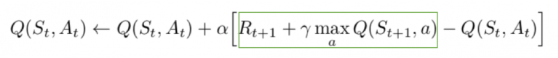

Deep Q-Networks : Deep Learning combined with Q-Learning yields Deep Q-Networks. In Deep Q-learning, we use a neural network to approximate the Q-value function. The state is given as the input and the Q-value of all possible actions is generated as the output.
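The idea of "state in, one Q-value per action out" can be sketched with a tiny NumPy network. This is a minimal illustration under stated assumptions, not the network used in the lab: the class name `TinyQNetwork`, the layer sizes, the random weights and the epsilon-greedy helper are all hypothetical, and no training is performed here.

```python
import numpy as np

class TinyQNetwork:
    """One-hidden-layer network mapping a state vector to one Q-value per action."""

    def __init__(self, state_size, action_size, hidden_size=16, seed=0):
        rng = np.random.default_rng(seed)
        # Small random weights; a real agent would learn these during training.
        self.w1 = rng.normal(scale=0.1, size=(state_size, hidden_size))
        self.b1 = np.zeros(hidden_size)
        self.w2 = rng.normal(scale=0.1, size=(hidden_size, action_size))
        self.b2 = np.zeros(action_size)

    def q_values(self, state):
        hidden = np.maximum(0.0, state @ self.w1 + self.b1)  # ReLU activation
        return hidden @ self.w2 + self.b2  # one value per possible action

def epsilon_greedy(q_values, epsilon, rng):
    """Pick a random action with probability epsilon, else the best-valued one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

network = TinyQNetwork(state_size=4, action_size=3)
state = np.array([0.1, -0.2, 0.5, 0.0])  # illustrative state vector
q = network.q_values(state)
action = epsilon_greedy(q, epsilon=0.0, rng=np.random.default_rng(1))
print(q, action)
```

With `epsilon=0.0` the agent always exploits, so the chosen action is simply the index of the largest Q-value; a larger epsilon would mix in random exploration.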

It follows the below steps:

The input data is the road image from a camera fixed in the self-driving car. Several cameras fixed inside the car capture images & videos, which act as input to the computer vision libraries used in our implementation:

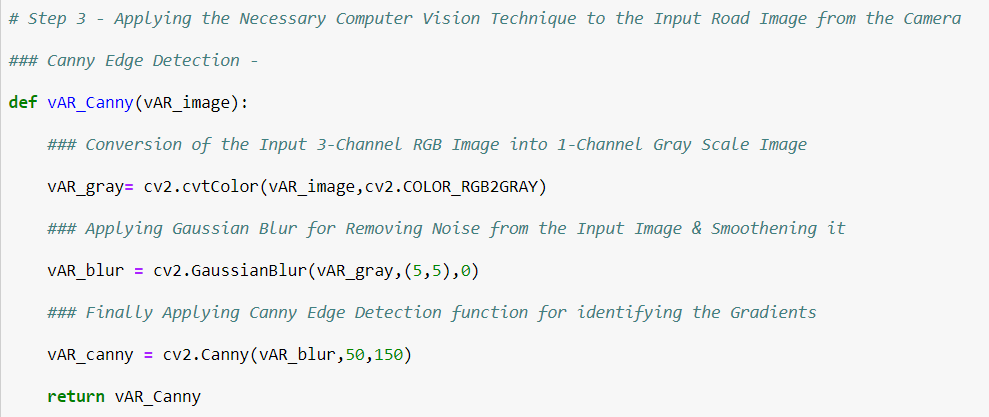

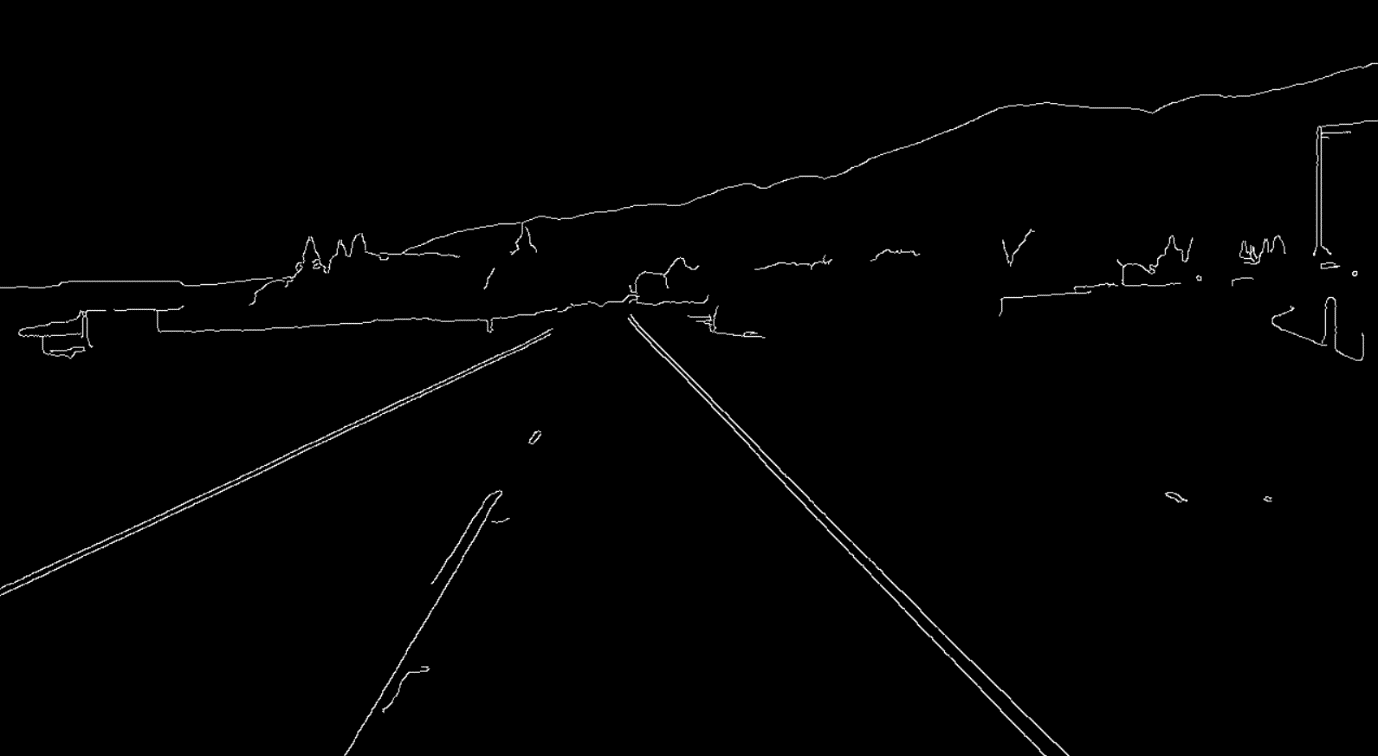

Canny Edge Detection: Canny Edge Detection uses a multi-stage algorithm to detect a wide range of edges in images. Edge detection is an essential image analysis technique when someone is interested in recognizing objects by their outlines, and is also considered an essential step in recovering information from images. For instance, important features like lines and curves can be extracted using edge detection, which are then normally used by higher-level computer vision or image processing algorithms. A good edge detection algorithm would highlight the locations of major edges in an image, while at the same time ignoring any false edges caused by noise.

Canny Edge Detection involves a series of steps

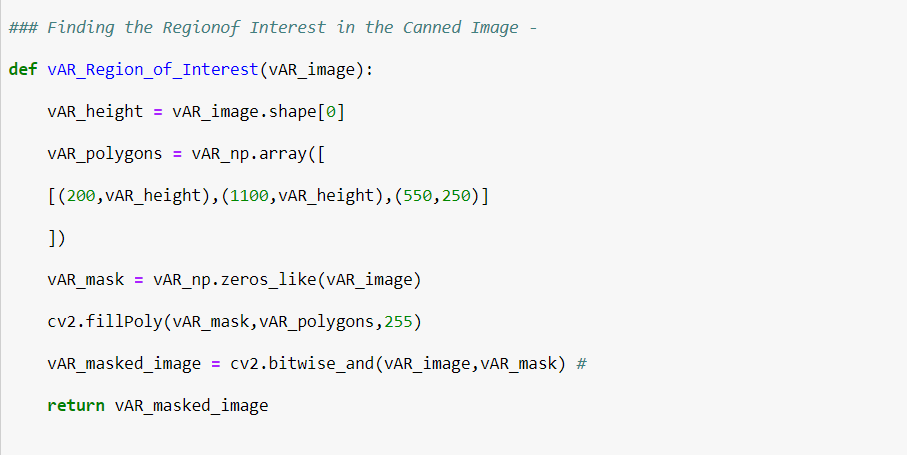

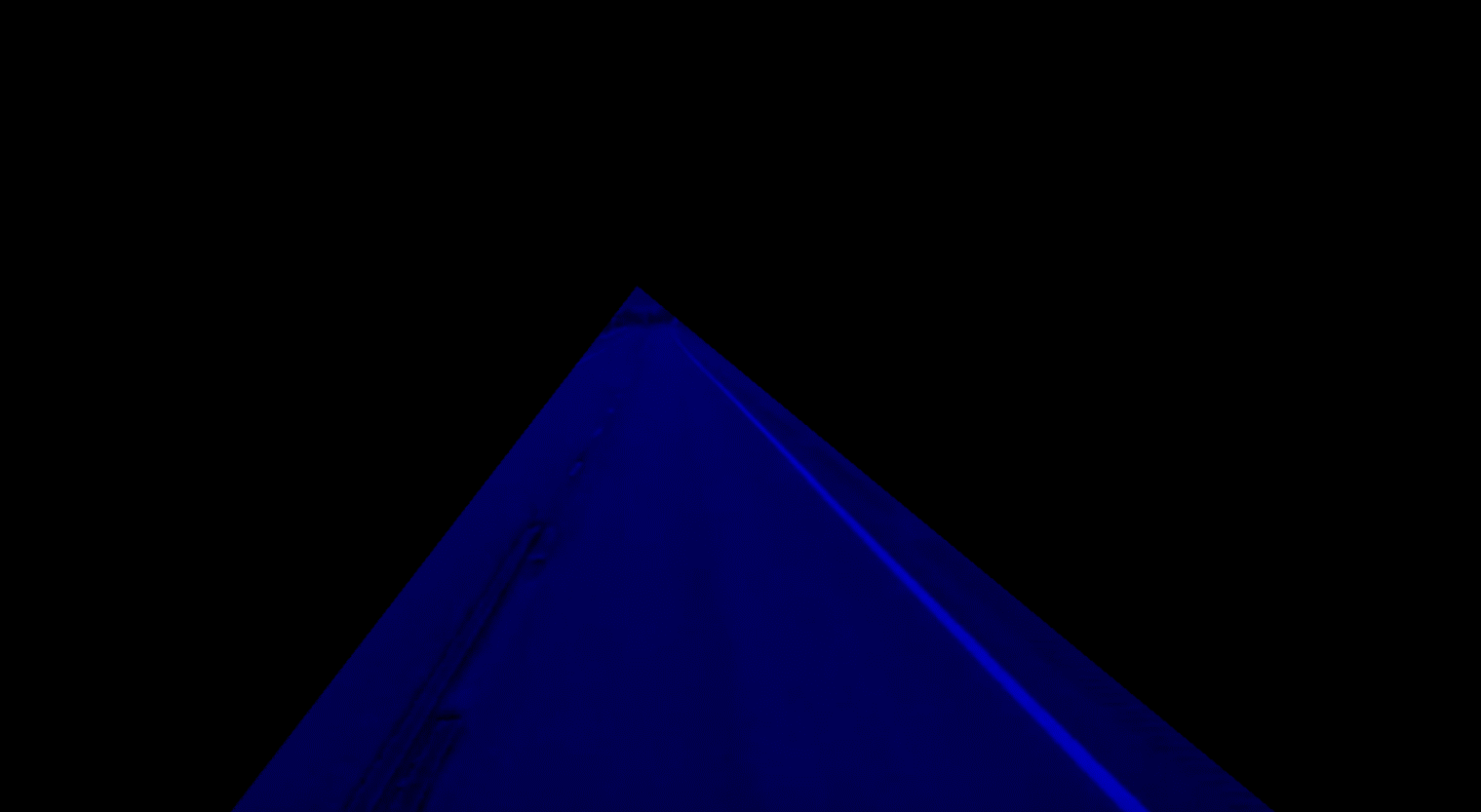
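Two of the stages of Canny edge detection, the intensity-gradient computation and the double threshold, can be sketched in plain NumPy as below. This is a deliberately simplified illustration and not the full algorithm: Gaussian smoothing, non-maximum suppression and hysteresis edge tracking are left out, and the helper names and threshold values are hypothetical. In practice, OpenCV's `cv2.Canny` performs the complete procedure.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter3x3(image, kernel):
    """Correlate a 2-D image with a 3x3 kernel, replicating border pixels."""
    padded = np.pad(image.astype(float), 1, mode="edge")
    height, width = image.shape
    result = np.zeros((height, width))
    for dy in range(3):
        for dx in range(3):
            result += kernel[dy, dx] * padded[dy:dy + height, dx:dx + width]
    return result

def gradient_and_threshold(gray, low, high):
    """Return gradient magnitude plus 'strong' and 'weak' edge masks."""
    gx = filter3x3(gray, SOBEL_X)  # horizontal intensity change
    gy = filter3x3(gray, SOBEL_Y)  # vertical intensity change
    magnitude = np.hypot(gx, gy)
    strong = magnitude >= high                 # definitely an edge
    weak = (magnitude >= low) & ~strong        # edge candidate only
    return magnitude, strong, weak

# Synthetic image: dark left half, bright right half (a vertical edge).
gray = np.zeros((6, 8))
gray[:, 4:] = 255.0
magnitude, strong, weak = gradient_and_threshold(gray, low=50, high=150)
print(strong.astype(int))
```

The strong-edge mask lights up only in the two columns on either side of the brightness step, which is the behaviour the full detector then thins down to a one-pixel-wide edge.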

Region of Interest: The region of interest restricts processing to only the area that matters for lane detection. With that in view, a polygon is drawn with OpenCV's fillPoly function.
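The masking idea can be sketched without OpenCV as follows. Note that this is a NumPy-only stand-in for the `fillPoly`-based mask described above, restricted to convex polygons. The function names and the triangle vertices are hypothetical and chosen only for illustration.

```python
import numpy as np

def convex_polygon_mask(shape, vertices):
    """Boolean mask that is True inside (or on the border of) a convex polygon.

    shape: (height, width); vertices: list of (x, y) points given in a
    consistent winding order.
    """
    height, width = shape
    ys, xs = np.mgrid[0:height, 0:width]
    crosses = []
    count = len(vertices)
    for i in range(count):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % count]
        # Sign of the cross product tells on which side of the edge a pixel lies.
        crosses.append((x2 - x1) * (ys - y1) - (y2 - y1) * (xs - x1))
    crosses = np.stack(crosses)
    # Inside a convex polygon, every edge test has the same sign.
    return np.all(crosses >= 0, axis=0) | np.all(crosses <= 0, axis=0)

def apply_region_of_interest(image, vertices):
    """Keep pixels inside the polygon and set everything else to zero."""
    mask = convex_polygon_mask(image.shape[:2], vertices)
    masked = np.zeros_like(image)
    masked[mask] = image[mask]
    return masked

# Illustrative 10x10 "edge image" and a triangle covering the road area.
edges = np.full((10, 10), 255, dtype=np.uint8)
triangle = [(0, 9), (9, 9), (4, 0)]  # bottom-left, bottom-right, apex
masked = apply_region_of_interest(edges, triangle)
print(masked)
```

Pixels near the top corners of the image, where sky and roadside clutter usually appear, are zeroed out, so later line detection only sees the road area.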

Hough Transform: The Hough transform is a feature extraction technique used in image analysis and computer vision. It was originally concerned with identifying lines in an image and was later extended to identifying positions of arbitrary shapes, most commonly circles or ellipses. In our implementation it is used for feature extraction on the masked image: applied to the region of interest, it finds the straight line segments that run along the lane markings.
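How the line-detecting form of the transform works can be sketched with a small voting accumulator. Each edge pixel votes for every line `rho = x*cos(theta) + y*sin(theta)` that passes through it, and the cell with the most votes is the dominant line. This is an illustrative sketch with hypothetical function names; it is not the probabilistic variant that OpenCV offers and not necessarily what the lab code uses.

```python
import numpy as np

def hough_line_accumulator(edge_mask, theta_step_degrees=1.0):
    """Vote in (rho, theta) space for every True pixel of a 2-D boolean mask."""
    height, width = edge_mask.shape
    thetas = np.deg2rad(np.arange(0.0, 180.0, theta_step_degrees))
    max_rho = int(np.ceil(np.hypot(height, width)))
    rhos = np.arange(-max_rho, max_rho + 1)
    accumulator = np.zeros((len(rhos), len(thetas)), dtype=np.int64)
    theta_indices = np.arange(len(thetas))
    ys, xs = np.nonzero(edge_mask)
    for x, y in zip(xs, ys):
        # One rho per theta: all lines through this pixel.
        rho_values = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        accumulator[rho_values + max_rho, theta_indices] += 1
    return accumulator, rhos, thetas

def strongest_line(accumulator, rhos, thetas):
    """Return (rho, theta in degrees) of the accumulator cell with most votes."""
    rho_index, theta_index = np.unravel_index(
        np.argmax(accumulator), accumulator.shape
    )
    return int(rhos[rho_index]), float(np.rad2deg(thetas[theta_index]))

# Synthetic edge image containing one horizontal line at y = 5.
edges = np.zeros((40, 100), dtype=bool)
edges[5, :] = True
accumulator, rhos, thetas = hough_line_accumulator(edges)
rho, theta_degrees = strongest_line(accumulator, rhos, thetas)
print(rho, theta_degrees)
```

For the horizontal test line, all 100 pixels agree on the cell with `rho = 5` and `theta = 90` degrees, which is the normal form of the line `y = 5`.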

Original Road Image with lane Detection

Downloading the Environment: The environment is typically a set of states that the "agent" attempts to influence via its choice of "actions". The agent arrives at different scenarios, known as states, by performing actions. Actions lead to rewards, which can be positive or negative.

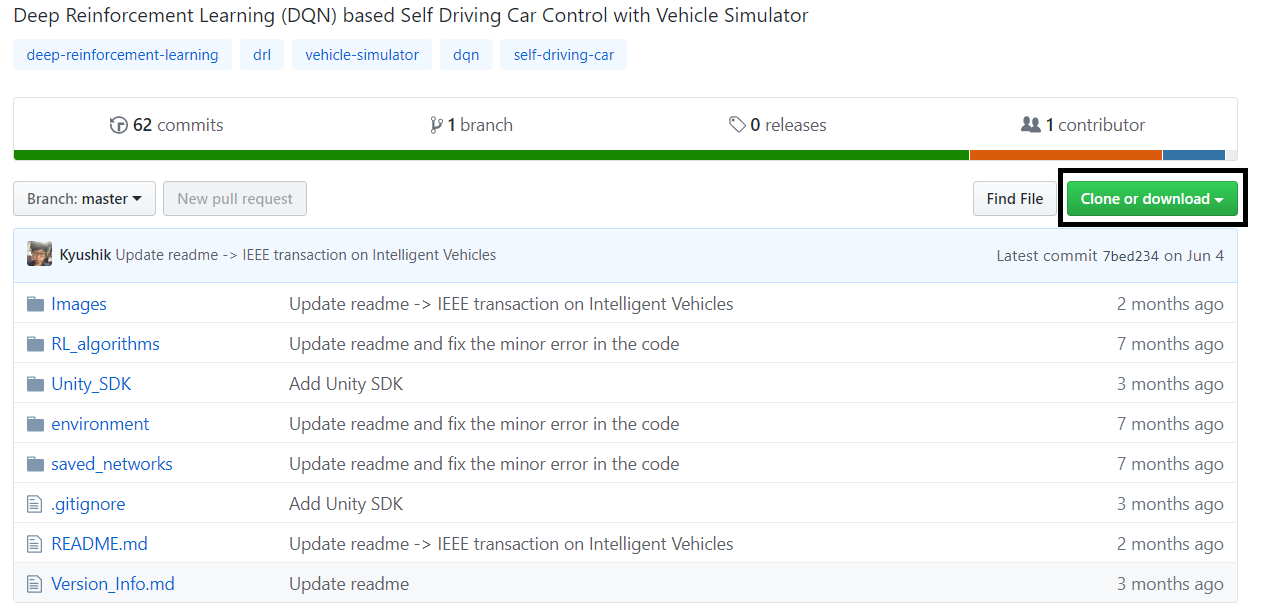
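The agent, state, action and reward loop can be made concrete with a toy example. The class below is a hypothetical one-dimensional lane-keeping task written only for illustration; it is not the Unity environment used in this lab, and its states, actions and reward values are assumptions.

```python
import random

class ToyLaneEnvironment:
    """Hypothetical lane-keeping task.

    State: lateral offset from the lane center (integer, 0 means centered).
    Actions: 0 = steer left, 1 = keep straight, 2 = steer right.
    """

    ACTIONS = (-1, 0, 1)

    def __init__(self, max_steps=20, seed=0):
        self.max_steps = max_steps
        self.rng = random.Random(seed)
        self.offset = 0
        self.steps = 0

    def reset(self):
        """Start a new episode slightly off-center and return the first state."""
        self.offset = self.rng.choice([-2, -1, 1, 2])
        self.steps = 0
        return self.offset

    def step(self, action):
        """Apply an action and return (next_state, reward, done)."""
        self.offset += self.ACTIONS[action]
        self.steps += 1
        off_road = abs(self.offset) > 3
        # Positive reward when centered, negative when far from the center.
        reward = -10.0 if off_road else 1.0 - abs(self.offset)
        done = off_road or self.steps >= self.max_steps
        return self.offset, reward, done

def steer_toward_center(offset):
    """A hand-written policy standing in for a trained agent."""
    if offset > 0:
        return 0  # too far right: steer left
    if offset < 0:
        return 2  # too far left: steer right
    return 1      # centered: keep straight

env = ToyLaneEnvironment()
state = env.reset()
total_reward, done = 0.0, False
while not done:
    state, reward, done = env.step(steer_toward_center(state))
    total_reward += reward
print(state, total_reward)
```

A learning agent would replace `steer_toward_center` with a policy that it improves from the rewards it collects.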

The environment used in this implementation is a Unity self-driving car environment that can be downloaded from the link below: https://www.dropbox.com/s/7xti37jv3d28u1z/environment_windows.zip?dl=0

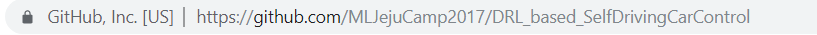

After the environment is downloaded, clone the self-driving car repository from the link below. https://github.com/MLJejuCamp2017/DRL_based_SelfDrivingCarControl

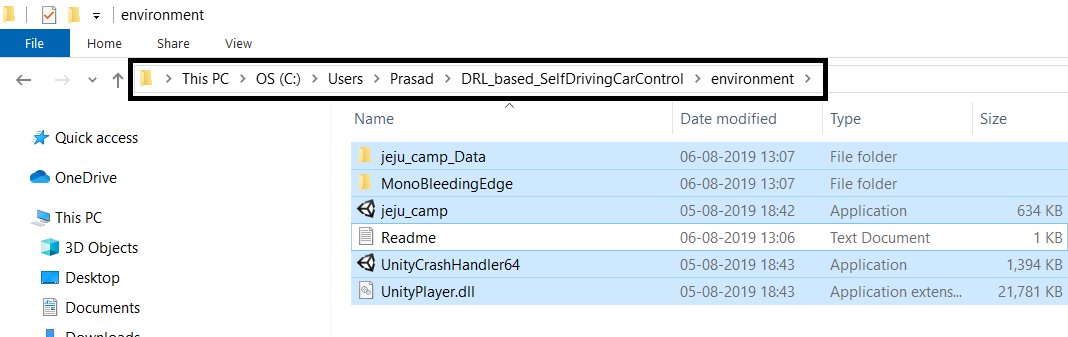

After you clone or download the self-driving car repo, first unzip the folder downloaded from Dropbox, then copy all the files from its environment folder into the environment folder of the cloned repository as shown:

In our implementation we will be using the Unity ML-Agents Toolkit. The Unity Machine Learning Agents Toolkit (ML-Agents) is an open-source Unity plugin that enables games and simulations to serve as environments for training intelligent agents. Agents can be trained using reinforcement learning, imitation learning, neuroevolution, or other machine learning methods through a simple-to-use Python API. The ML-Agents toolkit is mutually beneficial for both game developers and AI researchers as it provides a central platform where advances in AI can be evaluated on Unity’s rich environments and then made accessible to the wider research and game developer communities.

Following are the features of ML-agents toolkit

The ML-Agents toolkit can be downloaded & installed in two ways:

To install and use ML-Agents, first you need to install Unity, clone this repository and install Python with additional dependencies

https://store.unity.com/download

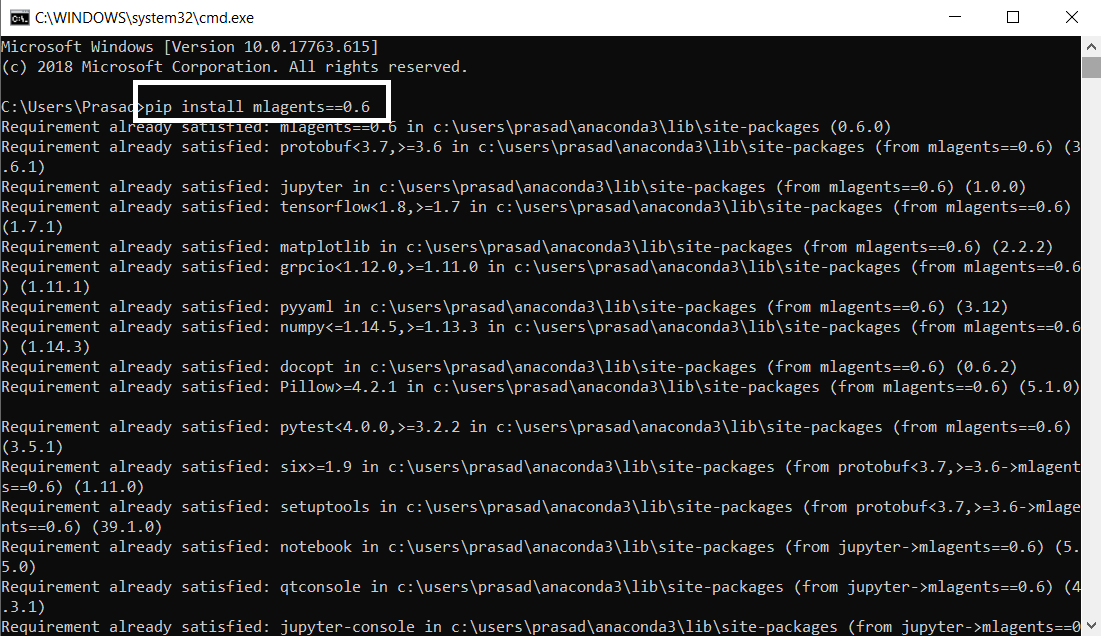

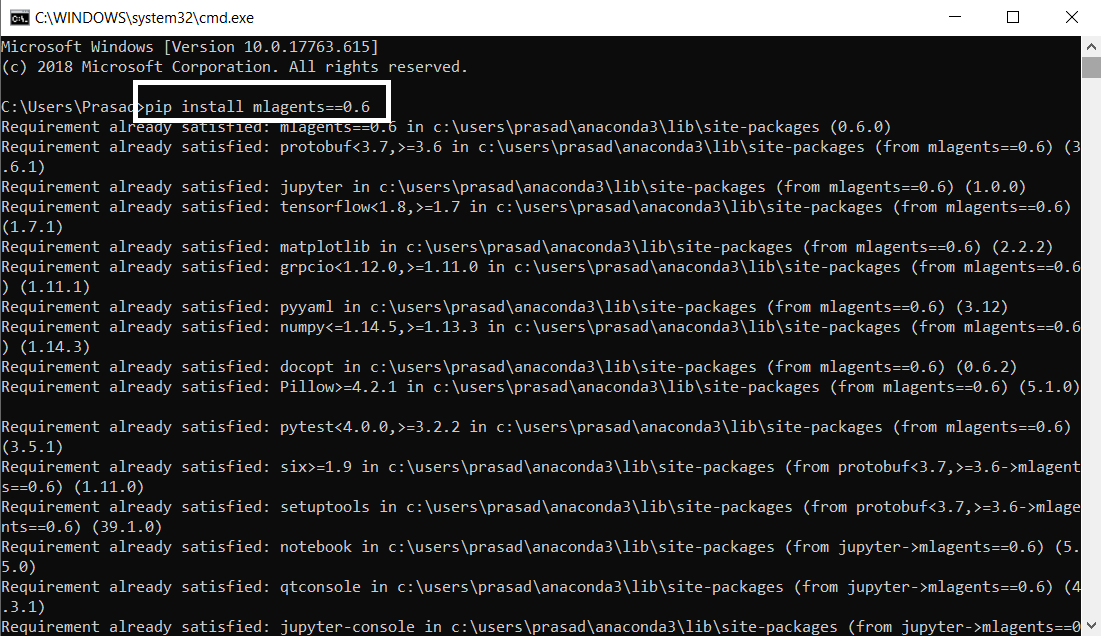

Downloading & Installing from the PyPI Python Repository: The Python Package Index (PyPI) is a repository of software for the Python programming language. PyPI helps you find and install software developed and shared by the Python community. Use PyPI to install ML-Agents as shown below

Open Windows Command Prompt as shown

Once the Command Prompt window launches, type pip install mlagents==0.6

Note that pip install mlagents==0.6 installs ml-agents from PyPI, not from the cloned repo. If it installed correctly, you should be able to run mlagents-learn --help, after which you will see the Unity logo and the command line parameters you can use with mlagents-learn.
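As an additional check from inside Python, the installed version can be queried with the standard library. This is a small sketch assuming Python 3.8 or newer (for `importlib.metadata`); the helper name `installed_version` is hypothetical.

```python
from importlib import metadata

def installed_version(package_name):
    """Return the installed version string of a package, or None if absent."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None

version = installed_version("mlagents")
if version is None:
    print("mlagents is not installed in this Python environment")
else:
    print(f"mlagents {version} is installed")
```

Running this in the same environment that Jupyter uses helps confirm that the notebook will see the same package that pip installed.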

By installing the mlagents package, the dependencies listed in the setup.py file are also installed. Some of the primary dependencies include:

To clone the ml-agents repository visit the below URL

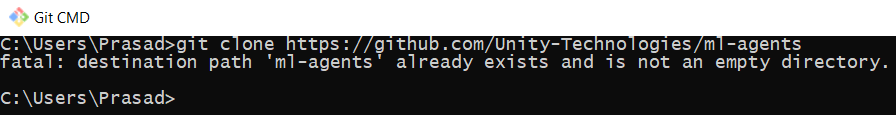

If Git is already installed, clone the repository using git clone as shown. If Git is not installed, download & install Git from the link below

If you intend to make modifications to ml-agents or ml-agents-envs, you should install the packages from the cloned repo rather than from PyPI. To do this, you will need to install ml-agents and ml-agents-envs separately. Open Windows Command Prompt and navigate to the cloned repo's root directory as shown

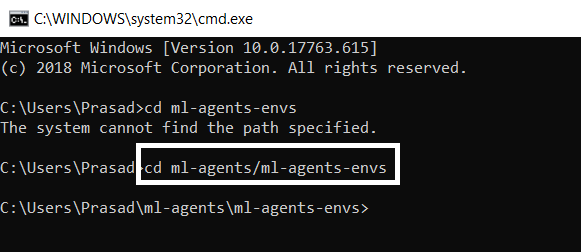

Open Windows Command Prompt as shown

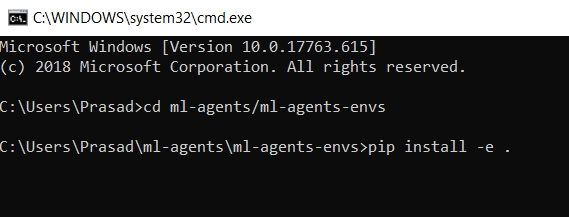

Navigate to the cloned repository root directory & then type cd ml-agents/ml-agents-envs

Type pip install -e .

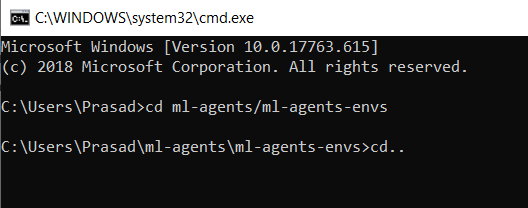

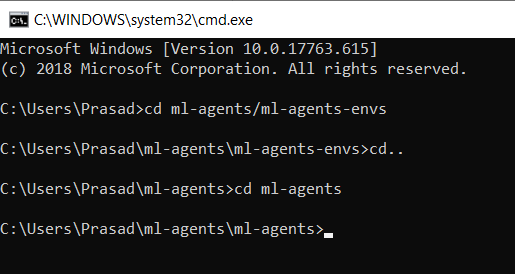

After the Installation completes, type cd.. to navigate back to ml-agents folder

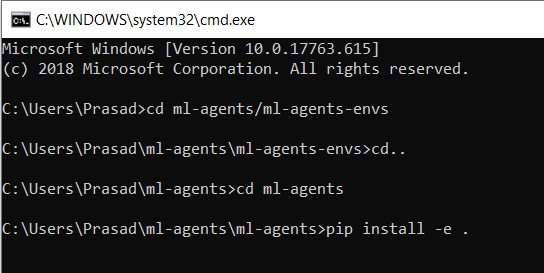

Type cd ml-agents to enter the ml-agents package folder, so that the second package can be installed

Type pip install -e .

Now that you have the environment set up on both the Unity and Python sides, start the environment so that you can interact with the Unity environment through Python as shown

Environments contain brains which are responsible for deciding the actions of their associated agents. Here we check for the first brain available, and set it as the default brain we will be controlling from Python.
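The "first available brain" selection can be sketched as below. This assumes, as in older ML-Agents Python API releases, that the environment object exposes a `brain_names` list and a `brains` dictionary; treat those attribute names as assumptions to verify against your installed version. The helper name, the stand-in environment and the brain name `CarBrain` are hypothetical, used so the example runs without Unity.

```python
from types import SimpleNamespace

def select_default_brain(env):
    """Return (name, parameters) of the first brain exposed by `env`."""
    if not env.brain_names:
        raise ValueError("the environment exposes no brains")
    name = env.brain_names[0]
    return name, env.brains[name]

# Stand-in object mimicking the two attributes used above. With a real
# Unity environment, `env` would be the object created when the
# environment is started from Python.
fake_env = SimpleNamespace(
    brain_names=["CarBrain"],
    brains={"CarBrain": SimpleNamespace(vector_action_space_type="discrete")},
)

default_brain, brain = select_default_brain(fake_env)
print(default_brain, brain.vector_action_space_type)
```

Keeping the selection in one helper makes it easy to fail early with a clear message if the environment did not load any brain.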

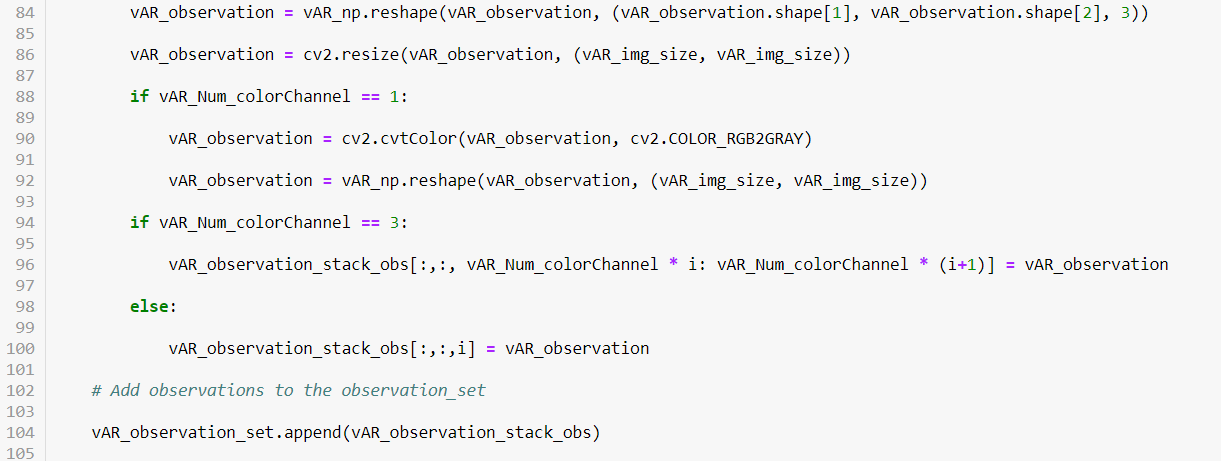

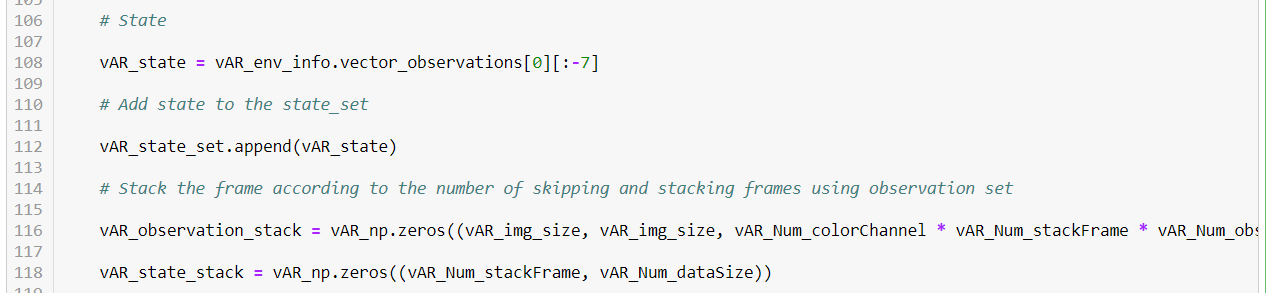

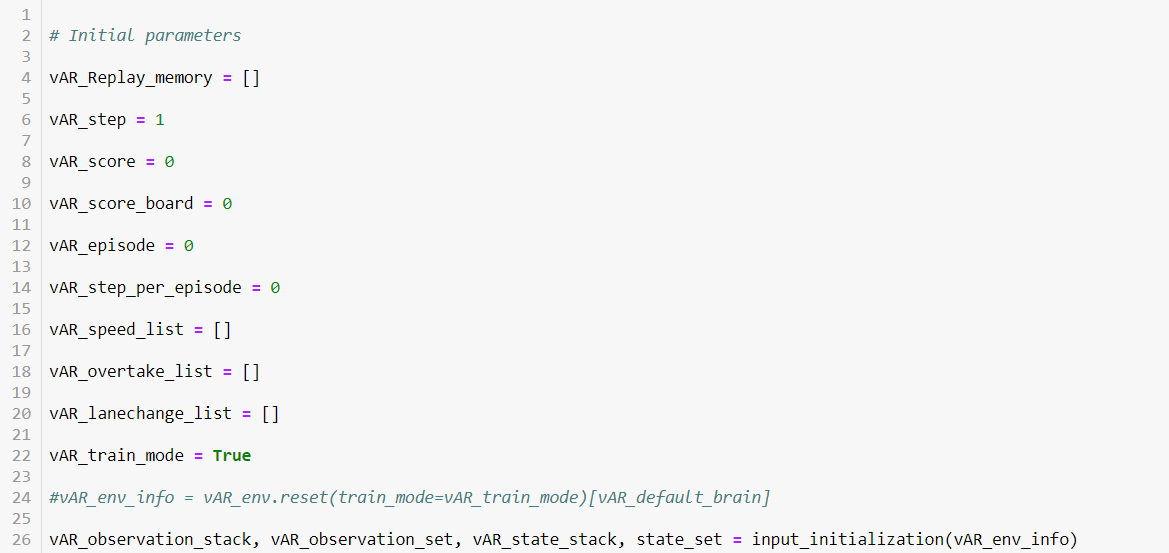

We can reset the environment to obtain an initial set of observations and states for all the agents within the environment. In ML-Agents, states refer to a vector of variables corresponding to relevant aspects of the environment for an agent. Likewise, observations refer to a set of relevant pixel-wise visuals for an agent.

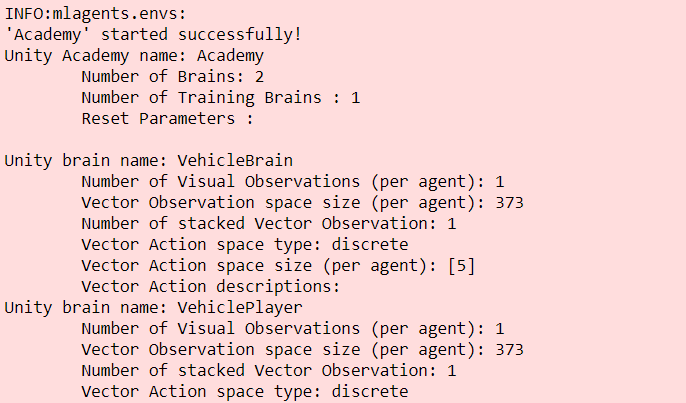

This is what the brain looks like

In Deep Q-learning, we use a neural network to approximate the Q-value function. The state is given as the input and the Q-value of all possible actions is generated as the output.
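In the standard DQN formulation, this approximation and its training signal can be written as below. The symbols are not from the original text and are introduced here as assumptions: $\theta$ are the network weights, $\theta^{-}$ the weights of a periodically updated target network, $\gamma$ the discount factor, $r$ the reward, and $(s, a, s')$ the state, action and next state.

```latex
Q(s, a; \theta) \approx Q^{*}(s, a)

y = r + \gamma \max_{a'} Q(s', a'; \theta^{-})

L(\theta) = \mathbb{E}\left[ \left( y - Q(s, a; \theta) \right)^{2} \right]
```

The network is trained by minimizing $L(\theta)$, that is, by pulling its prediction for the action actually taken toward the target $y$.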

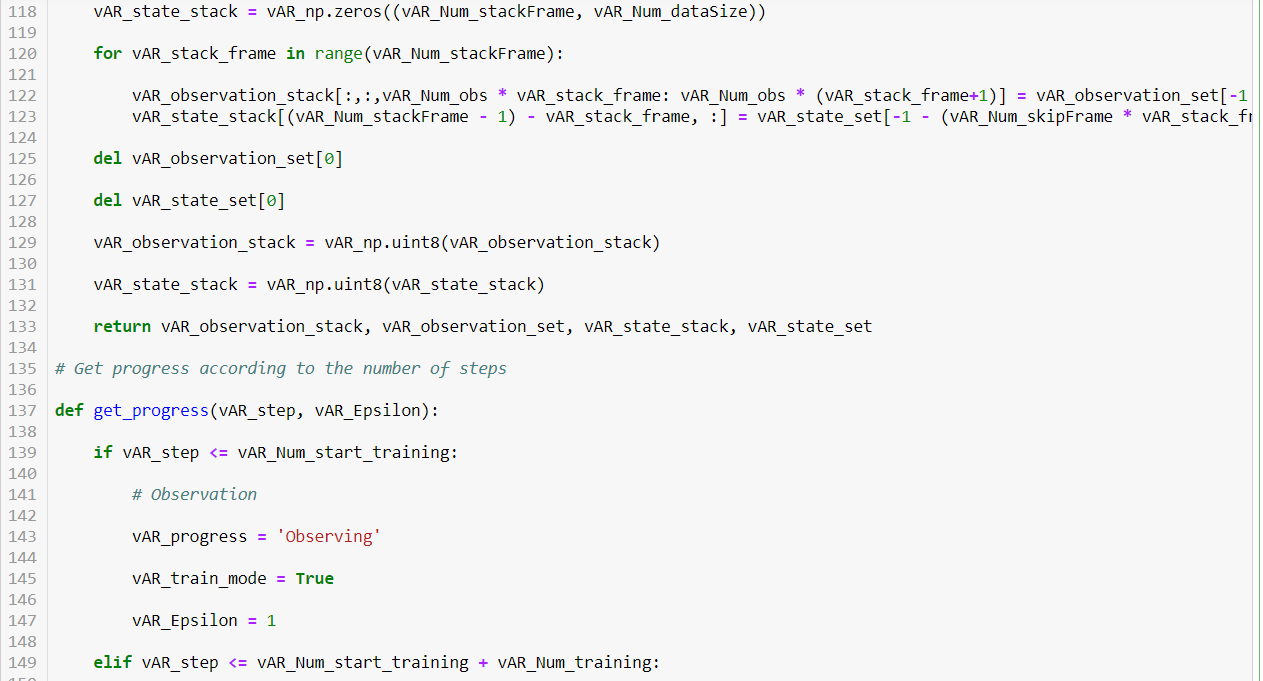

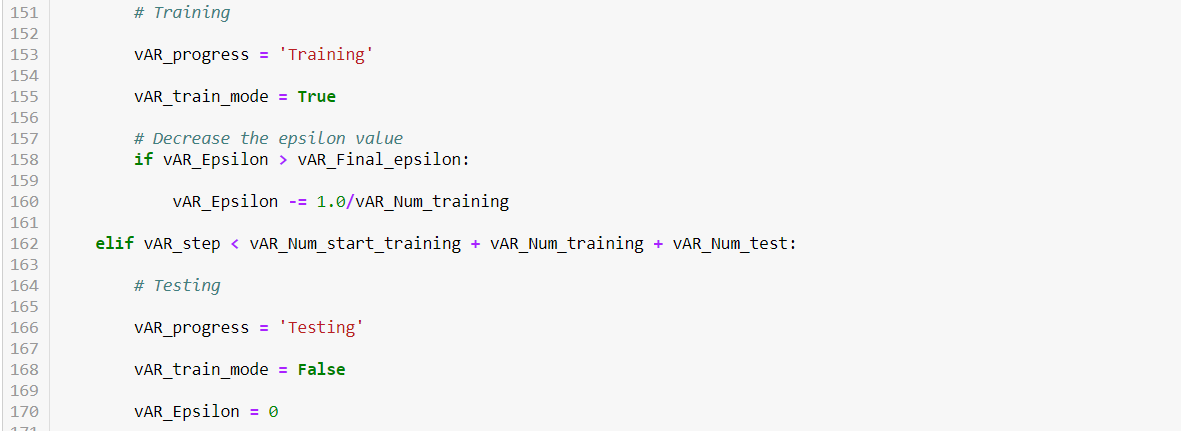

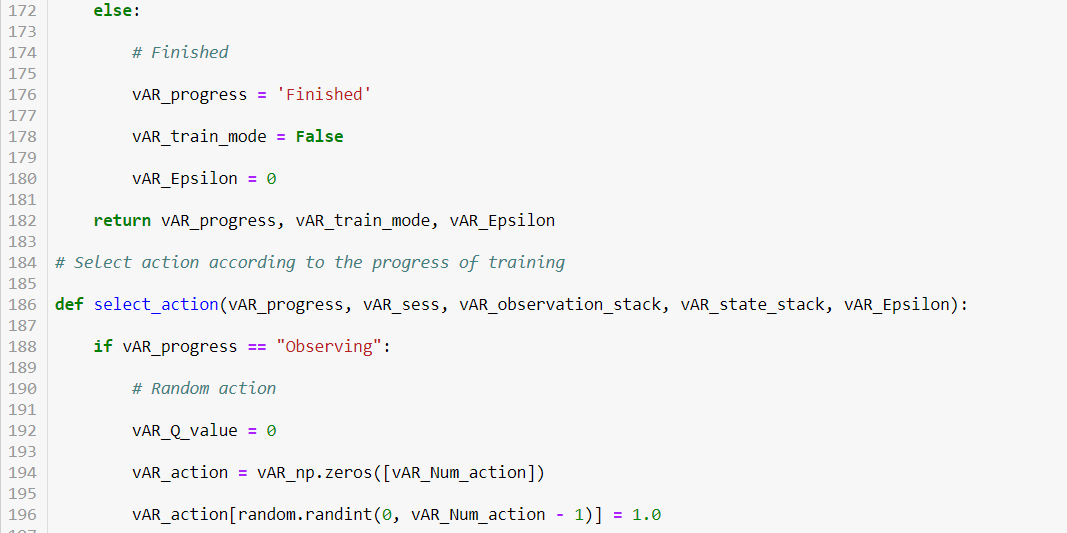

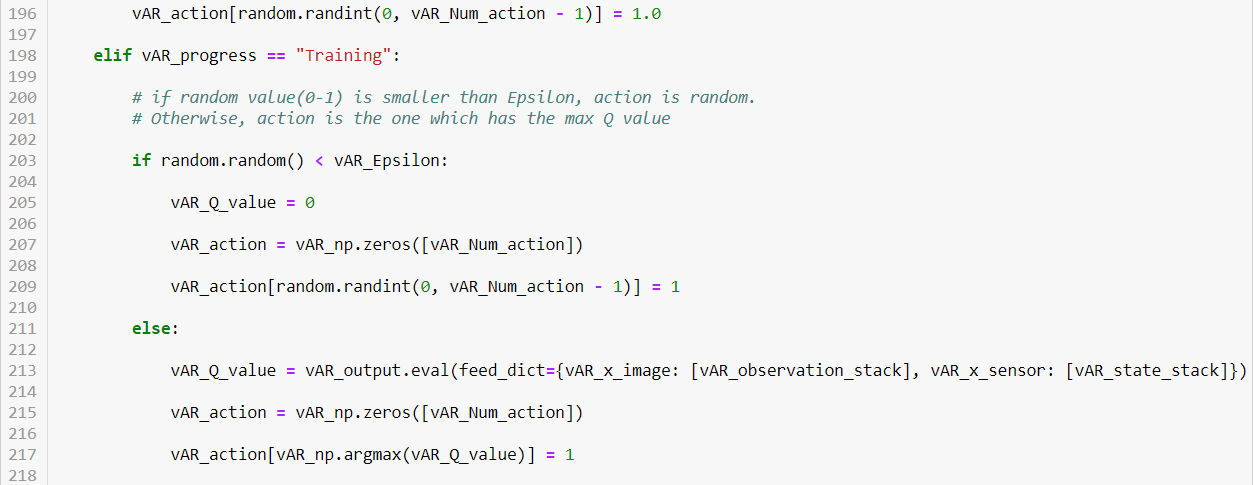

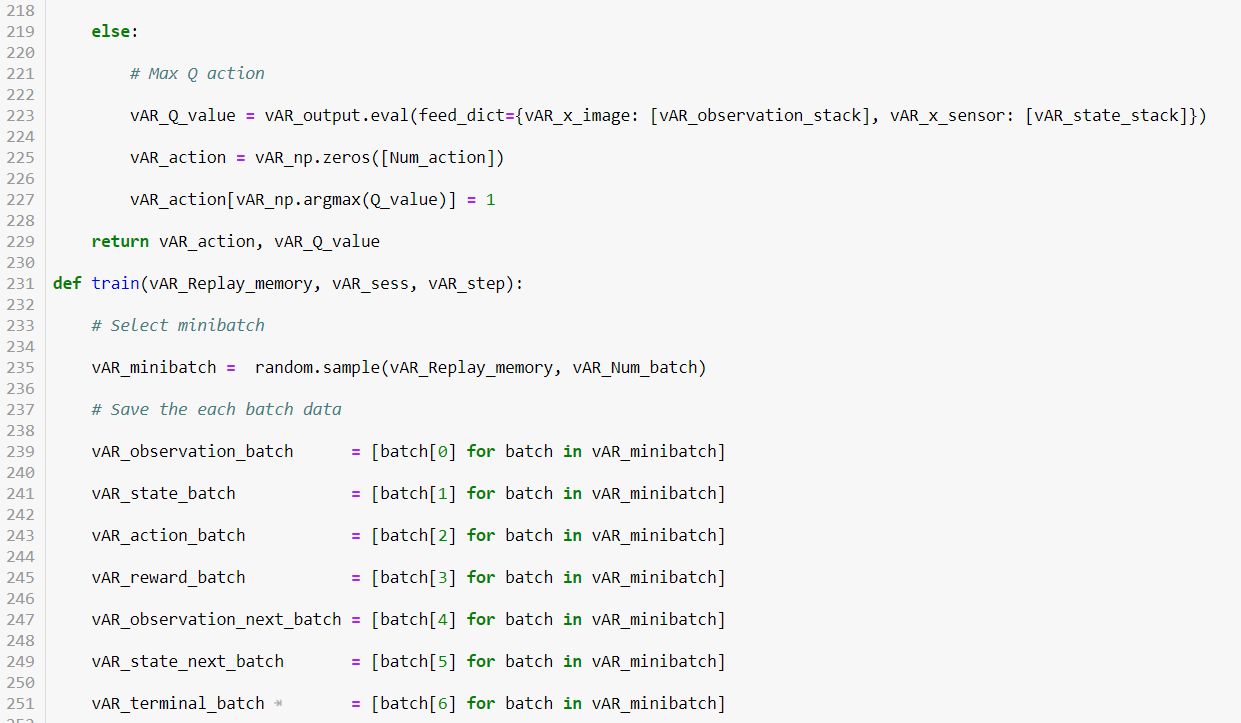

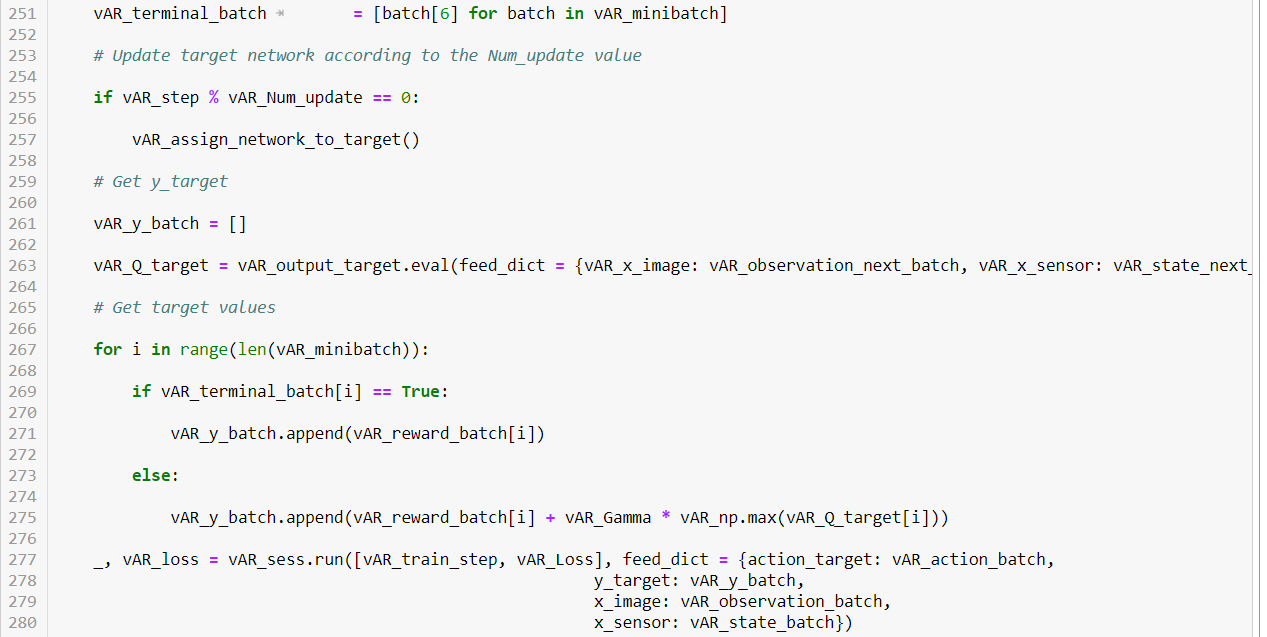

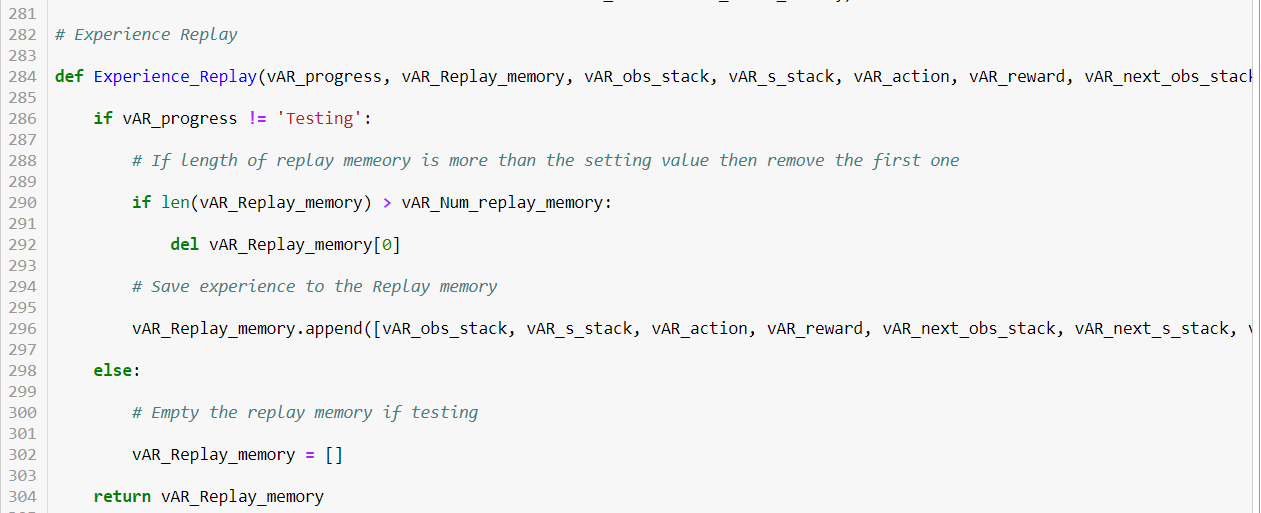

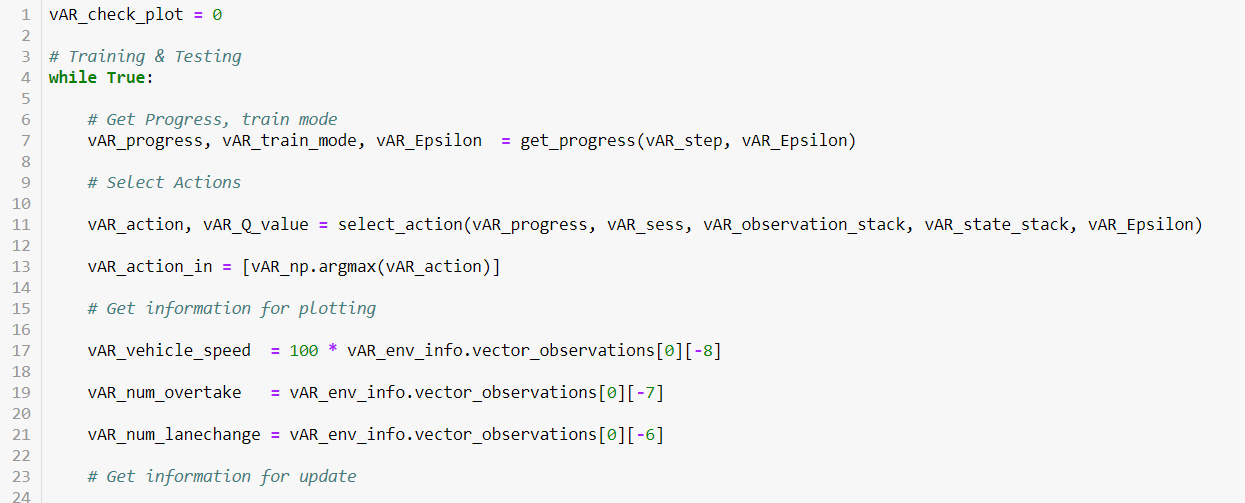

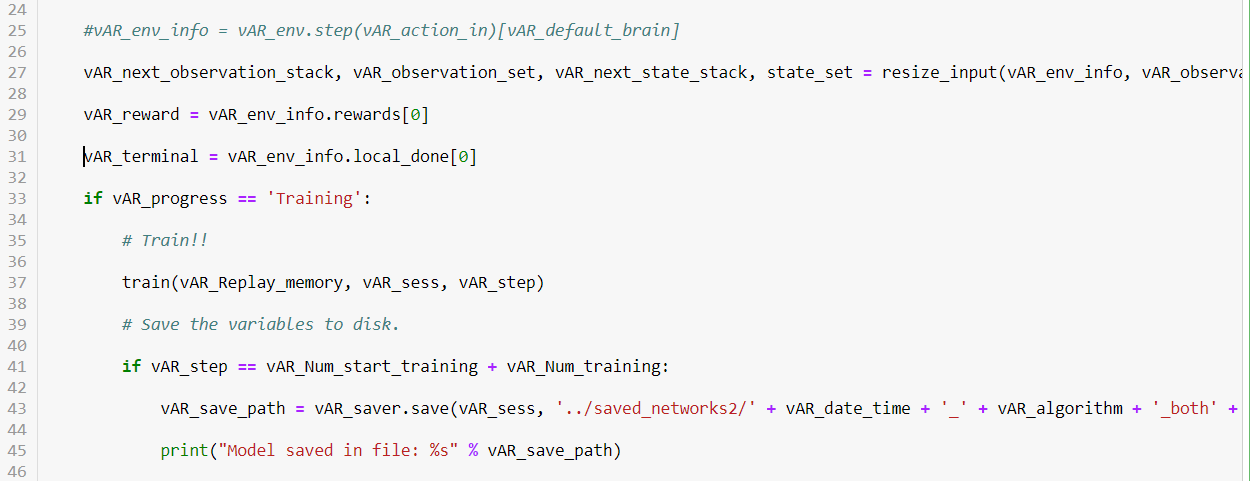

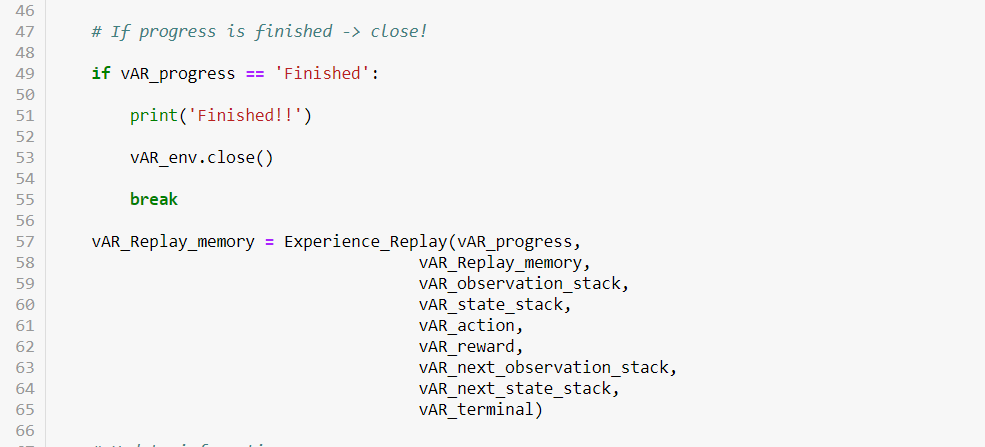

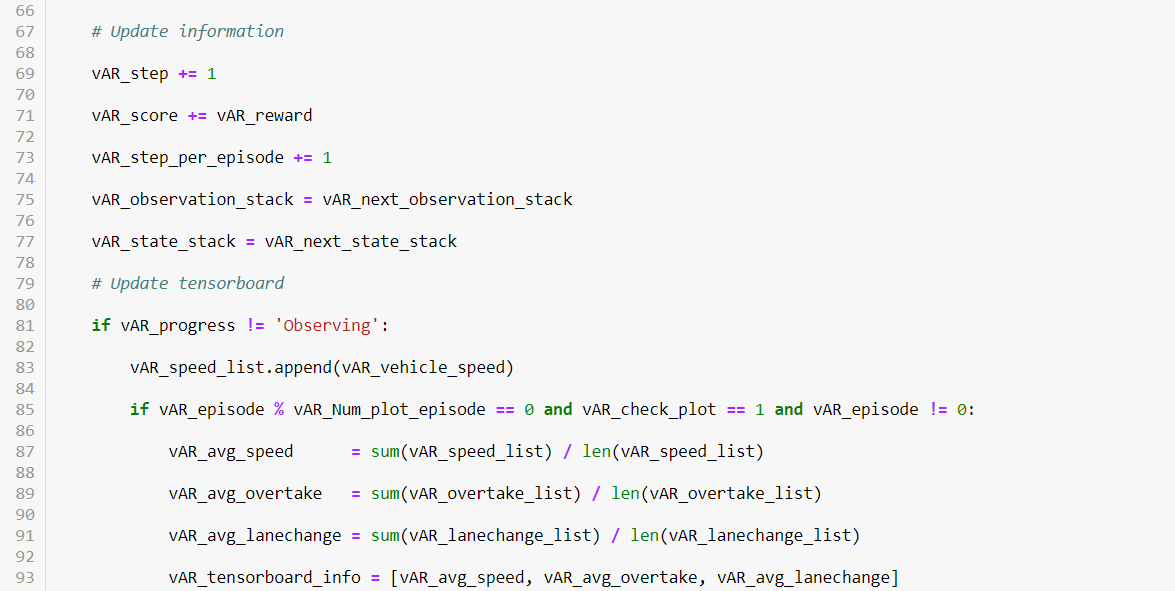

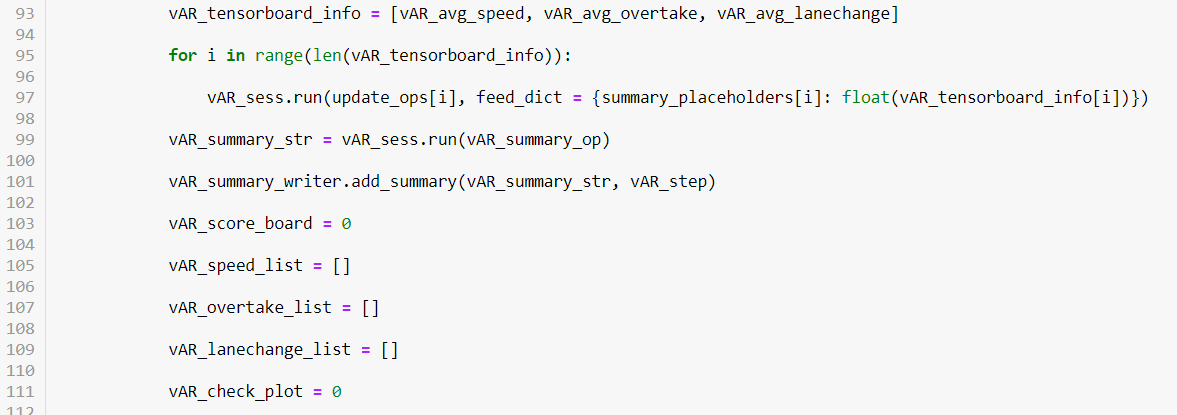

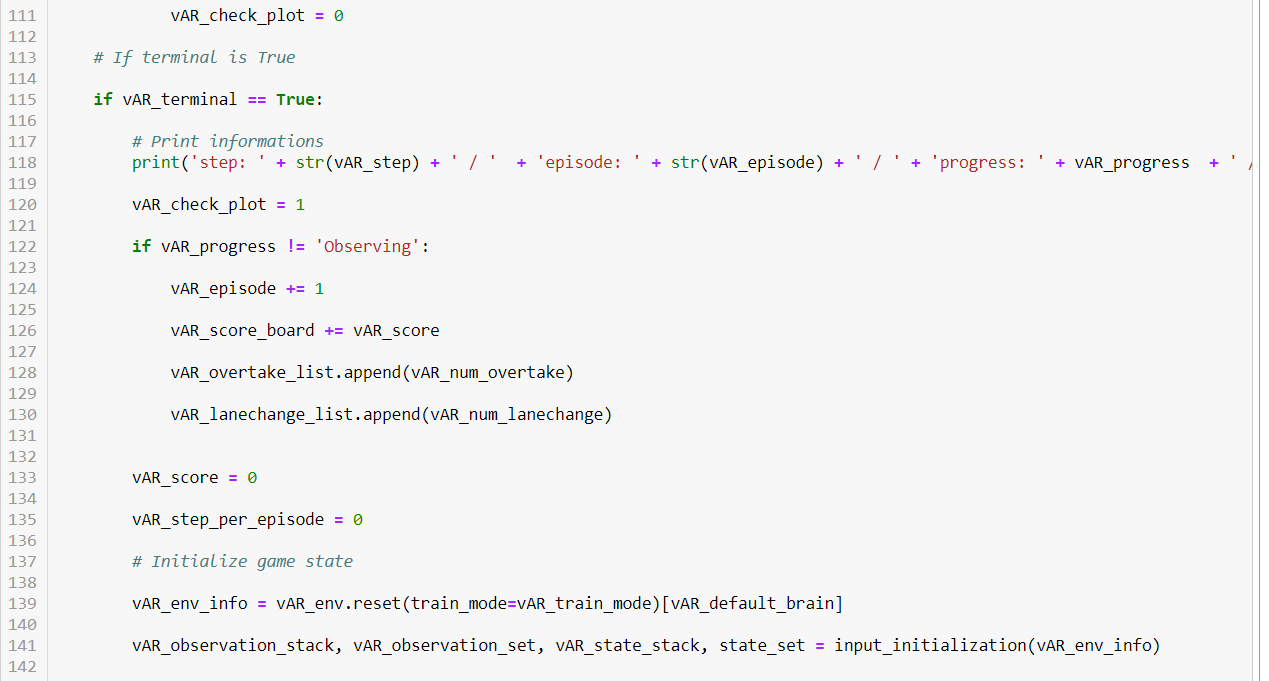

The following are the steps involved in reinforcement learning using deep Q-learning networks (DQNs):

Train the Agent to interact with the environment. We can step the environment forward and provide actions to all of the agents within the environment. The Agent is trained for actions based on the action_space_type of the default brain.
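Two building blocks commonly used when training a DQN agent, an experience replay buffer and the temporal-difference target, can be sketched as follows. This is a generic illustration under stated assumptions, not the training code of this lab: the class and function names, the buffer capacity, the discount factor and the sample transitions are all hypothetical.

```python
import random
from collections import deque

import numpy as np

class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity, seed=0):
        self.memory = deque(maxlen=capacity)  # oldest experience is dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Return a random mini-batch as NumPy arrays."""
        batch = self.rng.sample(list(self.memory), batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return (
            np.array(states),
            np.array(actions),
            np.array(rewards, dtype=float),
            np.array(next_states),
            np.array(dones, dtype=float),
        )

def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """reward + gamma * max next Q-value, with no bootstrap at episode end."""
    return rewards + gamma * next_q_values.max(axis=1) * (1.0 - dones)

# Store two illustrative transitions, then draw them back as a batch.
buffer = ReplayBuffer(capacity=100)
buffer.add([0.0, 1.0], 1, 1.0, [0.5, 1.0], False)
buffer.add([0.5, 1.0], 0, -10.0, [1.0, 1.0], True)
states, actions, rewards, next_states, dones = buffer.sample(batch_size=2)

# Targets for a fixed example batch; next_q stands in for network outputs.
next_q = np.array([[0.2, 0.8], [0.3, 0.1]])
targets = td_targets(
    np.array([1.0, -10.0]), next_q, np.array([0.0, 1.0]), gamma=0.9
)
print(states.shape, targets)
```

Sampling past experience at random breaks the correlation between consecutive frames, and the `done` flag ensures that a terminal step is valued by its reward alone.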

Before Training

After Training

In this lab work, we used Deep Q Networks, a reinforcement learning model, to train the agent in the self-driving car environment. The model performed well & was able to produce the expected outcome.

This implementation is meant to help you learn and better understand the overall steps and processes involved in implementing a reinforcement learning model. Many more steps, processes, data and technologies are involved in practice. We strongly recommend that you learn and prepare yourself to address real-world problems.

Jothi Periasamy

Chief AI Architect

2100 Geng Road

Suite 210

Palo Alto

CA 94303

(916)-296-0228