All software and hardware used or referenced in this guide belong to their respective vendors. We developed this guide on our own development infrastructure, and it may or may not work on other systems and technical infrastructure. We are not liable for any direct or indirect problems caused to users of this guide.

The purpose of this document is to give users enough information to implement a reinforcement learning model. To do so, we use a well-known game-style control problem, solved with a Deep Q Network, a reinforcement learning model.

A pole is attached by an un-actuated joint to a cart, which moves along a frictionless track. The pendulum starts upright, and the goal is to prevent it from falling over by increasing and reducing the cart's velocity.

The cart-pole system is controlled by applying a force of +1 or -1 to the cart. A reward of +1 is provided for every timestep that the pole remains upright. The episode ends when the pole is more than 15 degrees from vertical, or the cart moves more than 2.4 units from the center.
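The reward and termination rules above can be sketched in a few lines of Python. This is a simplified illustration, not the environment's own code: the function names and the example trajectory are made up, and the thresholds are simply the ones quoted in the text.

```python
# Thresholds quoted in the description above; adjust them if your environment differs.
MAX_ANGLE_DEGREES = 15.0
MAX_CART_DISTANCE = 2.4


def episode_done(pole_angle_degrees, cart_position):
    """Return True when the pole has tipped too far or the cart has left the track."""
    return (abs(pole_angle_degrees) > MAX_ANGLE_DEGREES
            or abs(cart_position) > MAX_CART_DISTANCE)


def total_reward(trajectory):
    """Add +1 for every timestep in which the pole is still upright (simplified)."""
    reward = 0
    for pole_angle_degrees, cart_position in trajectory:
        if episode_done(pole_angle_degrees, cart_position):
            break
        reward += 1
    return reward


# A made-up trajectory of (pole angle in degrees, cart position) pairs.
example = [(0.0, 0.0), (3.0, 0.1), (9.0, 0.3), (16.0, 0.4), (20.0, 0.5)]
print(total_reward(example))
```

The longer the agent keeps the pole within these limits, the larger the total reward for the episode.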

Step 1: Defining a Clear Problem Statement

Step 2: Importing the Environment - Import the environment with which the agent needs to interact.

Step 3: Model Selection - Model selection is the process of choosing between different reinforcement learning approaches. The model selected here is a Deep Q Network.

Step 4: Model Training - Model training is the process of training the agent. The agent interacts with its environment and, by performing actions, arrives at different scenarios known as states. Actions lead to rewards, which can be positive or negative. The agent has a single purpose: to maximize its total reward across an episode.

Step 5: Model Testing - Now that the agent is trained, we test it against the environment to see the rewards it earns.

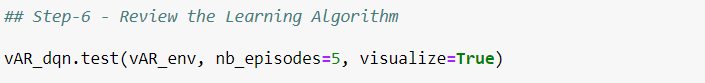

Step 6: Review the Model Outcome - We check the output of the model, i.e. the rewards the agent receives for the actions it takes, against the maximum possible reward.
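The interaction between agent and environment described in the steps above can be sketched as a simple loop. Everything here is hypothetical and chosen only for illustration: ToyEnvironment is a stand-in for a real environment, and the two policies are hand-written rather than learned.

```python
import random


class ToyEnvironment:
    """Hypothetical stand-in for a real environment: a walk on positions -3..3."""

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # Action 0 moves left, action 1 moves right.
        self.position += 1 if action == 1 else -1
        done = abs(self.position) >= 3
        reward = 0 if done else 1
        return self.position, reward, done


def run_episode(env, choose_action, max_steps=50):
    """Run one episode and return the total reward collected."""
    state = env.reset()
    total = 0
    for _ in range(max_steps):
        action = choose_action(state)
        state, reward, done = env.step(action)
        total += reward
        if done:
            break
    return total


def random_policy(state):
    """Ignore the state and act at random."""
    return random.choice([0, 1])


def centering_policy(state):
    """Always push back toward the center."""
    return 0 if state > 0 else 1


random.seed(0)
print("random policy:", run_episode(ToyEnvironment(), random_policy))
print("centering policy:", run_episode(ToyEnvironment(), centering_policy))
```

Training replaces the hand-written policy with one the agent learns from the rewards it observes; testing runs the same loop with the learned policy and reports the total reward.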

Model selection is the process of choosing between different reinforcement learning approaches - e.g. Q-Learning, Deep Q Network, Deep Deterministic Policy Gradient etc. - or choosing between different hyperparameters or sets of features for the same reinforcement learning approach.

The choice of the actual reinforcement learning algorithm (e.g. Q-Learning, Deep Q Network) is less important than you'd think - there may be a "best" algorithm for a particular problem, but often its performance is not much better than other well-performing approaches for that problem.

There may be certain qualities you look for in a model:

Our problem is a reinforcement learning problem: preventing the pendulum from falling over by increasing and reducing the cart's velocity. This type of problem can be solved by the following models.

We are going to use a Deep Q Network as the best-fit model for our problem statement, because the agent chooses between only two discrete actions (push the cart left or push it right) at each step, which suits a method that estimates one Q-value per action.

There are several machine learning and data engineering libraries available. We use the following libraries, whose functions are readily available in Python for developing business applications.

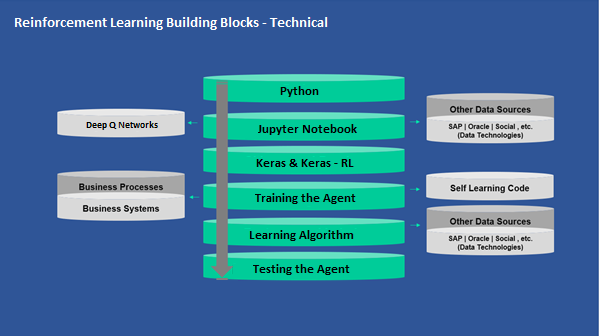

As explained above, we are using the Deep Q Network (DQN) reinforcement learning model.

There are several technical and functional components involved in implementing this model. Here are the key building blocks to implement the model.

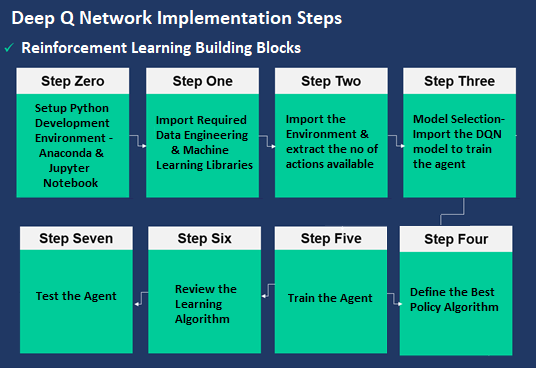

Implementing a model to address a given problem involves several steps. Here are the key steps. You can customize them as needed; we developed them for learning purposes only.

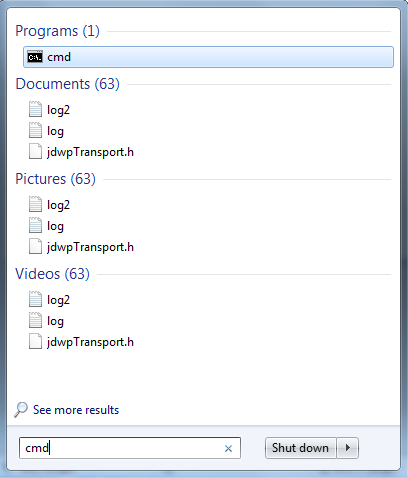

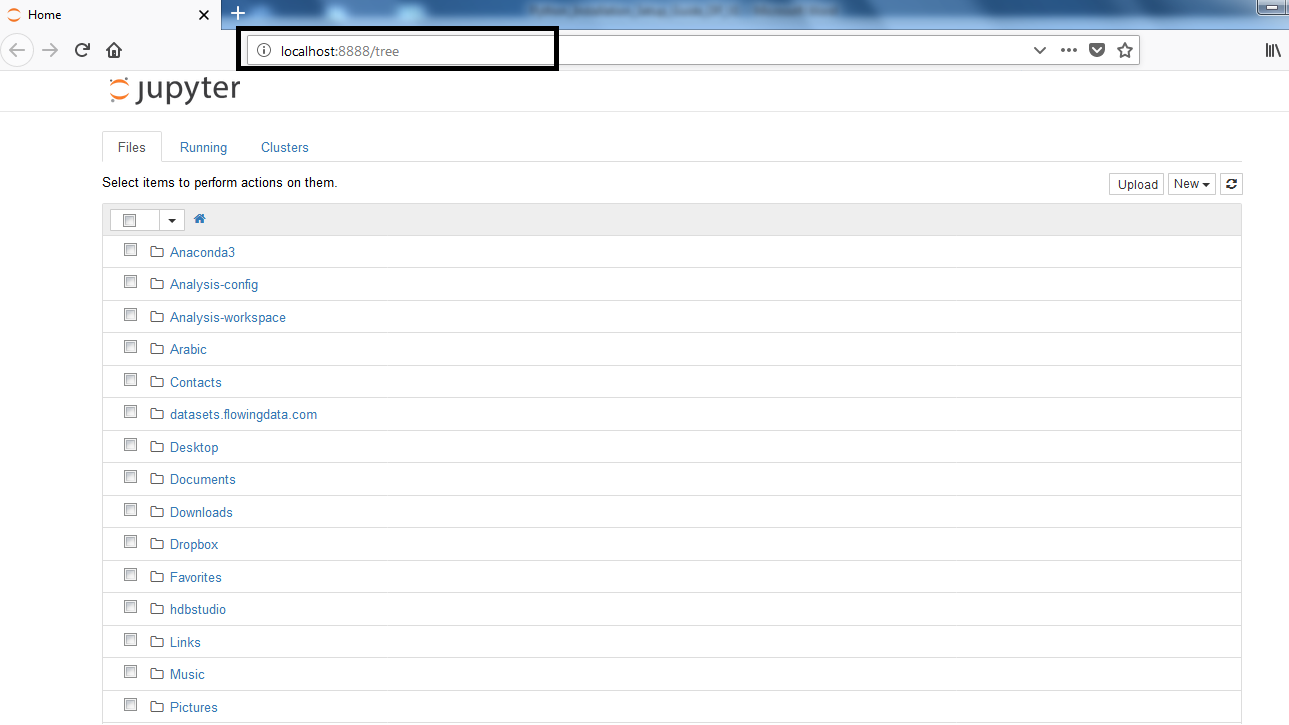

Jupyter Notebook is launched from the command prompt. Type cmd in the search box to open the Command Prompt terminal.

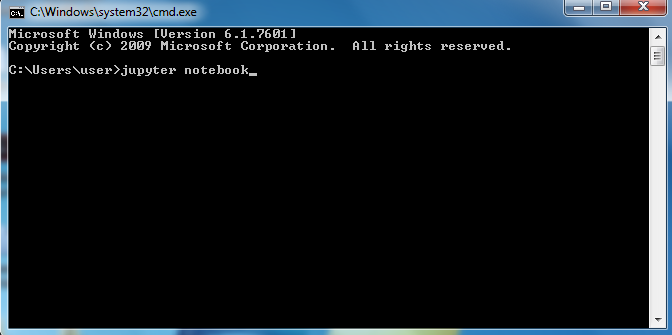

Now type jupyter notebook and press Enter, as shown.

The page below then opens.

To open a new file, follow the instructions below.

Go to New >>> Python [conda root]

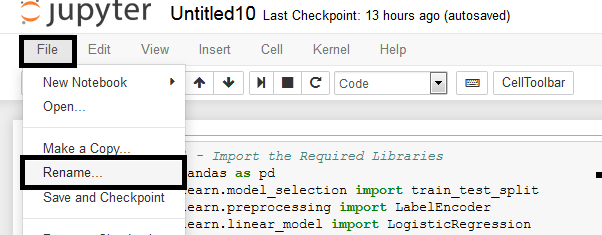

Give the file a meaningful name, as shown below.

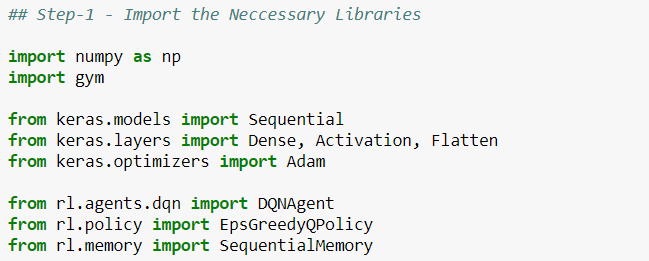

For our model implementation we need the following libraries:

Keras: Keras is an open source neural network library written in Python. It is capable of running on top of TensorFlow, Microsoft Cognitive Toolkit or Theano.

Keras-rl: Keras-RL is a library for Deep Reinforcement Learning with Keras.

Gym: Gym is a toolkit for developing and comparing reinforcement learning algorithms.

Numpy: NumPy is a general-purpose array-processing package. It provides a high-performance multidimensional array object, and tools for working with these arrays.

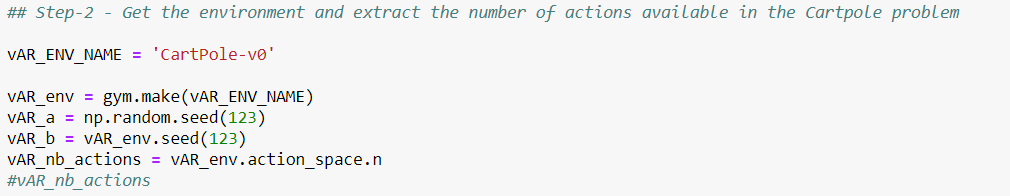

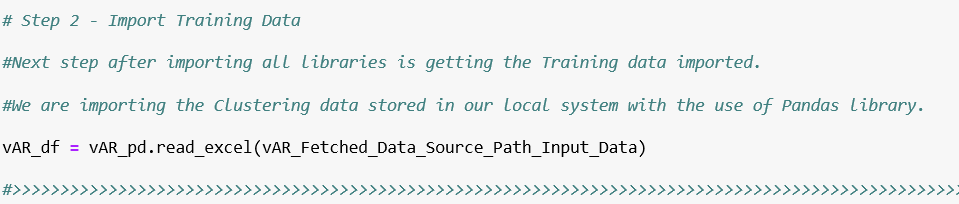

The next step after importing all the libraries is to import the agent's environment and extract the number of actions available.

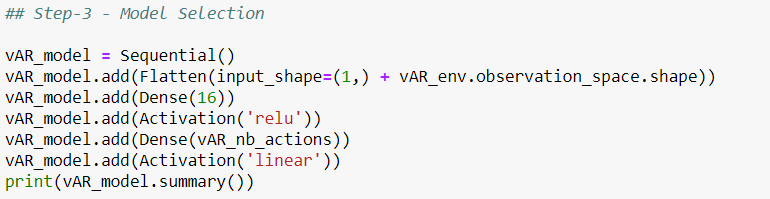

Step 3 of the implementation is model selection: choosing between different reinforcement learning approaches (e.g. Q-Learning, Deep Q Networks) or between different hyperparameters or sets of features for the same approach.
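To make the idea of a Q-network concrete, here is a minimal pure-Python sketch of a forward pass that maps a cart-pole state (cart position, cart velocity, pole angle, pole angular velocity) to one estimated Q-value per action. The layer width, the random weights and the example state are assumptions made for illustration; this is not the Keras model used in this guide.

```python
import random

STATE_SIZE = 4    # cart position, cart velocity, pole angle, pole angular velocity
HIDDEN_SIZE = 16  # assumed hidden-layer width, not taken from this guide
NB_ACTIONS = 2    # push left or push right


def make_layer(n_inputs, n_outputs, rng):
    """Create a dense layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def dense(inputs, layer, relu=False):
    """Apply one dense layer, optionally followed by a ReLU activation."""
    weights, biases = layer
    outputs = [sum(w * x for w, x in zip(row, inputs)) + b
               for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in outputs] if relu else outputs


def q_values(state, hidden_layer, output_layer):
    """Map a state to one estimated Q-value per action."""
    return dense(dense(state, hidden_layer, relu=True), output_layer)


rng = random.Random(0)
hidden_layer = make_layer(STATE_SIZE, HIDDEN_SIZE, rng)
output_layer = make_layer(HIDDEN_SIZE, NB_ACTIONS, rng)

state = [0.0, 0.1, 0.02, -0.1]  # made-up observation
print(q_values(state, hidden_layer, output_layer))
```

With untrained weights the two outputs are meaningless; training adjusts the weights so that each output approximates the long-term reward of taking that action in the given state.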

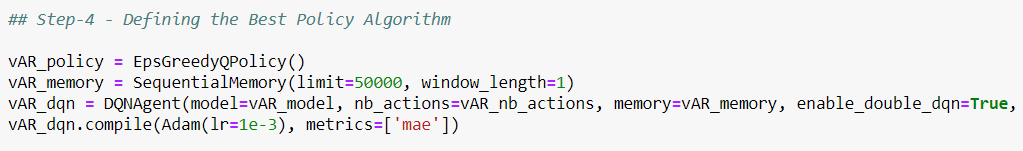

Step 4 is defining the policy. The policy is the strategy the agent uses to pick an action in each state; the best policy is the one that yields the maximum reward.
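A common policy for Q-learning agents is epsilon-greedy: most of the time the agent takes the action with the highest estimated Q-value, and with a small probability epsilon it explores by acting at random. The sketch below illustrates the idea in plain Python; the function name and the epsilon value of 0.1 are assumptions, and this is not the keras-rl implementation.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda action: q_values[action])


rng = random.Random(0)
q_estimates = [0.2, 0.8]  # made-up Q-values for actions 0 and 1
choices = [epsilon_greedy(q_estimates, epsilon=0.1, rng=rng) for _ in range(1000)]
print("share of greedy choices:", choices.count(1) / len(choices))
```

Occasional random actions let the agent discover states it would never reach by always following its current estimates.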

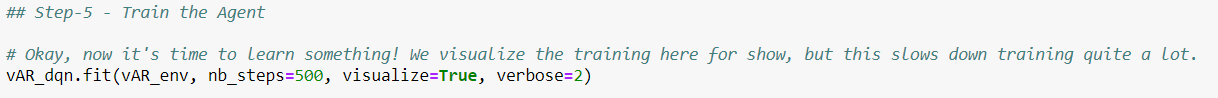

As a next step, we need to train the agent so that it interacts with the environment and learns to perform the actions that yield the maximum possible reward.

Once the agent has learned to perform actions for the maximum possible reward, its learning can be reviewed.
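During training, a Q-learning agent nudges its estimate for the action it took toward a target built from the observed reward plus the discounted value of the best next action. The following is a minimal sketch of that update for a single transition; the discount factor, learning rate and numbers are illustrative assumptions, and a Deep Q Network applies the same target through gradient descent on a neural network rather than this direct update.

```python
def td_target(reward, next_q_values, done, gamma=0.99):
    """Target value: reward now plus discounted best estimated value afterwards."""
    if done:
        return reward  # no future reward after the episode ends
    return reward + gamma * max(next_q_values)


def q_update(current_q, target, learning_rate=0.1):
    """Move the current estimate a small step toward the target."""
    return current_q + learning_rate * (target - current_q)


# One made-up transition: reward 1.0, next-state Q-values [0.5, 2.0].
target = td_target(1.0, [0.5, 2.0], done=False, gamma=0.9)
new_q = q_update(current_q=1.0, target=target)
print(target, new_q)
```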

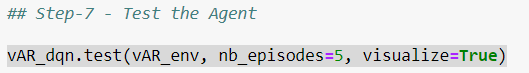

Next, we test the agent with its environment.

Execute the cell to view the results.

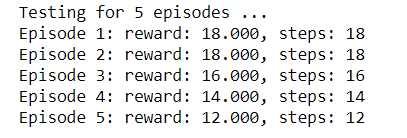

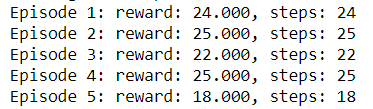

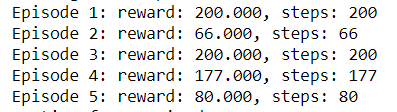

In this lab, we used a Deep Q Network, a reinforcement learning model, to achieve the maximum reward on a simple cart-pole problem. The agent performed well in testing and achieved the maximum reward in the initial episodes.

This is a very basic implementation, meant to help you understand the overall steps and processes involved in implementing a reinforcement learning model. Real projects involve many more steps, processes, data and technologies. We strongly recommend that you learn more and prepare yourself to address real-world problems.

Fitting is a measure of how well a machine learning model generalizes to data similar to that on which it was trained. A well-fitted model produces more accurate outcomes, an overfitted model matches the training data too closely, and an underfitted model does not match it closely enough. Fitting is the essence of machine learning: if your model does not fit your data correctly, the outcomes it produces will not be accurate enough to be useful for practical decision-making.

The model is best fitting when it performs well on the training examples and also performs well on unseen data. Ideally, a model that makes its predictions with zero error is said to have the best fit on the data. This situation is achievable at a point between overfitting and underfitting. To find it, we look at how the model's performance changes over time while it is learning from the training data.

vAR_policy = EpsGreedyQPolicy()

vAR_memory = SequentialMemory(limit=50000, window_length=1)

vAR_dqn = DQNAgent(model=vAR_model, nb_actions=vAR_nb_actions, memory=vAR_memory, enable_double_dqn=True, nb_steps_warmup=10, target_model_update=1e-2, policy=vAR_policy)

vAR_dqn.compile(Adam(lr=1e-3), metrics=['mae'])
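The configuration above passes target_model_update=1e-2. In keras-rl, a value below 1 is treated as a soft-update rate: after each training step the target network's weights move a small fraction of the way toward the online network's weights. The sketch below shows that blending on plain lists of numbers; the function name and the example weights are assumptions for illustration, not keras-rl code.

```python
def soft_update(target_weights, online_weights, tau=1e-2):
    """Blend the target weights a fraction tau of the way toward the online weights."""
    return [(1.0 - tau) * t + tau * o
            for t, o in zip(target_weights, online_weights)]


target = [0.0, 0.0]   # made-up target-network weights
online = [1.0, -2.0]  # made-up online-network weights
for _ in range(100):
    target = soft_update(target, online)
print(target)
```

Because the target changes slowly, the values the agent is trained toward stay stable from one step to the next.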

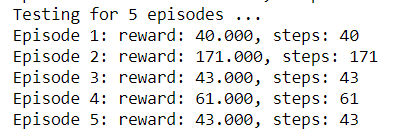

The model is overfitting when it performs well on the training examples but does not perform well on unseen data. This is often the result of an excessively complex model. It happens because the model memorizes the relationship between the input examples (often called X) and the target variable (often called y), and so is unable to generalize well. An overfitting model predicts the targets in the training data set very accurately.

vAR_policy = EpsGreedyQPolicy()

vAR_memory = SequentialMemory(limit=50000, window_length=1)

vAR_dqn = DQNAgent(model=vAR_model, nb_actions=vAR_nb_actions, memory=vAR_memory, enable_double_dqn=False, nb_steps_warmup=10, target_model_update=1e-2, policy=vAR_policy)

vAR_dqn.compile(Adam(lr=1e-3), metrics=['mae'])
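The enable_double_dqn flag switches between two ways of computing the learning target. A standard DQN lets the target network both choose and evaluate the best next action, which tends to overestimate values; Double DQN lets the online network choose the action and the target network evaluate it. The sketch below contrasts the two on made-up numbers; the function names and values are illustrative assumptions.

```python
def dqn_target(reward, next_q_target, gamma=0.99):
    """Standard DQN: the target network both selects and evaluates the next action."""
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99):
    """Double DQN: the online network selects, the target network evaluates."""
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


next_q_online = [1.0, 2.0]  # made-up online-network estimates for the next state
next_q_target = [3.0, 0.5]  # made-up target-network estimates for the next state
print(dqn_target(1.0, next_q_target, gamma=0.9))
print(double_dqn_target(1.0, next_q_online, next_q_target, gamma=0.9))
```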

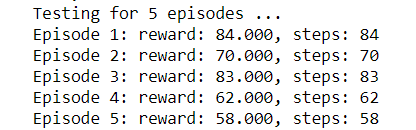

The predictive model is said to be underfitting if it performs poorly on the training data. This happens because the model is unable to capture the relationship between the input examples and the target variable. It could be because the model is too simple, or because the input features are not expressive enough to describe the target variable well. An underfitting model does not predict the targets in the training data set very accurately. Underfitting can be reduced by using a more expressive model, adding more informative features, or training for longer.

vAR_policy = EpsGreedyQPolicy()

vAR_memory = SequentialMemory(limit=50000, window_length=1)

vAR_dqn = DQNAgent(model=vAR_model, nb_actions=vAR_nb_actions, memory=vAR_memory)

vAR_dqn.compile(Adam(lr=1e-3), metrics=['mae'])
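Each configuration in this guide creates a SequentialMemory with limit=50000. The idea behind such a memory is a bounded buffer of past transitions from which random batches are drawn for training. The sketch below shows that idea with a small limit so the behavior is easy to see; the ReplayMemory class is a hypothetical illustration, not the keras-rl implementation.

```python
import random
from collections import deque


class ReplayMemory:
    """Hypothetical bounded buffer of (state, action, reward, next_state, done)."""

    def __init__(self, limit):
        # A deque with maxlen silently drops the oldest item when full.
        self.buffer = deque(maxlen=limit)

    def append(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        """Return batch_size distinct transitions chosen at random."""
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


memory = ReplayMemory(limit=3)
for step in range(5):
    memory.append(state=step, action=0, reward=1.0, next_state=step + 1, done=False)

print(len(memory))                        # only the most recent transitions remain
print(memory.sample(2, random.Random(0)))
```

Sampling at random from stored experience breaks the correlation between consecutive steps, which makes training more stable.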

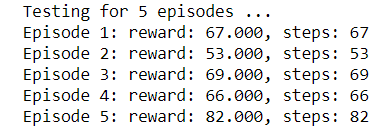

Hyperparameter Optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. The same kind of machine learning model can require different constraints, weights or learning rates to generalize different data patterns. These measures are called hyperparameters, and have to be tuned so that the model can optimally solve the machine learning problem. Hyperparameter optimization finds a tuple of hyperparameters that yields an optimal model which minimizes a predefined loss function on given independent data.
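One simple way to search for a good tuple of hyperparameters is a grid search: try every combination from a small set of candidate values and keep the one with the best score. The sketch below uses the two settings that vary in this guide's configurations, nb_steps_warmup and enable_double_dqn. The toy_score function is a made-up placeholder so the example runs; it does not reflect real training results, and in practice it would train the agent with the given settings and return its average test reward.

```python
from itertools import product


def grid_search(search_space, evaluate):
    """Try every combination in search_space and return (best_config, best_score)."""
    names = list(search_space)
    best_config, best_score = None, float("-inf")
    for values in product(*(search_space[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_score(config):
    """Made-up placeholder score; replace with train-then-test reward."""
    bonus = 1.0 if config["enable_double_dqn"] else 0.0
    return config["nb_steps_warmup"] / 100.0 + bonus


search_space = {
    "nb_steps_warmup": [10, 100],
    "enable_double_dqn": [False, True],
}
best_config, best_score = grid_search(search_space, toy_score)
print(best_config, best_score)
```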

vAR_policy = EpsGreedyQPolicy()

vAR_memory = SequentialMemory(limit=50000, window_length=1)

vAR_dqn = DQNAgent(model=vAR_model, nb_actions=vAR_nb_actions, memory=vAR_memory, enable_double_dqn=True, nb_steps_warmup=100, target_model_update=1e-2, policy=vAR_policy)

vAR_dqn.compile(Adam(lr=1e-3), metrics=['mae'])

vAR_policy = EpsGreedyQPolicy()

vAR_memory = SequentialMemory(limit=50000, window_length=1)

vAR_dqn = DQNAgent(model=vAR_model, nb_actions=vAR_nb_actions, memory=vAR_memory, enable_double_dqn=False, nb_steps_warmup=10, target_model_update=1e-2, policy=vAR_policy)

vAR_dqn.compile(Adam(lr=1e-3), metrics=['mae'])

Our team comprises MIT facilitators, Harvard PhDs, Stanford alumni, leading management consulting experts, industry leaders and proven entrepreneurs. Collectively, our team brings business and technology together with risk-free implementation of artificial intelligence for enterprise.

Jothi Periasamy

Chief AI Architect

2100 Geng Road

Suite 210

Palo Alto

CA 94303

(916)-296-0228