All software and hardware used or referenced in this guide belong to their respective vendors. We developed this guide on our own development infrastructure, so it may not work as described on other systems or technical infrastructure. We are not liable for any direct or indirect problems that users experience as a result of following this guide.

The purpose of this document is to give users enough information to implement a K-Means clustering model. To do this, we work through one of the most common financial problems that companies face and solve it with K-Means clustering, an unsupervised machine learning model.

Problem Statement:

Identify intercompany transactions that have a difference in their bookings, that is, transactions for which the buyer and the seller declare different amounts for the same transaction (an incorrect transaction amount).
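To make the problem statement concrete, the check can be sketched in plain Python: both bookings of an intercompany transaction are matched on a shared reference, and the pair is flagged when the booked amounts differ. The field names (`txn_id`, `company`, `partner`, `amount`), the tolerance and the sample values are hypothetical and are not taken from the lab dataset.

```python
from collections import defaultdict

# Hypothetical sample bookings: each intercompany transaction is booked
# once by the seller and once by the buyer.
bookings = [
    {"txn_id": "T1", "company": "A", "partner": "B", "amount": 1000.0},
    {"txn_id": "T1", "company": "B", "partner": "A", "amount": 1000.0},
    {"txn_id": "T2", "company": "A", "partner": "C", "amount": 500.0},
    {"txn_id": "T2", "company": "C", "partner": "A", "amount": 450.0},
]


def find_mismatched_pairs(rows, tolerance=0.01):
    """Return {txn_id: (amount_1, amount_2)} for pairs whose amounts differ."""
    amounts_by_txn = defaultdict(list)
    for row in rows:
        amounts_by_txn[row["txn_id"]].append(row["amount"])

    mismatched = {}
    for txn_id, amounts in amounts_by_txn.items():
        # Only complete pairs (exactly two bookings) are compared.
        if len(amounts) == 2 and abs(amounts[0] - amounts[1]) > tolerance:
            mismatched[txn_id] = (amounts[0], amounts[1])
    return mismatched


mismatches = find_mismatched_pairs(bookings)
print(mismatches)
```

The lab itself approaches the task with clustering rather than with a rule like this one; the sketch only illustrates what a difference in booking means.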

Business Challenges:

Business Context:

“Company A” is legally obliged to disclose its financials to its internal and/or external stakeholders. During this process, “Company A” collects financial data from all of its subsidiaries and then tries to identify intercompany transactions (for example, transactions between a parent company and its subsidiary), since these are not real financial transactions.

Traditionally, “Company A” has performed this process using various systems, several datasets and a group of accounting experts, and it has been a week-long task.

“Company A” decided to use artificial intelligence to automate this process in order to increase productivity and reduce the time taken to complete the task.

Step 1: Defining a Clear Problem Statement

Step 2: Data Engineering - Import Training & Test Dataset

Step 3: Feature Engineering - Feature engineering is the process of using domain knowledge of the data to create features that make machine learning algorithms work.

Step 4: Model Selection - Model selection is the process of choosing between different machine learning approaches.

Step 5: Model Implementation

Model selection is the process of choosing between different machine learning approaches (e.g. SVM, logistic regression, K-Means) or between different hyperparameters or sets of features for the same approach (e.g. deciding on the polynomial degree for linear regression).

The choice of the actual machine learning algorithm (e.g. SVM or logistic regression) is less important than you'd think - there may be a "best" algorithm for a particular problem, but often its performance is not much better than other well-performing approaches for that problem.

There may be certain qualities you look for in a model:

Our problem here is an unsupervised learning problem: identify and pair intercompany transactions that have a difference in their bookings. This type of problem can be solved by the following models.

We are going to use K-Means clustering because the dataset does not contain any label information. This is the usual approach when unlabelled data needs labels. After the labels are generated, the test dataset is used to predict the outcome we are interested in.
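How K-Means produces labels for unlabelled data can be sketched in plain Python. This is a minimal illustration of the standard assign-and-update loop (Lloyd's algorithm), not the scikit-learn implementation used in the lab; the sample points and the choice of initial centroids are assumptions made for the example.

```python
import math


def kmeans(points, initial_centroids, max_iter=100):
    """Assign each point to its nearest centroid, then move each centroid
    to the mean of its points, until the centroids stop changing."""
    centroids = [tuple(c) for c in initial_centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for every point.
        labels = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Update step: mean of the members of each cluster.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # an empty cluster keeps its centroid
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical 2-D points forming two obvious groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
labels, centroids = kmeans(points, initial_centroids=[points[0], points[3]])
print(labels)
print(centroids)
```

The returned `labels` play the role of the generated labels described above: each row receives the number of the cluster it falls into.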

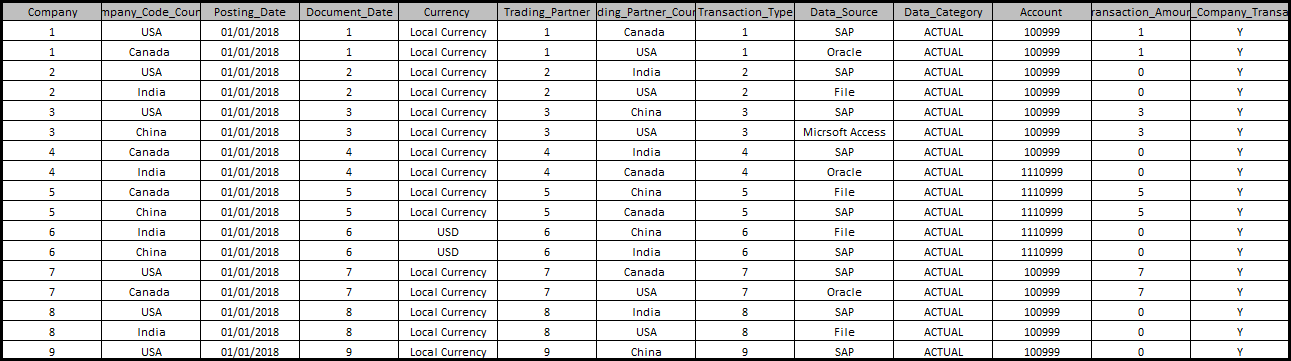

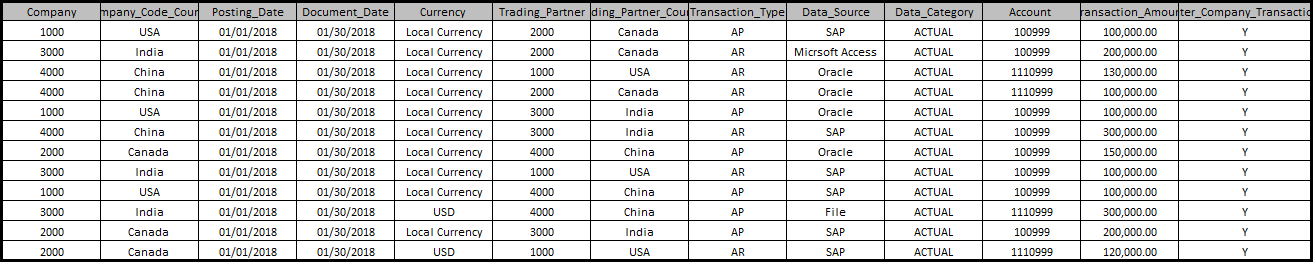

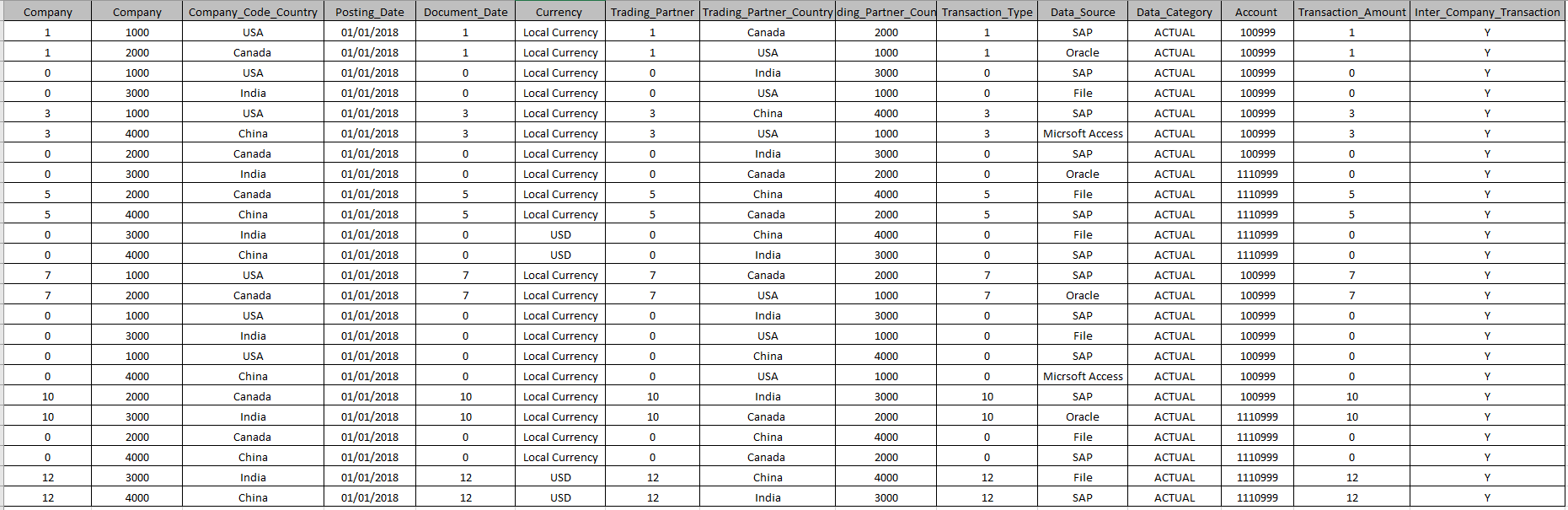

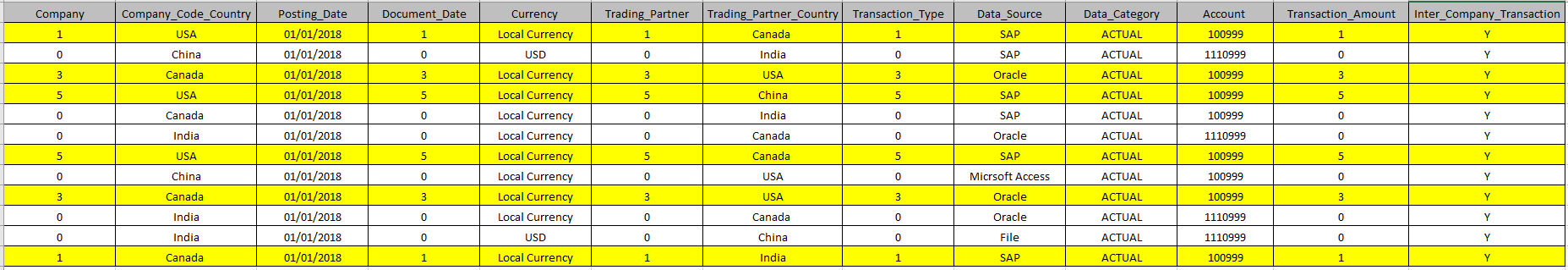

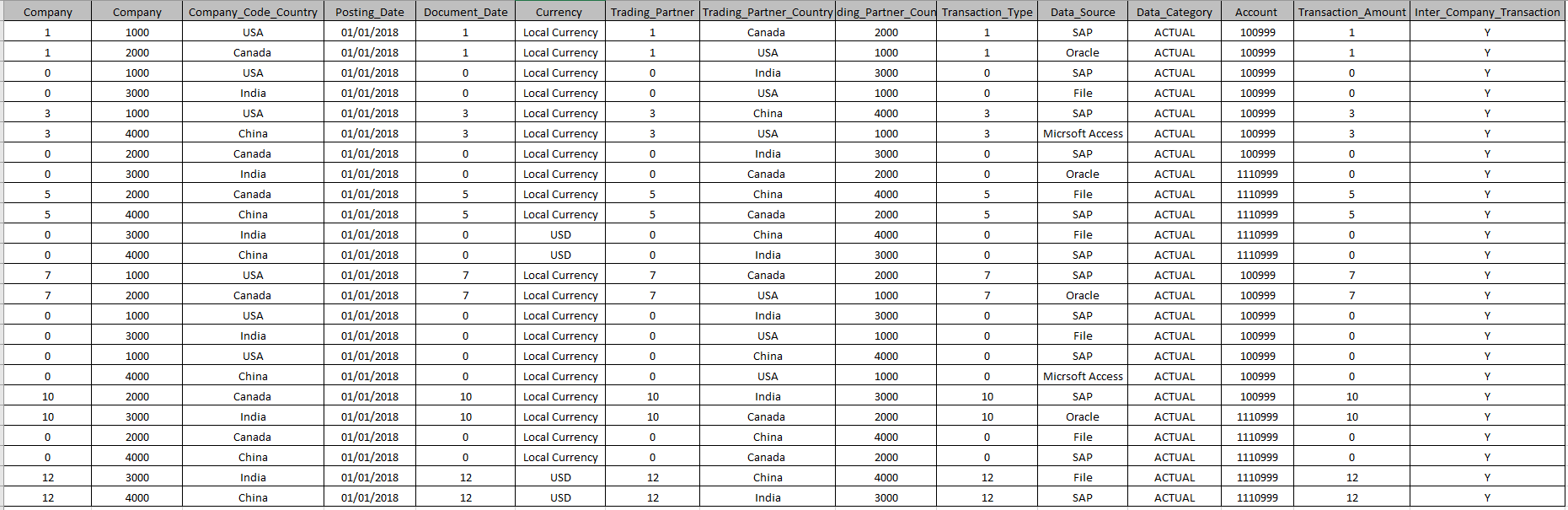

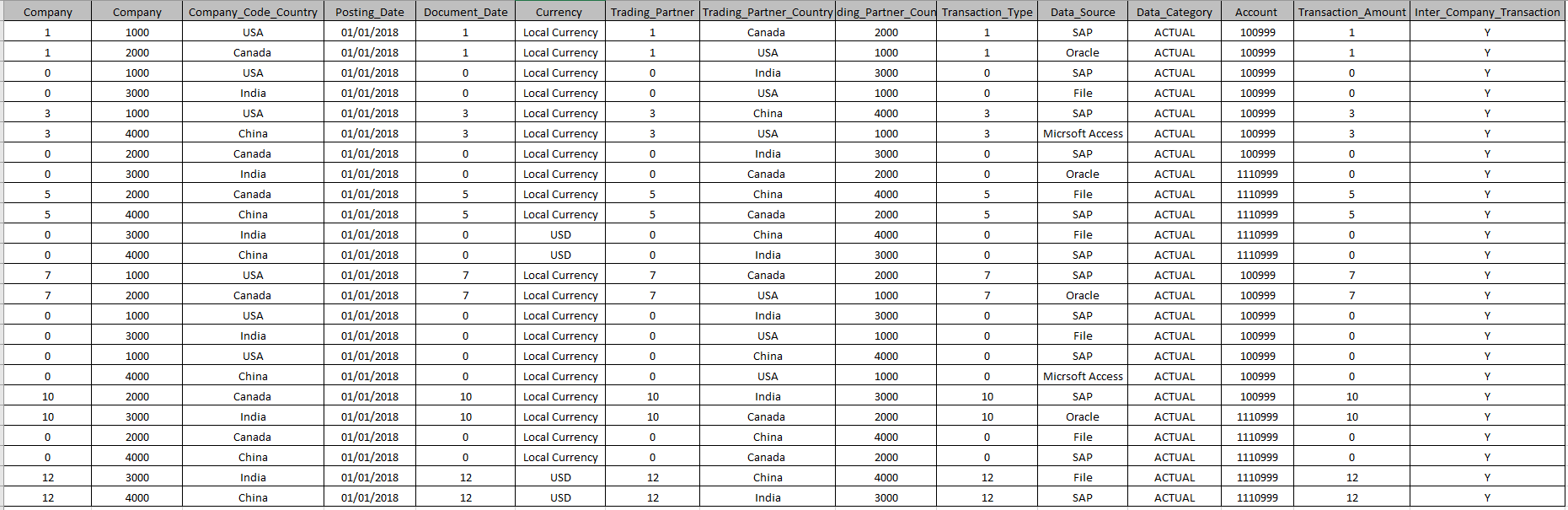

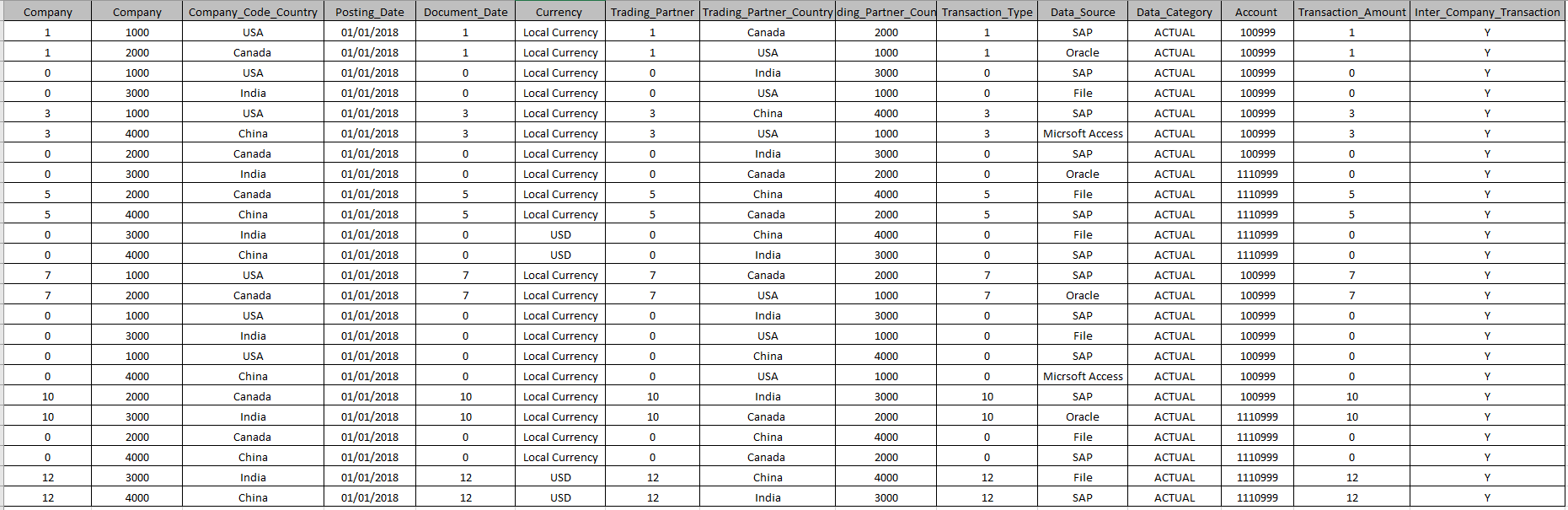

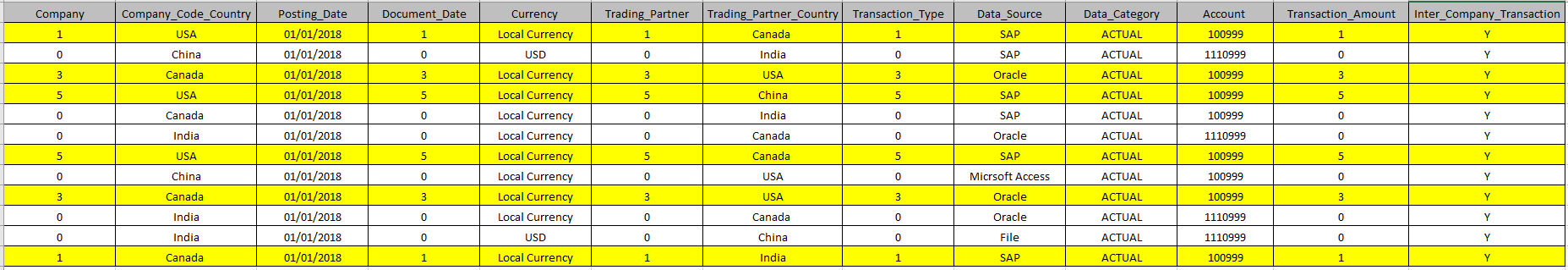

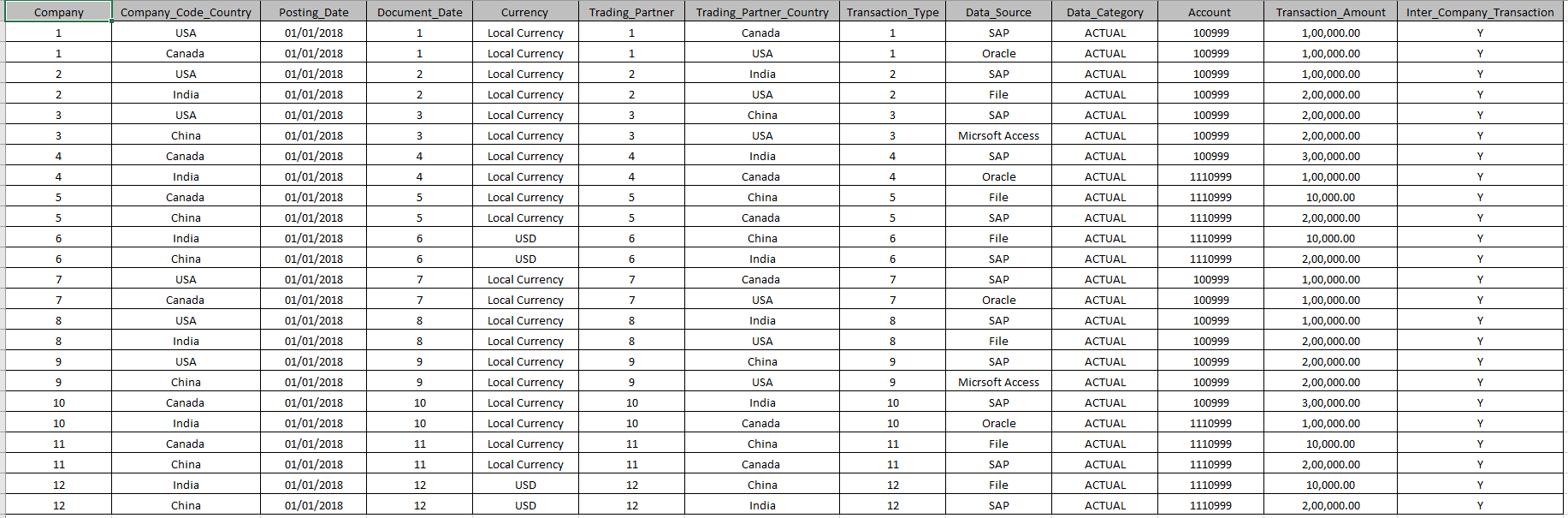

The input data set is the data used to train the model for machine learning tasks such as regression, classification or clustering. It is the data from which the model learns, through the library's APIs and algorithms, so that it can later work automatically.

The following section describes the training data set and its field-level characteristics.

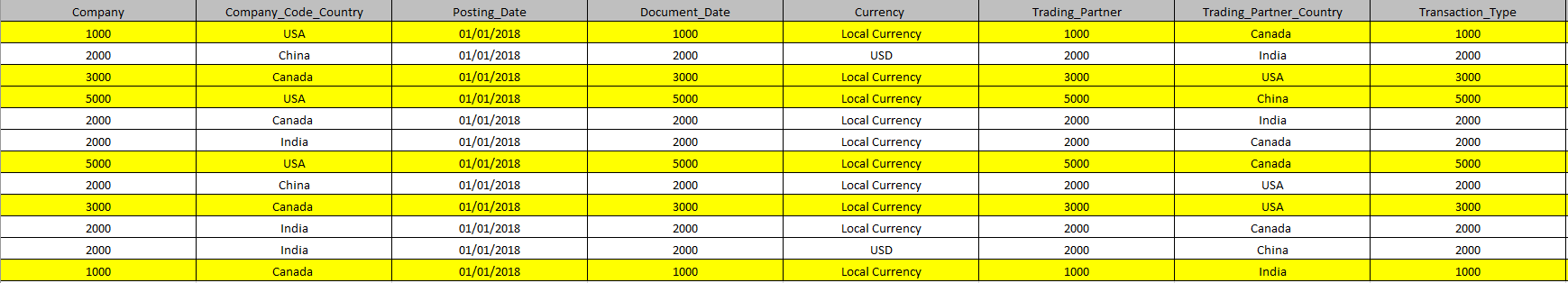

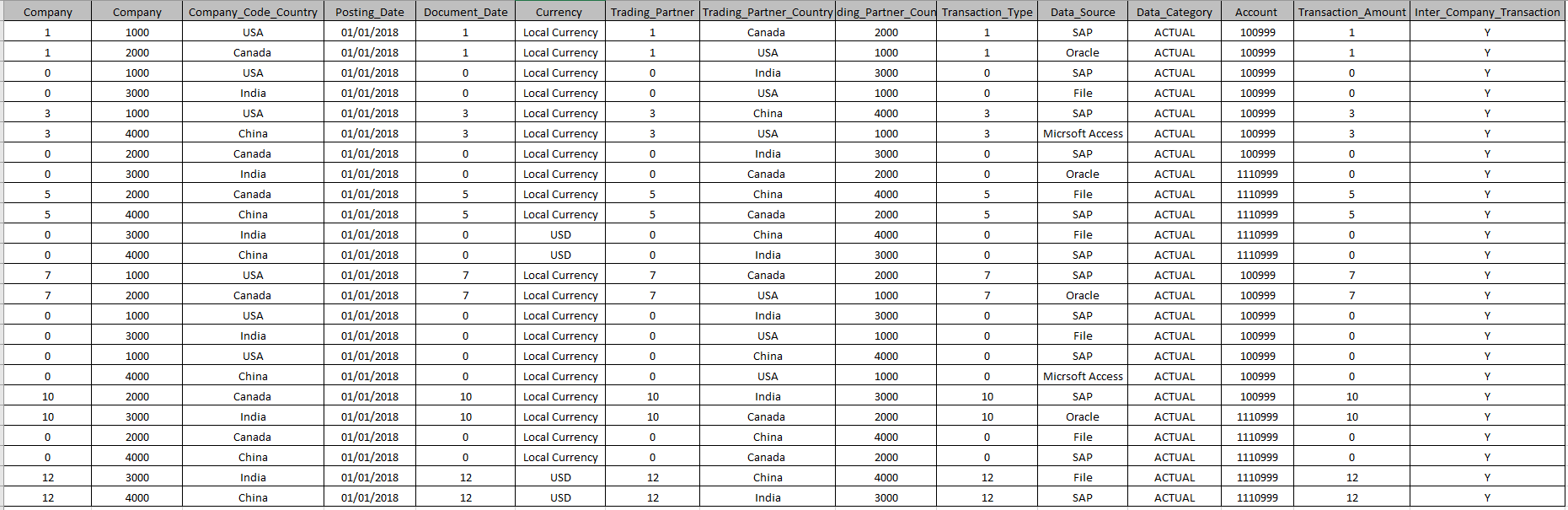

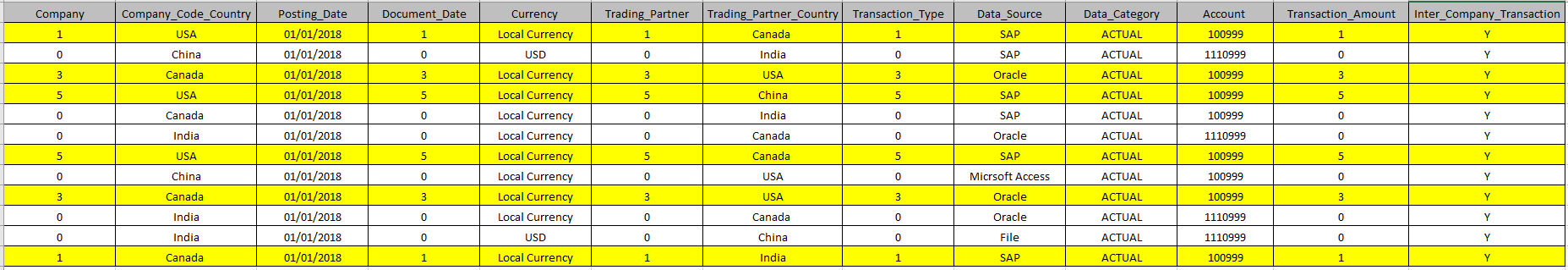

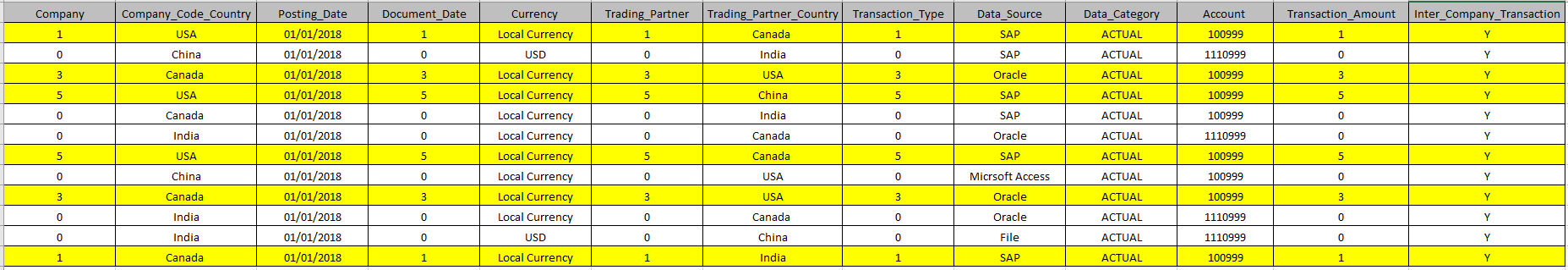

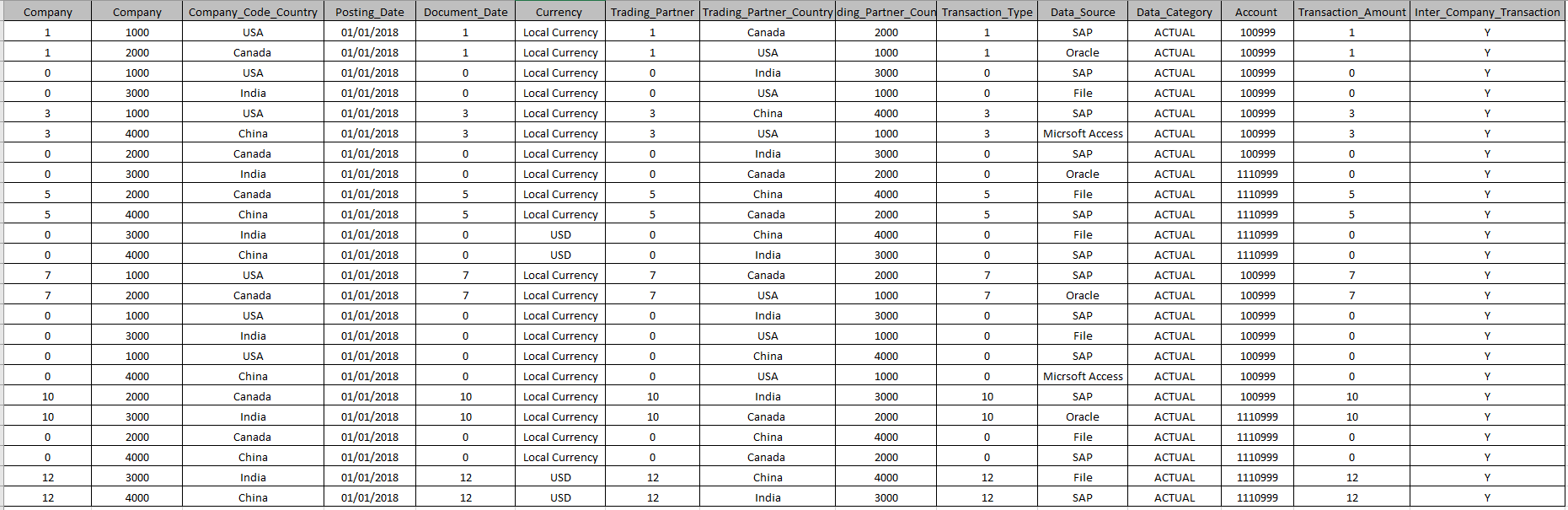

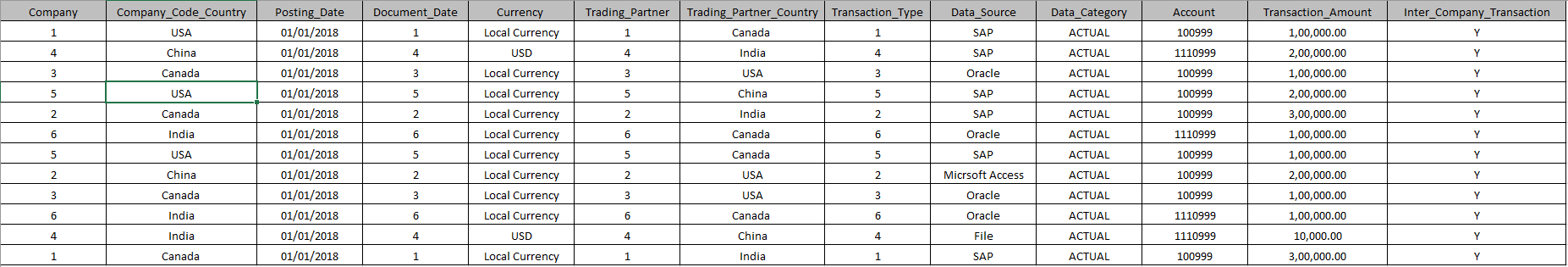

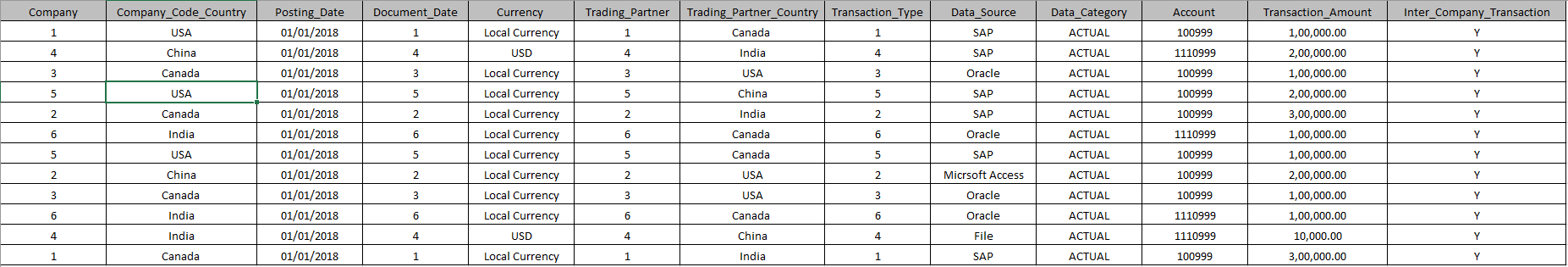

The test data set helps you validate that training was effective, measured for example by accuracy or precision. In other words, it is used to check whether the model responds appropriately to data it was not trained on.

The following section describes the features that are used in the model.

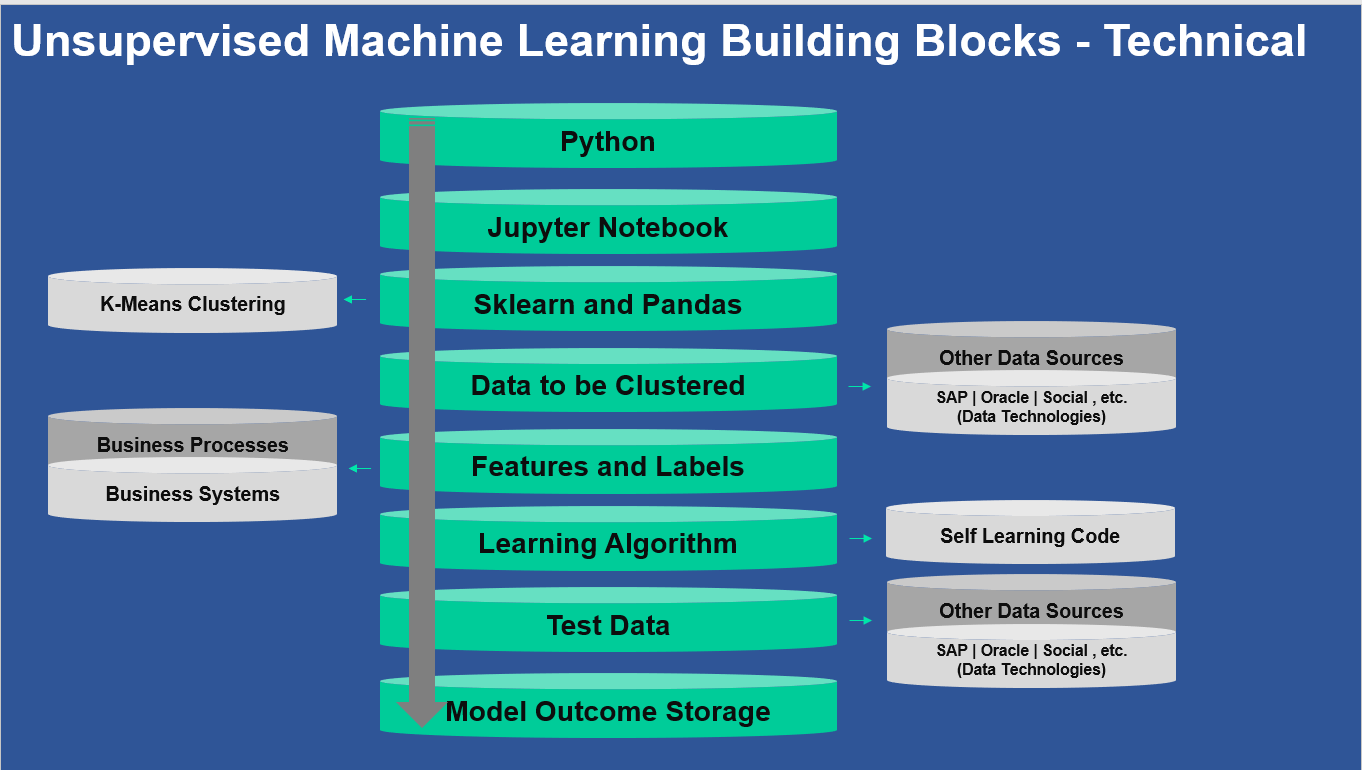

There are several technical and functional components involved in implementing this model. Here are the key building blocks to implement the model.

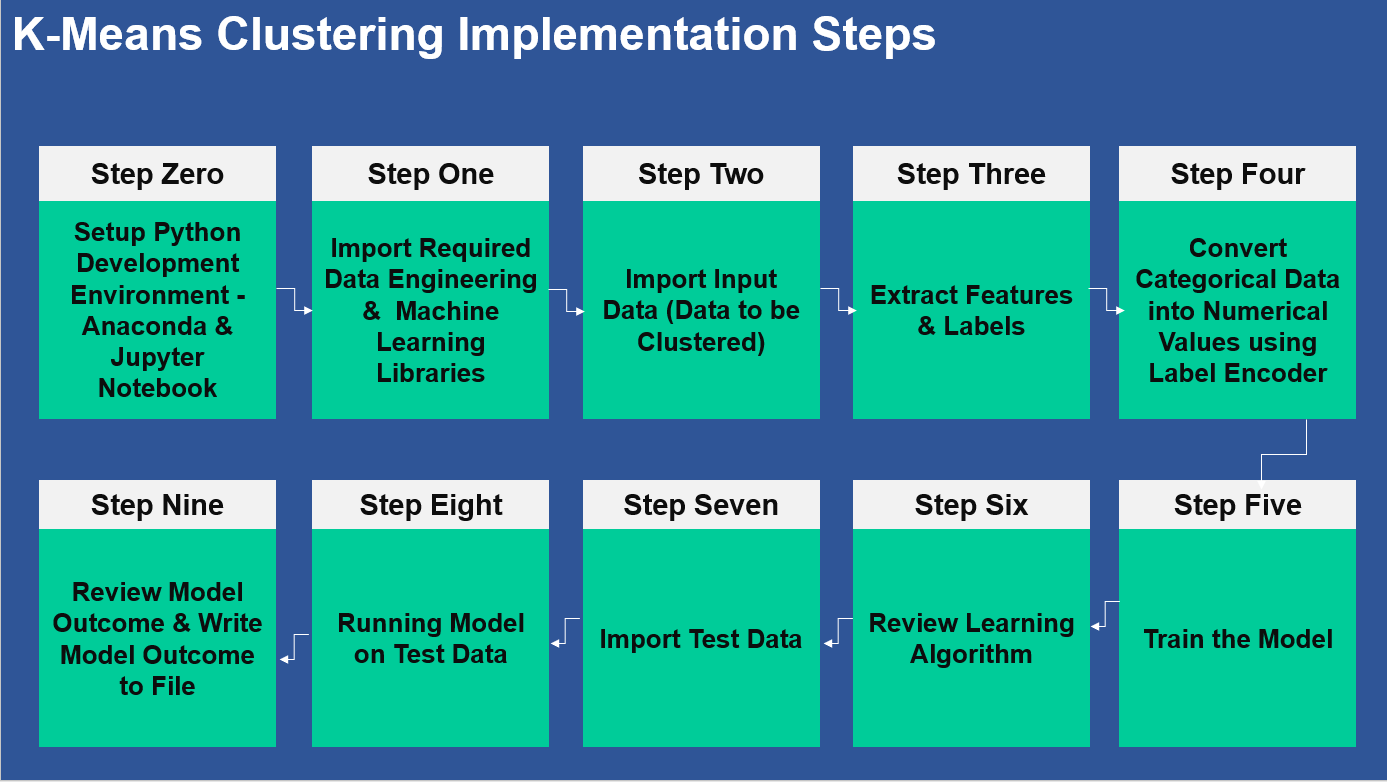

Implementing an AI model to address a given problem involves several steps. Here are the key ones. You can customize these steps as needed; they were developed for participant learning purposes only.

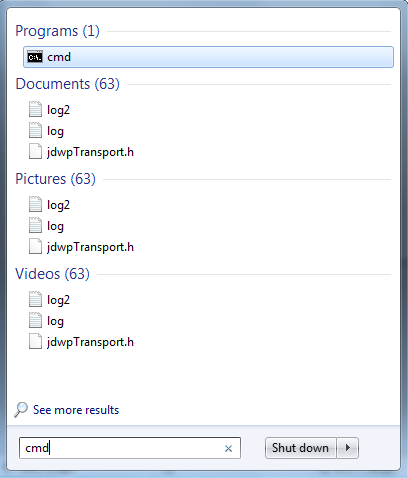

Jupiter notebook is launched through the command prompt. Type cmd & Search to Open Command prompt Terminal.

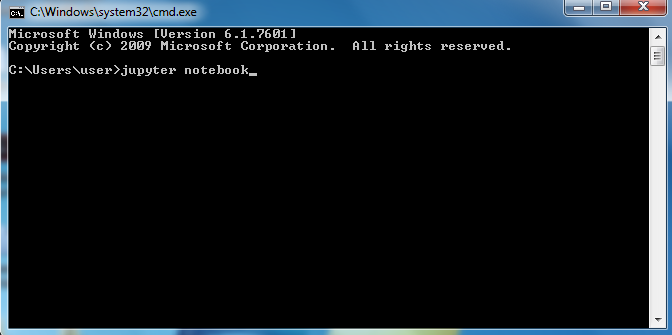

Now, Type Jupiter notebook & press Enter as shown

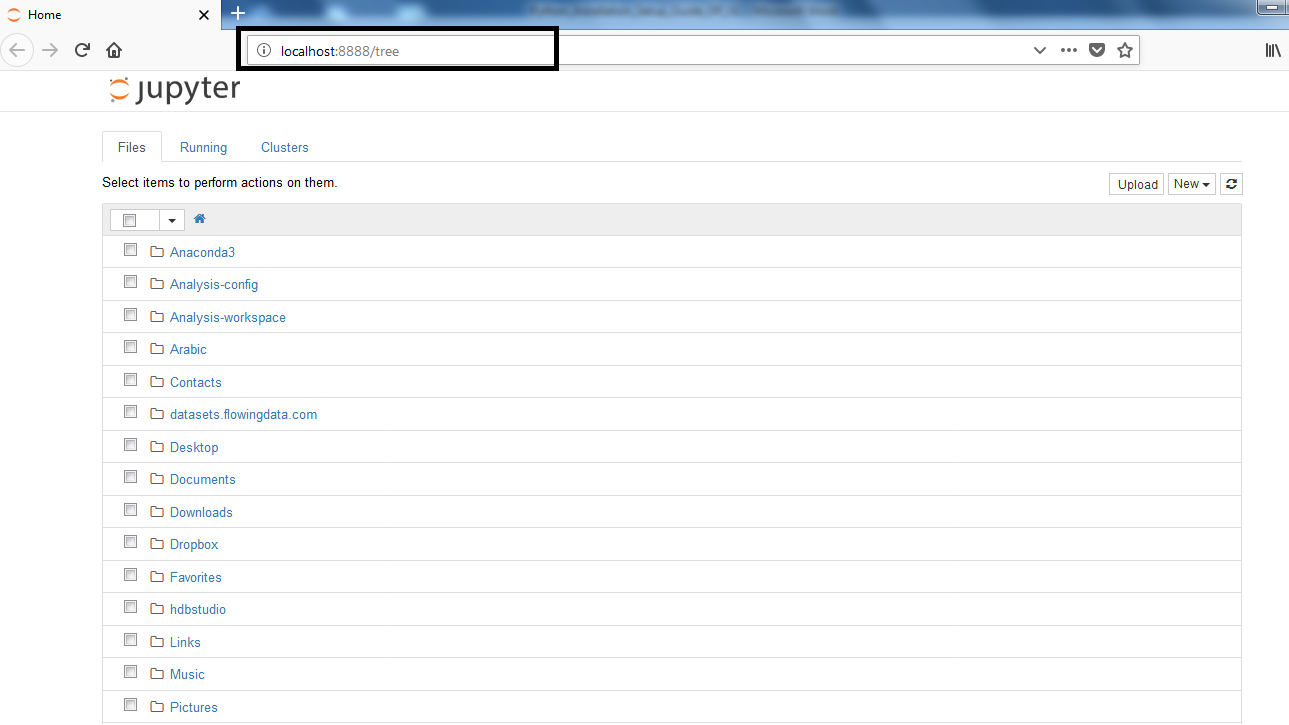

After typing, the Below Page opens

To Open a New File, follow the Below Instructions

Go to New >>> Python [conda root]

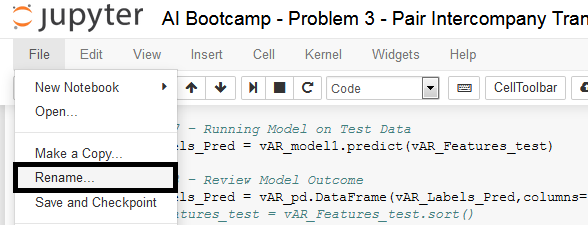

Give the file a meaningful name, as shown below.

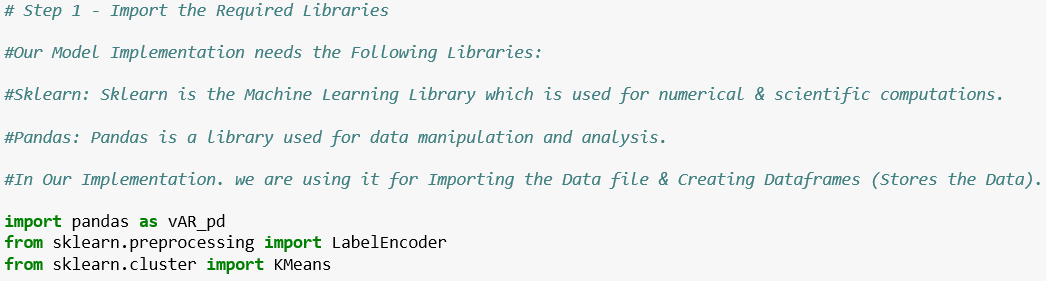

For our model implementation we need the following libraries:

Sklearn: scikit-learn (imported as sklearn) is the machine learning library. It is built on other libraries such as NumPy and SciPy, which are used for numerical and scientific computations.

Pandas: Pandas is a library for data manipulation and analysis. In our implementation we use it to import the data file and to create data frames, which store the data.

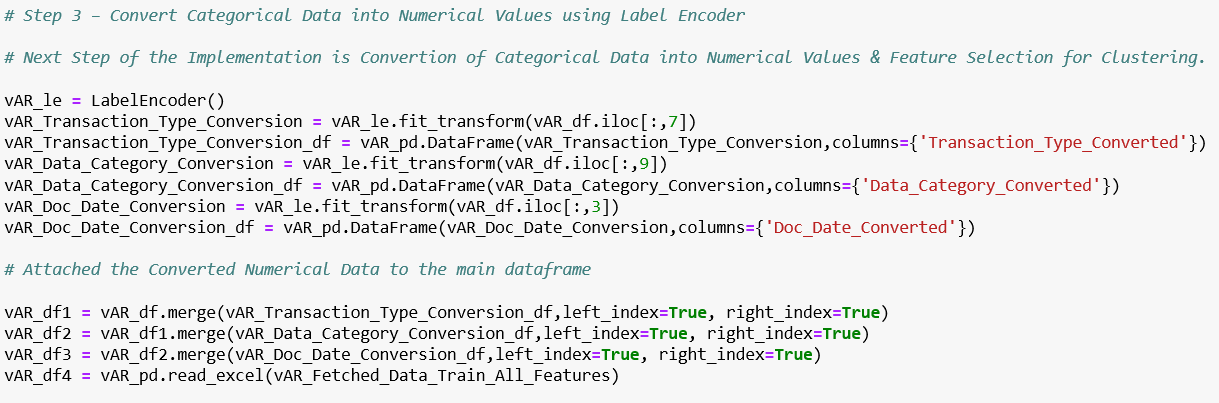

Step 3 of the implementation is feature selection. Machine learning works on a simple rule: if you put garbage in, you will only get garbage out. By garbage, we mean noise in the data. This becomes even more important when the number of features is very large. We need only those features (inputs) that the labels (outputs) depend on. For example, to predict whether a given fruit is an apple or an orange, the color or texture of the fruit is a feature to consider: if the color is red, it is an apple; if it is orange, it is an orange.

The selected features must be numerical. If they are not, they have to be converted from categorical values to numerical values. In our scenario we use Label Encoder for the conversion.
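The conversion from categorical to numerical values can be sketched in plain Python. This illustrates the idea behind label encoding (one integer per distinct category, assigned in sorted order) and is not the scikit-learn class itself; the function names and the sample transaction types are hypothetical.

```python
def fit_label_encoder(values):
    """Map each distinct category to an integer code, in sorted order."""
    return {category: code for code, category in enumerate(sorted(set(values)))}


def transform(values, mapping):
    """Replace every category with its integer code."""
    return [mapping[v] for v in values]


# Hypothetical categorical column.
transaction_types = ["Invoice", "Credit Memo", "Invoice", "Payment"]

mapping = fit_label_encoder(transaction_types)
encoded = transform(transaction_types, mapping)

print(mapping)
print(encoded)
```

One design consequence is worth keeping in mind: the integer codes carry an ordering that the categories do not have, and a distance-based model such as K-Means will treat that ordering as meaningful.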

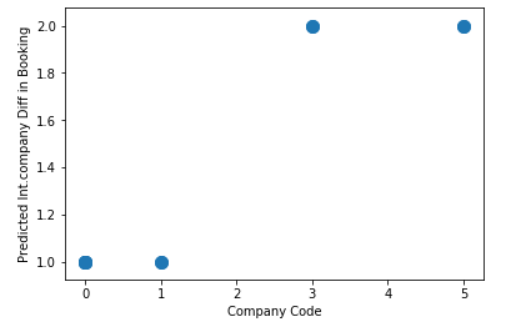

The selected features are Company, Document Date, Trading Partner and Transaction Type. The label, or target variable, is the cluster predicted by the model.
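As a rough sketch of how these four columns could be turned into numeric feature rows, the snippet below converts the date column to day counts and the remaining columns to integer codes. The records are invented for illustration, and using `date.toordinal()` for Document Date is an assumption; the lab may prepare the date differently.

```python
from datetime import date

FEATURES = ["Company", "Document Date", "Trading Partner", "Transaction Type"]

# Hypothetical raw records using the four selected feature names.
records = [
    {"Company": "A", "Document Date": date(2019, 1, 15),
     "Trading Partner": "B", "Transaction Type": "Invoice"},
    {"Company": "B", "Document Date": date(2019, 1, 15),
     "Trading Partner": "A", "Transaction Type": "Invoice"},
    {"Company": "A", "Document Date": date(2019, 2, 1),
     "Trading Partner": "C", "Transaction Type": "Payment"},
]


def to_numeric(column_values):
    """Dates become day counts; anything else becomes an integer code."""
    if all(isinstance(v, date) for v in column_values):
        return [v.toordinal() for v in column_values]
    codes = {c: i for i, c in enumerate(sorted(set(column_values)))}
    return [codes[v] for v in column_values]


# Convert column by column, then turn the columns back into rows.
columns = [to_numeric([r[name] for r in records]) for name in FEATURES]
feature_rows = [list(row) for row in zip(*columns)]

for row in feature_rows:
    print(row)
```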

In this lab, we used K-Means clustering, an unsupervised learning model, to identify intercompany transactions that have a difference in their bookings. The model performed well on the test data and predicted the outcome as expected. For further data analysis and business decisions, the model outcome is stored in persistent storage (a file).

This is a very basic implementation, intended to help you learn and better understand the overall steps and processes involved in implementing an unsupervised machine learning model. Real projects involve many more steps, processes, data and technologies. We strongly recommend that you learn more and prepare yourself to address real-world problems.

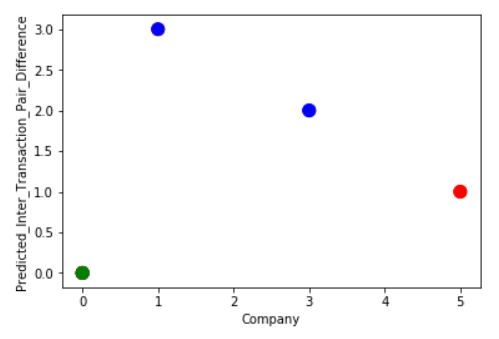

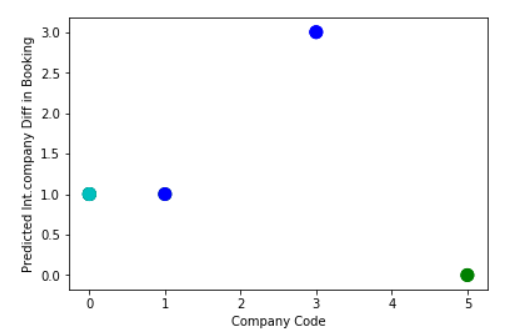

Fitting is a measure of how well a machine learning model generalizes to data similar to that on which it was trained. A well-fitted model produces more accurate outcomes, an overfitted model matches the training data too closely, and an underfitted model does not match it closely enough. Fitting is the essence of machine learning: if your model does not fit your data correctly, its outcomes will not be accurate enough to be useful for practical decision-making.

The model is best fitting when it performs well on the training examples and also performs well on unseen data. Ideally, a model that makes predictions with zero error is said to have the best fit on the data. In practice, the best fit lies at a point between overfitting and underfitting. To find it, we look at how the model's performance changes over time while it is learning from the training dataset.
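For a clustering model there are no true labels to score against, so one possible proxy for fit (an assumption of this sketch, not the method used in the lab) is the average squared distance from each point to its nearest centroid, compared between training data and unseen data. The centroids and sample points below are made up for illustration.

```python
import math


def mean_sq_distance(points, centroids):
    """Average squared distance from each point to its nearest centroid."""
    total = sum(min(math.dist(p, c) ** 2 for c in centroids) for p in points)
    return total / len(points)


# Hypothetical centroids, standing in for the output of a fitted model.
centroids = [(1.0, 1.0), (8.0, 8.0)]

train = [(1.0, 1.2), (0.8, 1.0), (8.1, 7.9), (7.9, 8.2)]
unseen_similar = [(1.1, 0.9), (8.0, 8.3)]   # resembles the training data
unseen_shifted = [(4.5, 4.5), (5.0, 4.0)]   # unlike anything seen in training

train_err = mean_sq_distance(train, centroids)
similar_err = mean_sq_distance(unseen_similar, centroids)
shifted_err = mean_sq_distance(unseen_shifted, centroids)

print(train_err, similar_err, shifted_err)
```

A small value on both the training data and the unseen data suggests a good fit. A small training value paired with a large value on unseen data suggests that the clusters do not generalize to that data.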

The training data set is the data used to train the model for machine learning tasks such as regression, classification or clustering. It is the data from which the model learns, through the library's APIs and algorithms, so that it can later work automatically.

The test data set helps you validate that training was effective, measured for example by accuracy or precision. In other words, it is used to check whether the model responds appropriately to data it was not trained on.

    # Best-fit check, example 1: plot column 13 against the company column.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Bestfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Best_Fit_File_Example_1, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value; recent matplotlib releases
            # reject a string such as 'rgb' as a color.
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Best_Fit_Image_Example_1)

        Verify_Model_Bestfit()

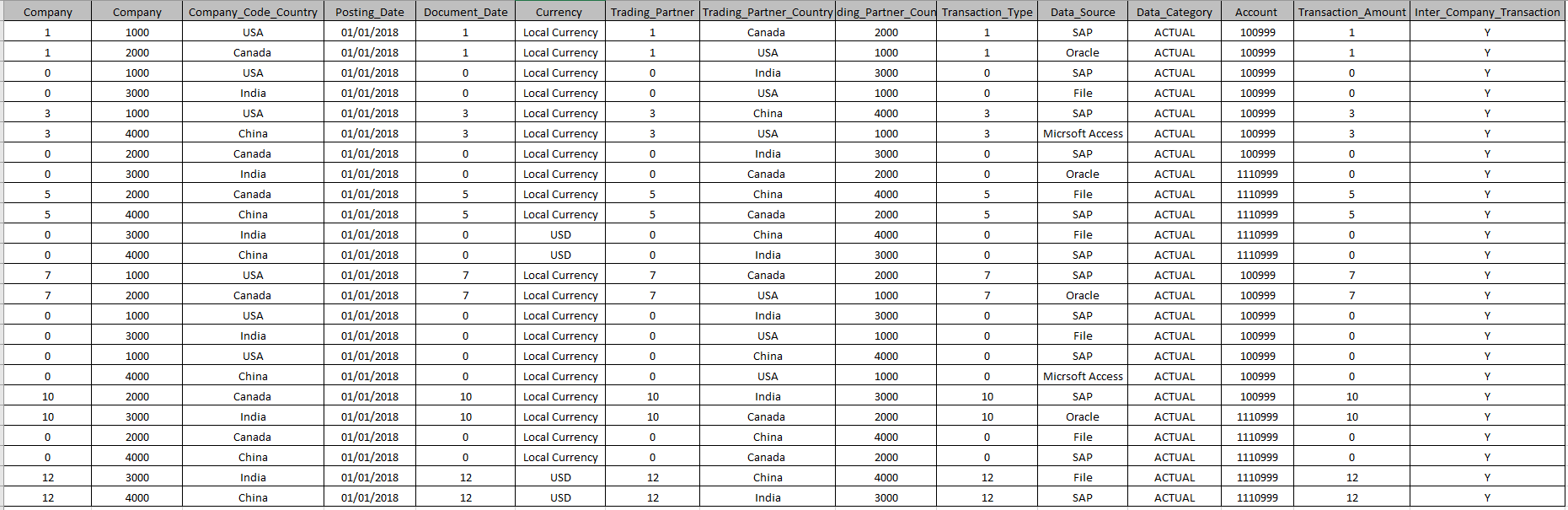


    # Best-fit check, example 2.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Bestfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Best_Fit_File_Example_2, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Best_Fit_Image_Example_2)

        Verify_Model_Bestfit()


    # Best-fit check, example 3.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Bestfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Best_Fit_File_Example_3, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Best_Fit_Image_Example_3)

        Verify_Model_Bestfit()

The model is overfitting when it performs well on the training examples but does not perform well on unseen data. This is often the result of an excessively complex model: the model memorizes the relationship between the input examples (often called X) and the target variable (often called y), and so it is unable to generalize well. An overfitting model predicts the targets in the training data set very accurately.


    # Overfit check, example 1.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Overfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Over_Fit_File_Example_1, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Over_Fit_Image_Example_1)

        Verify_Model_Overfit()


    # Overfit check, example 2.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Overfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Over_Fit_File_Example_2, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Over_Fit_Image_Example_2)

        Verify_Model_Overfit()


    # Overfit check, example 3.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Overfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Over_Fit_File_Example_3, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Over_Fit_Image_Example_3)

        Verify_Model_Overfit()

The predictive model is said to be underfitting if it performs poorly even on the training data. This happens because the model is unable to capture the relationship between the input examples and the target variable, for instance because the model is too simple or because the input features are not expressive enough to describe the target variable well. An underfitting model does not predict the targets in the training data set accurately. Underfitting is usually addressed by using a more expressive model or more informative features, rather than by removing features.


    # Underfit check, example 1.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Underfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Under_Fit_File_Example_1, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Under_Fit_Image_Example_1)

        Verify_Model_Underfit()


    # Underfit check, example 2.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Underfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Under_Fit_File_Example_2, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Under_Fit_Image_Example_2)

        Verify_Model_Underfit()


    # Underfit check, example 3.
    import matplotlib.pyplot as vAR_plt

    if vAR_Fetched_Data_Model_Fitting_Best_Fit_Test == 'Y':
        def Verify_Model_Underfit():
            vAR_df8 = vAR_pd.read_csv(vAR_Fetched_Data_Under_Fit_File_Example_3, encoding='utf-8')
            # Join the predicted cluster labels on the row index.
            vAR_df9 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Color each point by its y value (column 13).
            vAR_plt.scatter(vAR_df9.iloc[:, 0], vAR_df9.iloc[:, 13], s=100, c=vAR_df9.iloc[:, 13])
            vAR_plt.xlabel('Company')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            vAR_plt.savefig(vAR_Fetched_Data_Under_Fit_Image_Example_3)

        Verify_Model_Underfit()

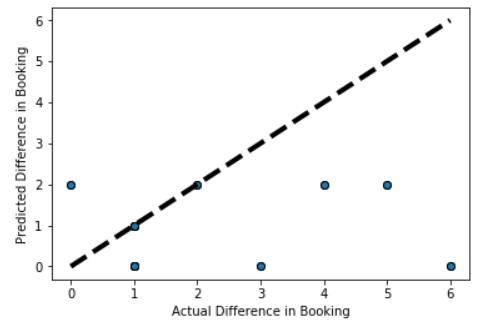

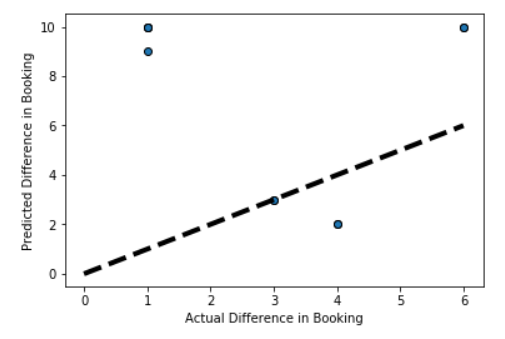

Cross-validation is a technique in which we train the model on a subset of the data set and then evaluate it on the complementary subset.
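The splitting step can be sketched in plain Python. The helper below is a hypothetical illustration of k-fold splitting with contiguous, unshuffled folds: each fold serves once as the evaluation subset while the remaining rows form the training subset.

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_indices, test_indices) for contiguous, unshuffled folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
        for i in range(n_folds)
    ]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n_samples) if i < start or i >= stop]
        yield train, test
        start = stop


folds = list(k_fold_indices(n_samples=10, n_folds=4))
for train, test in folds:
    print("train:", train, "test:", test)
```

Every row appears in exactly one evaluation subset, so each prediction is made by a model that did not see that row during training.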


    # Cross-validation, example 1: 2 folds, all rows plotted.
    if vAR_Fetched_Data_Cross_Validation_Required == 'Y':
        from sklearn.model_selection import cross_val_predict
        from sklearn.cluster import KMeans
        import matplotlib.pyplot as vAR_plt

        vAR_model = KMeans(n_clusters=7, random_state=0)
        vAR_model.fit(vAR_df4)
        # Cluster assignments from the model fitted on the full data set.
        vAR_Labels_Pred = vAR_model.labels_
        # Out-of-fold cluster predictions. Cluster numbers are arbitrary for
        # each fit, so they need not line up with vAR_Labels_Pred.
        vAR_Predicted = cross_val_predict(vAR_model, vAR_df4, cv=2)

        vAR_fig, vAR_ax = vAR_plt.subplots()
        vAR_ax.scatter(vAR_Labels_Pred, vAR_Predicted, edgecolors=(0, 0, 0))
        # Dashed diagonal: where points would fall if both labelings agreed.
        vAR_ax.plot([vAR_Labels_Pred.min(), vAR_Labels_Pred.max()],
                    [vAR_Labels_Pred.min(), vAR_Labels_Pred.max()], 'k--', lw=4)
        vAR_ax.set_xlabel('Actual Difference in Booking')
        vAR_ax.set_ylabel('Predicted Difference in Booking')
        # vAR_plt.show()
        vAR_plt.savefig(vAR_Fetched_Data_Cross_Validation_Image_Example_1)


    # Cross-validation, example 2: 4 folds, first 15 rows plotted.
    if vAR_Fetched_Data_Cross_Validation_Required == 'Y':
        from sklearn.model_selection import cross_val_predict
        from sklearn.cluster import KMeans
        import matplotlib.pyplot as vAR_plt

        vAR_model = KMeans(n_clusters=7, random_state=0)
        vAR_model.fit(vAR_df4)
        # Cluster assignments from the model fitted on the full data set.
        vAR_Labels_Pred = vAR_model.labels_
        # Out-of-fold cluster predictions. Cluster numbers are arbitrary for
        # each fit, so they need not line up with vAR_Labels_Pred.
        vAR_Predicted = cross_val_predict(vAR_model, vAR_df4, cv=4)

        vAR_fig, vAR_ax = vAR_plt.subplots()
        vAR_ax.scatter(vAR_Labels_Pred[:15], vAR_Predicted[:15], edgecolors=(0, 0, 0))
        # Dashed diagonal: where points would fall if both labelings agreed.
        vAR_ax.plot([vAR_Labels_Pred.min(), vAR_Labels_Pred.max()],
                    [vAR_Labels_Pred.min(), vAR_Labels_Pred.max()], 'k--', lw=4)
        vAR_ax.set_xlabel('Actual Difference in Booking')
        vAR_ax.set_ylabel('Predicted Difference in Booking')
        # vAR_plt.show()
        vAR_plt.savefig(vAR_Fetched_Data_Cross_Validation_Image_Example_2)


    # Cross-validation, example 3: 4 folds, first 10 rows plotted.
    if vAR_Fetched_Data_Cross_Validation_Required == 'Y':
        from sklearn.model_selection import cross_val_predict
        from sklearn.cluster import KMeans
        import matplotlib.pyplot as vAR_plt

        vAR_model = KMeans(n_clusters=7, random_state=0)
        vAR_model.fit(vAR_df4)
        # Cluster assignments from the model fitted on the full data set.
        vAR_Labels_Pred = vAR_model.labels_
        # Out-of-fold cluster predictions. Cluster numbers are arbitrary for
        # each fit, so they need not line up with vAR_Labels_Pred.
        vAR_Predicted = cross_val_predict(vAR_model, vAR_df4, cv=4)

        vAR_fig, vAR_ax = vAR_plt.subplots()
        vAR_ax.scatter(vAR_Labels_Pred[:10], vAR_Predicted[:10], edgecolors=(0, 0, 0))
        # Dashed diagonal: where points would fall if both labelings agreed.
        vAR_ax.plot([vAR_Labels_Pred.min(), vAR_Labels_Pred.max()],
                    [vAR_Labels_Pred.min(), vAR_Labels_Pred.max()], 'k--', lw=4)
        vAR_ax.set_xlabel('Actual Difference in Booking')
        vAR_ax.set_ylabel('Predicted Difference in Booking')
        # vAR_plt.show()
        vAR_plt.savefig(vAR_Fetched_Data_Cross_Validation_Image_Example_3)

Hyperparameter optimization, or tuning, is the problem of choosing a set of optimal hyperparameters for a learning algorithm. The same kind of machine learning model can require different constraints, weights or learning rates to generalize to different data patterns. These settings are called hyperparameters, and they have to be tuned so that the model can optimally solve the machine learning problem. Hyperparameter optimization finds a tuple of hyperparameters that yields an optimal model, one that minimizes a predefined loss function on given independent data. For K-Means, the main hyperparameter is the number of clusters (n_clusters).
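A simple form of tuning is a grid search: try each candidate value, record the loss, and keep the value that satisfies a chosen criterion. The sketch below does this for the number of clusters on one-dimensional data, using the within-cluster sum of squared distances (inertia) as the loss. The data, the initialization scheme and the 1% threshold are assumptions made for the example, not values from the lab.

```python
def kmeans_1d(values, k, max_iter=100):
    """Lloyd's algorithm on 1-D data; returns (centroids, inertia)."""
    data = sorted(values)
    # Deterministic start: k values spread evenly across the sorted data.
    centroids = [data[(i * (len(data) - 1)) // max(k - 1, 1)] for i in range(k)]
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for v in data:
            nearest = min(range(k), key=lambda j: abs(v - centroids[j]))
            clusters[nearest].append(v)
        updated = [
            sum(c) / len(c) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    # Loss: squared distance of every value to its nearest centroid.
    inertia = sum(min((v - c) ** 2 for c in centroids) for v in data)
    return centroids, inertia


# Hypothetical amounts forming three obvious groups.
amounts = [10, 11, 12, 50, 51, 52, 90, 91, 92]

# Grid search over the candidate numbers of clusters.
inertia_by_k = {k: kmeans_1d(amounts, k)[1] for k in range(1, 6)}

# Assumed stopping rule: the smallest k whose inertia falls to 1% or less
# of the single-cluster inertia.
best_k = min(k for k, v in inertia_by_k.items() if v <= 0.01 * inertia_by_k[1])

print(inertia_by_k)
print("chosen k:", best_k)
```

Inertia always shrinks as clusters are added, so the smallest loss alone cannot pick the number of clusters; some rule such as the threshold above (or a visual elbow check) is needed.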


    # Before hyperparameter tuning: K-Means with n_clusters=6.
    if vAR_Fetched_Data_Hyperparameter_Tuning_Required == 'Y':
        def Before_Hyperparameter_Tuning():
            from sklearn.cluster import KMeans
            import matplotlib.pyplot as vAR_plt

            vAR_model = KMeans(n_clusters=6, random_state=0)
            vAR_model.fit(vAR_df4)
            vAR_df6 = vAR_pd.read_excel(vAR_Fetched_Data_Source_Path_Test_Data)
            vAR_Features_Test = vAR_pd.read_excel(vAR_Fetched_Data_Test_All_Features)
            # Predict a cluster for every test row.
            vAR_Labels_Pred = vAR_model.predict(vAR_Features_Test).astype(int)
            vAR_Labels_Pred = vAR_pd.DataFrame(
                vAR_Labels_Pred, columns=['Predicted_Inter_Transaction_Pair_Difference_Booking'])
            vAR_df7 = vAR_pd.read_csv(vAR_Fetched_Data_Under_Fit_File_Example_1, encoding='utf-8')
            # Drop the last column, then join the new predictions on the row index.
            vAR_df8 = vAR_df7.iloc[:, :-1]
            vAR_df10 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Plot the merged frame, coloring each point by its y value (column 13).
            vAR_plt.scatter(vAR_df10.iloc[:, 0], vAR_df10.iloc[:, 13], s=100, c=vAR_df10.iloc[:, 13])
            vAR_plt.xlabel('Company Code')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            # vAR_plt.savefig(vAR_Fetched_Data_Before_Hyperparameter_Tuning_Image)

        Before_Hyperparameter_Tuning()


    # After hyperparameter tuning: K-Means with n_clusters=12.
    if vAR_Fetched_Data_Hyperparameter_Tuning_Required == 'Y':
        def After_Hyperparameter_Tuning():
            from sklearn.cluster import KMeans
            import matplotlib.pyplot as vAR_plt

            vAR_model = KMeans(n_clusters=12, random_state=0)
            vAR_model.fit(vAR_df4)
            vAR_df6 = vAR_pd.read_excel(vAR_Fetched_Data_Source_Path_Test_Data)
            vAR_Features_Test = vAR_pd.read_excel(vAR_Fetched_Data_Test_All_Features)
            # Predict a cluster for every test row.
            vAR_Labels_Pred = vAR_model.predict(vAR_Features_Test).astype(int)
            vAR_Labels_Pred = vAR_pd.DataFrame(
                vAR_Labels_Pred, columns=['Predicted_Inter_Transaction_Pair_Difference_Booking'])
            vAR_df7 = vAR_pd.read_csv(vAR_Fetched_Data_Under_Fit_File_Example_1, encoding='utf-8')
            # Drop the last column, then join the new predictions on the row index.
            vAR_df8 = vAR_df7.iloc[:, :-1]
            vAR_df10 = vAR_df8.merge(vAR_Labels_Pred, left_index=True, right_index=True)
            # Plot the merged frame, coloring each point by its y value (column 13).
            vAR_plt.scatter(vAR_df10.iloc[:, 0], vAR_df10.iloc[:, 13], s=100, c=vAR_df10.iloc[:, 13])
            vAR_plt.xlabel('Company Code')
            vAR_plt.ylabel('Predicted_Inter_Transaction_Pair_Difference_Booking')
            # vAR_plt.show()
            # vAR_plt.savefig(vAR_Fetched_Data_Before_Hyperparameter_Tuning_Image)

        After_Hyperparameter_Tuning()

Our team is comprised of MIT learning facilitators, Harvard PhDs, Stanford alumni, leading management consulting experts, industry leaders and proven entrepreneurs. Collectively, our team brings business and technology together with risk-free implementation of artificial intelligence for enterprise.

Jothi Periasamy

Chief AI Architect

2100 Geng Road

Suite 210

Palo Alto

CA 94303

(916)-296-0228